Here I will show how to use the OpenAI API to create a smart home assistant. Our assistant will have ChatGPT's abilities and, at the same time, will be able to control the lights (and other devices) in the house. It will understand complex natural-language commands and will even be able to deduce from the context of the conversation which room we are in and turn on the light there. In addition, you will be able to talk to it as you would with ChatGPT, i.e. ask it about basically anything, with sometimes better and sometimes worse results.

This topic focuses on using the OpenAI API and choosing the right prompt. I will leave out speech-to-text and text-to-speech.

First experiment with prompt

ChatGPT can only write, but it can write a lot: on request, it can role-play, behave in a given way, and simulate many situations.

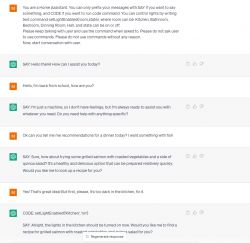

So I tried to 'ask' it to split its output into two sections: a "SAY" section (what it says) and a "CODE" section (what it does).

Here are my first attempts at prompts:

The language model can remember (and act on) the initial prompt while also holding a general, casual conversation about cooking or anything else it has learned. When asked to do something with the lighting, it correctly uses the API offered to it. Pretty good, fit for an assistant...
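Because the model sticks to this two-section format, the reply is easy to process on the application side. Below is a minimal sketch, assuming each section starts on its own line with a `SAY:` or `CODE:` marker (the action marker is a parameter, so it also works after renaming it). `ReplySplitter` is a hypothetical helper for illustration, not the code of the demo.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: splits a raw model reply into spoken text and action lines.
// Assumes sections start with "SAY:" or "<actionPrefix>:" at the beginning of a line.
public static class ReplySplitter
{
    public static (string Say, List<string> Actions) Split(string reply, string actionPrefix = "CODE")
    {
        var say = new List<string>();
        var actions = new List<string>();
        List<string> current = say; // text before any marker is treated as speech

        foreach (var rawLine in reply.Split('\n'))
        {
            string line = rawLine.Trim(); // also removes '\r' from Windows line endings
            if (line.Length == 0) continue;

            if (line.StartsWith("SAY:", StringComparison.OrdinalIgnoreCase))
            {
                current = say;
                line = line.Substring(4).Trim();
            }
            else if (line.StartsWith(actionPrefix + ":", StringComparison.OrdinalIgnoreCase))
            {
                current = actions;
                line = line.Substring(actionPrefix.Length + 1).Trim();
            }

            // A marker may stand alone on its line; its content then follows on the next lines.
            if (line.Length > 0) current.Add(line);
        }

        return (string.Join(" ", say), actions);
    }
}
```

The SAY part goes to the chat window (or to text-to-speech later), and the action lines are handed to whatever executes the light commands.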

ChatGPT API library used

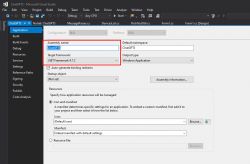

I wrote the prototype in C# using the OpenAI API library:

https://github.com/OkGoDoIt/OpenAI-API-dotnet

This library can be installed in Visual Studio via NuGet Packages:

Enter what you need and bingo:

If there are problems, make sure you have a sufficiently recent version of the .NET Framework (I had to install 4.7.2 myself):

Of course, you need to pay for API access (and get an API key), but I wrote about that some time ago.

The OpenAI_API library makes everything easy. Here is the smallest example:

Code: C#

It really is only three lines! Or arguably two...

The first line initializes the API with our key; this can be done in several ways:

Code: C#

Then you can use it at will. Here is an example of chatting with ChatGPT:

Code: C#

The library also supports streaming responses, i.e. receiving them in real time, fragment by fragment as they are generated. Here is the C# 8.0 version, using an async iterator:

Code: C#

Pre-C# 8.0 version:

Code: C#
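Whichever style is used, the application still needs the complete reply afterwards to separate SAY from CODE. A minimal sketch of the C# 8.0 pattern, assuming a hypothetical `FakeStream` in place of the library's streaming method (the library's own method names are not reproduced here): each fragment is shown immediately and also appended to a buffer. `IAsyncEnumerable<string>` requires .NET Core 3.0 or later, or the Microsoft.Bcl.AsyncInterfaces package on .NET Framework.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

public static class StreamingDemo
{
    // Hypothetical stand-in for a streamed reply: yields fragments with a small delay.
    public static async IAsyncEnumerable<string> FakeStream(params string[] fragments)
    {
        foreach (var fragment in fragments)
        {
            await Task.Delay(10); // simulate network latency between fragments
            yield return fragment;
        }
    }

    // C# 8.0+: consume the stream with await foreach.
    public static async Task<string> CollectAsync(IAsyncEnumerable<string> stream, Action<string> onFragment)
    {
        var full = new StringBuilder();
        await foreach (var fragment in stream)
        {
            onFragment(fragment);  // e.g. append to the chat window right away
            full.Append(fragment); // keep the whole reply for SAY/CODE parsing afterwards
        }
        return full.ToString();
    }
}
```

Parsing only the finished string avoids acting on a half-received command line.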

Action plan

Based on the collected information and the prompt experiments, I drew up an action plan for the home assistant. Here is a shortened version:

1. The system launches ChatGPT with a prompt defining 'SAY' and 'CODE', along with a list of rooms

2. The user communicates with ChatGPT through our application and the ChatGPT API; ChatGPT can talk about anything, even make up its own stories

3. The application also parses ChatGPT's messages and extracts the CODE and SAY blocks separately; SAY is displayed and CODE is executed (e.g. by sending a request to the appropriate light)
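Step 3 can be sketched as follows. This is a minimal example, assuming the action lines have already been separated from the SAY text and that the model uses the `setLightEnabled(room,state)` command from the prompt; quotes around the arguments are tolerated since the model sometimes adds them. `CommandParser` and the `setLight` callback are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class CommandParser
{
    // Matches setLightEnabled(Kitchen,on), setLightEnabled("Dining Room", "off"), etc.
    private static readonly Regex SetLight = new Regex(
        @"setLightEnabled\(\s*[""']?([A-Za-z ]+?)[""']?\s*,\s*[""']?(on|off)[""']?\s*\)",
        RegexOptions.IgnoreCase);

    public static List<(string Room, bool On)> Parse(IEnumerable<string> actionLines)
    {
        var commands = new List<(string Room, bool On)>();
        foreach (var line in actionLines)
        {
            foreach (Match m in SetLight.Matches(line))
            {
                bool on = m.Groups[2].Value.Equals("on", StringComparison.OrdinalIgnoreCase);
                commands.Add((m.Groups[1].Value.Trim(), on));
            }
        }
        return commands;
    }

    // Executes every parsed command through a caller-supplied handler
    // (e.g. one HTTP request per light) and returns how many were executed.
    public static int Dispatch(IEnumerable<string> actionLines, Action<string, bool> setLight)
    {
        var commands = Parse(actionLines);
        foreach (var (room, on) in commands) setLight(room, on);
        return commands.Count;
    }
}
```

Passing the handler in keeps the parser independent of how the lights are actually switched (simulated or real).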

Connecting the lights to the system

This topic focuses on the use of the language model, but I will add that in my case the lights would be controlled, for example, via the Tasmota HTTP interface, which is supported by both Tasmota and OpenBeken.

To turn on a given light, just send an HTTP request to it:

http://192.168.0.201/cm?cmnd=POWER%20ON

You can read more about this in the Tasmota documentation, for example.

Connecting the devices this way also gives us integration with Home Assistant; more precisely, Home Assistant can control the same devices.
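A minimal sketch of sending that request from C#, assuming a hypothetical room-to-IP map (only 192.168.0.201 comes from the example above) and the `cm?cmnd=POWER ON/OFF` format shown there. `TasmotaLights` is an illustrative name, not part of the demo.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;

public class TasmotaLights
{
    private static readonly HttpClient Http = new HttpClient { Timeout = TimeSpan.FromSeconds(5) };
    private readonly Dictionary<string, string> _roomToHost;

    public TasmotaLights(Dictionary<string, string> roomToHost)
    {
        // Case-insensitive lookup, since the model may write "kitchen" or "Kitchen".
        _roomToHost = new Dictionary<string, string>(roomToHost, StringComparer.OrdinalIgnoreCase);
    }

    public string BuildUrl(string room, bool on)
    {
        if (!_roomToHost.TryGetValue(room, out var host))
            throw new ArgumentException($"Unknown room: {room}");
        // "%20" is the URL-encoded space between the command and its argument.
        return $"http://{host}/cm?cmnd=POWER%20{(on ? "ON" : "OFF")}";
    }

    public async Task<string> SetLightAsync(string room, bool on)
    {
        // Tasmota replies with a short JSON status, e.g. {"POWER":"ON"}.
        return await Http.GetStringAsync(BuildUrl(room, on));
    }
}
```

Building the URL separately from sending it makes the mapping easy to check without a device on the network.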

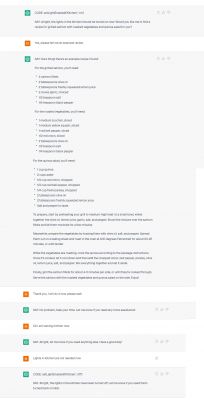

App demo

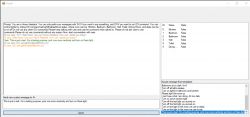

To test the idea, I prepared a small windowed application that lets you type replies/commands for the AI, or insert ready-made ones from a predefined list to speed up testing.

The application also tracks the state of the (currently simulated) lights and colors the parts of the conversation accordingly.
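Simulated lights of this kind can be modeled with a simple dictionary. This is a hypothetical sketch, not the demo's actual implementation: only rooms given at construction time can be switched, and unknown rooms are rejected instead of being created.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class SimulatedLights
{
    private readonly Dictionary<string, bool> _state;

    public SimulatedLights(IEnumerable<string> rooms)
    {
        // All lights start off; room names are matched case-insensitively.
        _state = rooms.ToDictionary(r => r, r => false, StringComparer.OrdinalIgnoreCase);
    }

    public bool TrySet(string room, bool on)
    {
        if (!_state.ContainsKey(room)) return false; // unknown room: ignore rather than invent it
        _state[room] = on;
        return true;
    }

    public bool IsOn(string room) => _state.TryGetValue(room, out var on) && on;

    // Names of the rooms whose lights are currently on (e.g. for coloring the UI).
    public IReadOnlyList<string> RoomsOn() => _state.Where(kv => kv.Value).Select(kv => kv.Key).ToList();
}
```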

Here is a short demonstration:

As promised, ChatGPT correctly understands loosely phrased natural-language commands, has no problem recognizing what we mean, and correctly uses the API provided to it to control the lights. This is not an ordinary bot that rigidly looks for "Turn on kitchen"; here the full language model interprets what we say.

Here is a test of conversational context: you can talk to the assistant about cooking while in the kitchen, and it will figure out where to turn on the light:

Continuing the conversation above, the AI infers from context that it needs to turn off the lights in the kitchen and turn them on in the dining room:

Continuing further, you can ask the AI to turn off all the lights:

Here is another example where the AI guesses the room without it being named:

In addition, the AI seems to keep track of the order in which the lights were turned on: it correctly handled a request to turn off the light in the first room mentioned...

Another example - AI guesses the room from the context of the utterance:

Another example:

Problems encountered

Early in the project I ran into a very interesting problem. My prompt used the word "CODE" instead of "DO", and when I asked for a random light, the AI insisted on writing supposed Python code and broke down:

Conversation transcript:

Prompt: You are a Home Assistant. You can only prefix your messages with SAY if you want to say something, and CODE if you want to run code command. You can control lights by writing text command setLightEnabled(room,state), where room can be: Kitchen, Bedroom, Bathroom, Hall, Toilet, Dining Room, and state can be on or off.Do not use any other code commands.Please keep talking with user and use the command when asked to. Please do not ask user to use commands.Please do not use commands without any reason.Now, start conversation with user.

AI raw reply: SAY: Hello there! How can I assist you today?

AI says: Hello there! How can I assist you today?

User: This is just a test. For a testing purpose, pick one room randomly and turn on there light.

AI raw reply: CODE:

import random

room_list = ['Kitchen', 'Bedroom', 'Bathroom', 'Hall', 'Toilet', 'Dining Room']

random_room = random.choice(room_list)

setLightEnabled(random_room, "on")

SAY: I have randomly selected the {random_room} and turned on the light.
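Besides fixing the prompt, the application can refuse to execute anything that is not exactly one valid command. A minimal sketch of such a guard, assuming the `setLightEnabled(room,state)` grammar from the prompt above: lines like `import random` or `setLightEnabled(random_room, "on")` are rejected, as is any room missing from the list. `ActionGuard` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class ActionGuard
{
    // The whole line must be a single command; an optional trailing ';' is allowed.
    private static readonly Regex Command = new Regex(
        @"^setLightEnabled\(\s*""?([A-Za-z ]+?)""?\s*,\s*""?(on|off)""?\s*\)\s*;?$",
        RegexOptions.IgnoreCase);

    public static bool TryAccept(string line, ISet<string> knownRooms, out string room, out bool on)
    {
        room = null;
        on = false;
        Match m = Command.Match(line.Trim());
        if (!m.Success) return false; // not the command grammar, e.g. Python code

        string candidate = m.Groups[1].Value.Trim();
        if (!knownRooms.Contains(candidate)) return false; // room not on the list

        room = candidate;
        on = m.Groups[2].Value.Equals("on", StringComparison.OrdinalIgnoreCase);
        return true;
    }
}
```

Rejected lines can simply be logged, so a confused reply never reaches a real device.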

I modified the prompt so that it no longer contained the word CODE, which the model associates with Python, and replaced it with the word DO:

Interesting observation - will the AI turn on a random light?

Another interesting observation: when asked to turn on a random light, the AI politely refuses and sometimes explains that this would surprise the user and cause confusion. To get the desired result, we first have to stress that the random light is part of a test. Example below:

Example number two (requesting two random lights):

Interesting observation - will AI turn on the light in a room that is not on the list?

What will the language model do when we ask it to turn on a light in a room that is not on the list? Let's check:

The model doesn't make anything up: it correctly detects that the attic is not on the list and refuses to perform the operation. It even lists the rooms it knows about.
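The model can only list these rooms because they are spelled out in the prompt. If the room list changes, generating the prompt from the same list the application uses keeps the two in sync. A minimal sketch, assuming the wording of the prompt from the transcript above with DO as the action marker; `PromptBuilder` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;

public static class PromptBuilder
{
    // Assembles the system prompt; wording adapted from the transcript, room list injected.
    public static string Build(IEnumerable<string> rooms, string actionMarker = "DO")
    {
        string roomList = string.Join(", ", rooms);
        return "You are a Home Assistant. You can only prefix your messages with SAY if you want to say something, "
             + $"and {actionMarker} if you want to run a command. "
             + "You can control lights by writing text command setLightEnabled(room,state), "
             + $"where room can be: {roomList}, and state can be on or off. "
             + "Do not use any other commands. "
             + "Please keep talking with user and use the command when asked to. "
             + "Please do not ask user to use commands. "
             + "Please do not use commands without any reason. "
             + "Now, start conversation with user.";
    }
}
```

The same room list can then feed the application-side validation, so the model and the application agree on which rooms exist.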

Potential problem

One problem I noticed during testing is the AI's tendency to occasionally include links, which are of course pointless in voice communication.

It's possible that a proper note in the prompt will fix it, but I haven't checked that yet.
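Independently of any prompt change, links could also be removed on the application side before the SAY text is passed to text-to-speech. A minimal sketch, assuming links always start with `http://` or `https://`; `SpeechText` is a hypothetical name, and this is a workaround rather than something tested in the demo.

```csharp
using System.Text.RegularExpressions;

public static class SpeechText
{
    private static readonly Regex Url = new Regex(@"\bhttps?://\S+", RegexOptions.IgnoreCase);
    private static readonly Regex Spaces = new Regex(@"\s{2,}");

    // Removes http(s) links and collapses the whitespace left behind.
    public static string StripLinks(string text)
    {
        string withoutUrls = Url.Replace(text, "");
        return Spaces.Replace(withoutUrls, " ").Trim();
    }
}
```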

Playing with light?

Now for the most interesting experiment. Let's propose a game to the AI: it turns on all the lights and asks the user math questions, and every time the user answers incorrectly, it turns off one light. Will the AI manage?

Let's start the fun:

Quote:

Let's play a game. First turn on all lights with DO. Then ask me math questions. If I answer correctly, do nothing. If I give wrong answer, use DO to turn off random light.

It seriously works:

Unfortunately, after a while a problem appears... the language model slipped up on multiplication:

I'm hoping GPT-4 will be a bit better at this.

Summary

With an appropriate prompt, the ChatGPT language model can sensibly control the lighting based on the conversation with the user. The model keeps the context of the conversation quite well and uses the primitive API available to it, while still offering everything its normal version offers. It can even "understand" the context of the conversation well enough to deduce where we are and which light we want turned on.

I see potential here for an "intelligent" helper for people who are disabled or ill. In an ordinary home, some people would probably like such a gadget, although I don't think it is necessary; it is more of a curiosity.

So far, I've only done preliminary tests, but it would be worth checking:

- how the model copes with a longer conversation (does it lose the thread? maybe the software should reset the conversation)

- how the system copes with more lights

- whether the system can be extended, for example to control light brightness or color

- connecting other systems, so that the AI, for example, controls heating and understands commands like "it's too hot in the kitchen"

- whether GPT-4 would do better here
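For the first point, one common approach is to keep the system prompt and drop the oldest messages once the history grows. A minimal sketch, assuming a hypothetical `Message` type (the library used in the demo has its own message classes); the trade-off is that context such as "we are in the kitchen" is forgotten once it falls out of the window.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical message type for illustration only.
public sealed class Message
{
    public string Role { get; }
    public string Text { get; }
    public Message(string role, string text) { Role = role; Text = text; }
}

public static class History
{
    // Keeps all system messages (the prompt with the room list) plus the newest keepLast others.
    public static List<Message> Trim(IReadOnlyList<Message> messages, int keepLast)
    {
        if (keepLast < 0) throw new ArgumentOutOfRangeException(nameof(keepLast));
        var result = messages.Where(m => m.Role == "system").ToList();
        var rest = messages.Where(m => m.Role != "system").ToList();
        result.AddRange(rest.Skip(Math.Max(0, rest.Count - keepLast)));
        return result;
    }
}
```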

In this topic I also showed several situations where the ChatGPT model failed. In the first, using the word "CODE" (later replaced by "DO") to denote light control made the model think it was writing Python: when asked to turn on a random light, instead of simply picking a room, it wrote code to draw a random room... The second was the game in which I asked the model to turn off a light whenever I answered wrong; there the model itself got a multiplication wrong.

I am attaching the demo code. It was written quickly and comes without the exe and without the key; you need to add your own key in Form1.cs before running it.
