Are you trying to run the latest Gemma 3 multimodal AI models, but keep getting error 500 in Ollama WebUI?

Here's a solution, but first a few words about Gemma 3. Gemma 3 is a collection of lightweight, open models built from the same research and technology that powers the Gemini 2.0 models. Gemma 3 models are designed to run fast, directly on devices, and come in a range of sizes (1B, 4B, 12B and 27B), allowing you to choose the best model for your specific hardware and performance needs.

These models are very easy to download from the Ollama Library and run, but the Ollama Docker package comes with the obsolete Ollama version 0.6.1, so you can't run them directly, at least until the Docker package is updated. I'll show a simple workaround here.

Error 500 issue

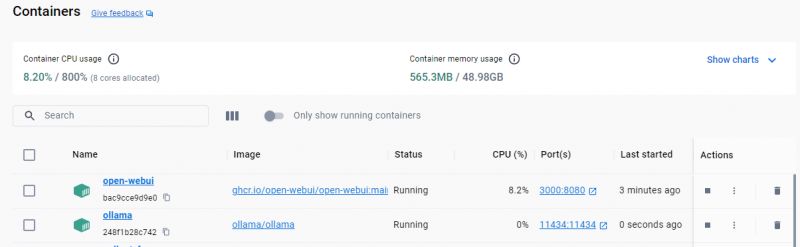

So, I'm assuming you already have a Docker setup like this:

You have the Ollama core and the Ollama web interface both running in Docker.

If not, you can get Ollama WebUI here.

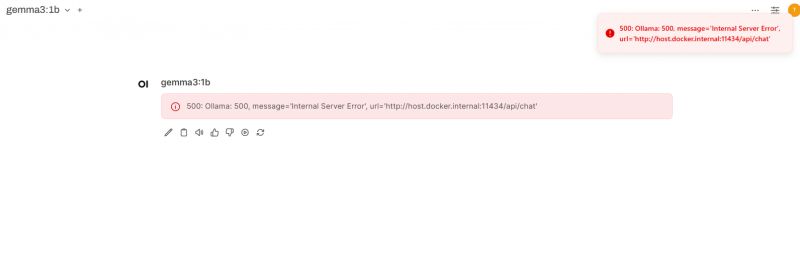

You have probably also already downloaded Gemma 3 in your Ollama WebUI, but when you try to run it, you get:

500: Ollama: 500, message='Internal Server Error', url='http://host.docker.internal:11434/api/chat'

Just as in the screenshot.

The cause of the problem

This is caused by Docker using an obsolete Ollama core, namely version 0.6.1, at least in my case. You can check it by running:

C:\Users\user>docker run --rm ghcr.io/open-webui/open-webui:ollama ollama --version

Warning: could not connect to a running Ollama instance

Warning: client version is 0.6.1
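If you'd rather do this check from a script, here is a minimal Python sketch that pulls the version number out of output like the above and compares it against 0.6.2. The 0.6.2 threshold is simply the release that worked in this post, and the function names are made up for illustration.

```python
import re

# Assumption: 0.6.2 is the first release that ran Gemma 3 in this setup.
MIN_VERSION = (0, 6, 2)


def parse_ollama_version(output):
    """Return (major, minor, patch) found in `ollama --version` output, or None."""
    match = re.search(r"version is (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def is_new_enough(output, minimum=MIN_VERSION):
    """True if the reported version is at least `minimum`."""
    version = parse_ollama_version(output)
    return version is not None and version >= minimum


if __name__ == "__main__":
    sample = "Warning: client version is 0.6.1"
    print(parse_ollama_version(sample), is_new_enough(sample))
```

You could feed it the text captured from the docker run command, for example via subprocess.run(..., capture_output=True, text=True).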

I've tried to update it, but found no way to do so. Luckily, there is a workaround...

Easiest solution

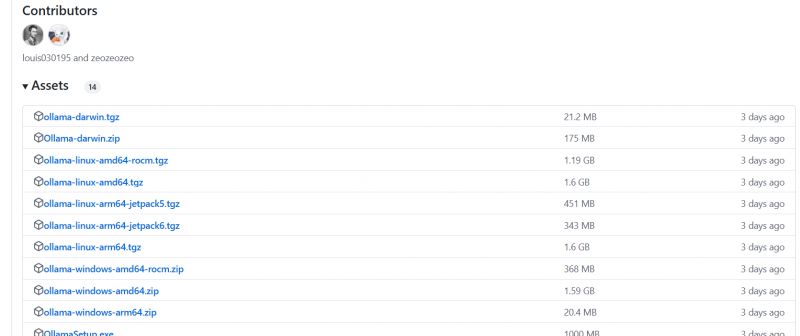

Download Ollama directly from the Releases tab:

https://github.com/ollama/ollama/releases/tag/v0.6.2

Choose the package for your OS; in my case it was ollama-windows-amd64.zip.

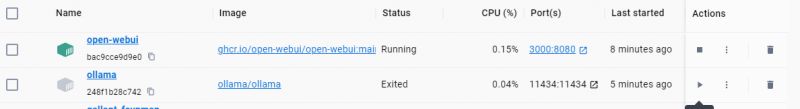

Shut down Ollama in Docker first.

Then extract the archive and start the server, as in:

ollama.exe serve

Now, as long as the port settings match, your Ollama WebUI from Docker should be able to reach the new Ollama core. You can also check its version:

W:\TOOLS\ollama-windows-amd64>ollama.exe --version

Warning: could not connect to a running Ollama instance

Warning: client version is 0.6.2

So, now you're running a newer Ollama.
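To confirm which version the running server reports (and not just the client binary), you can also ask it over HTTP. Below is a minimal Python sketch that queries Ollama's /api/version endpoint. It assumes the server listens on localhost at port 11434 (the port seen in the error message above), and the helper names are hypothetical.

```python
import json
import urllib.error
import urllib.request


def version_url(host="localhost", port=11434):
    """Build the URL of the version endpoint (host and port are assumptions)."""
    return f"http://{host}:{port}/api/version"


def get_server_version(host="localhost", port=11434, timeout=3.0):
    """Return the version string reported by the server, or None if unreachable."""
    try:
        with urllib.request.urlopen(version_url(host, port), timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Server not running, wrong port, or an unexpected response body.
        return None


if __name__ == "__main__":
    print(get_server_version())
```

If this prints None, the WebUI container most likely cannot reach the server either, so check that "ollama.exe serve" is still running and that the port matches.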

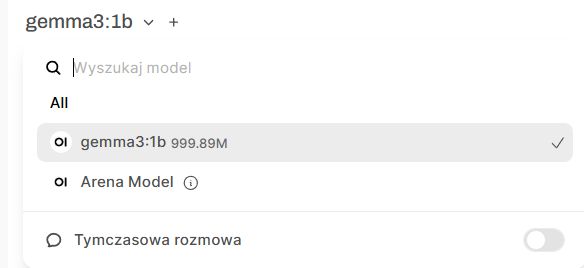

This means you'll have to re-download your AI models. I've downloaded only the smallest Gemma so far.
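I downloaded the models through the WebUI, but the same thing can be scripted. Here is a rough Python sketch that asks the local server to pull a model through its /api/pull endpoint. The tag gemma3:1b, the base URL and the function names are assumptions for illustration, so check the Ollama Library for the exact tag you want.

```python
import json
import urllib.request


def build_pull_request(model, base_url="http://localhost:11434"):
    """Prepare a POST request asking the server to download `model`."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model, base_url="http://localhost:11434"):
    """Send the pull request and return the final status reported by the server."""
    # With "stream": False the server answers once, after the download finishes,
    # so this call can block for a long time on the bigger models.
    with urllib.request.urlopen(build_pull_request(model, base_url)) as resp:
        return json.load(resp).get("status")


if __name__ == "__main__":
    print(pull_model("gemma3:1b"))  # assumed tag
```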

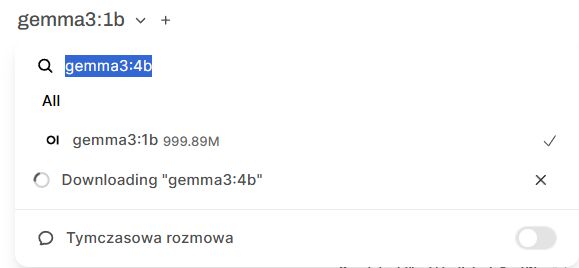

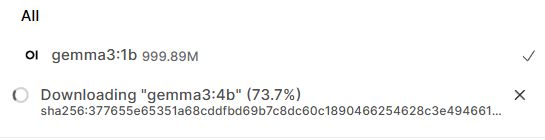

You can also download a bigger model:

Let's check if it works

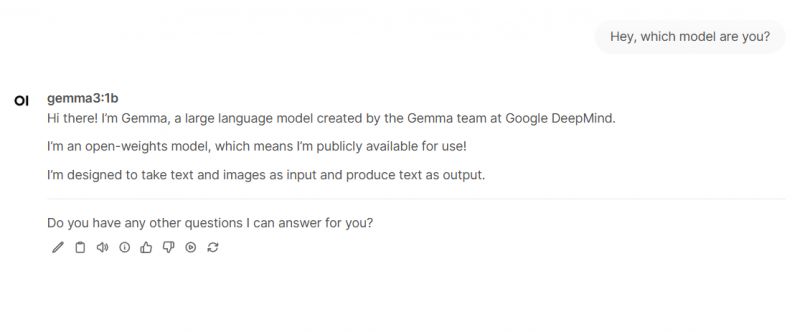

Now, a word of warning: the smallest 1b model will not work with images, so I suggest starting with 4b.
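If you want to try an image prompt without the WebUI, the sketch below shows roughly how it could be done in Python against the same /api/chat endpoint that appears in the error message. Images go into the message as base64 strings. The model tag gemma3:4b, the base URL, the file name and the function names are all assumptions for illustration.

```python
import base64
import json
import urllib.request


def build_image_chat_payload(image_bytes, prompt, model="gemma3:4b"):
    """Build a chat request body with one image attached to the user message."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # assumed tag; the 1b model will not work with images
        "stream": False,  # ask for a single JSON reply instead of a stream
        "messages": [{"role": "user", "content": prompt, "images": [encoded]}],
    }


def ask_about_image(path, prompt, model="gemma3:4b", base_url="http://localhost:11434"):
    """Send the image and the prompt to the local server and return the reply text."""
    with open(path, "rb") as fh:
        payload = build_image_chat_payload(fh.read(), prompt, model)
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    # "clock.jpg" is a hypothetical file name.
    print(ask_about_image("clock.jpg", "What time does this clock show?"))
```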

Some first Gemma 3 tests

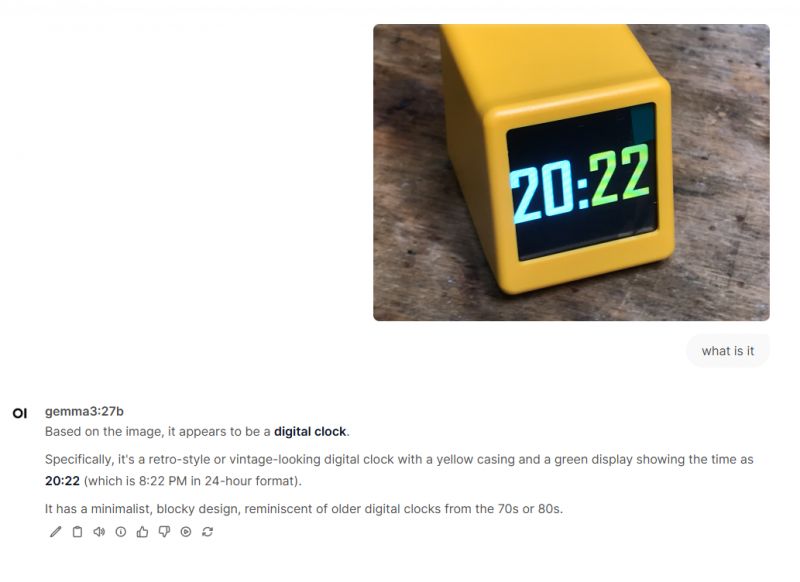

It's time for a little Gemma 3 testing. I've played around with it a bit and decided to showcase the 27b model, as it seems more reliable than the smaller ones. Yet I can still run it on my 7-year-old ROG gaming notebook.

Nice, it can read the time correctly.

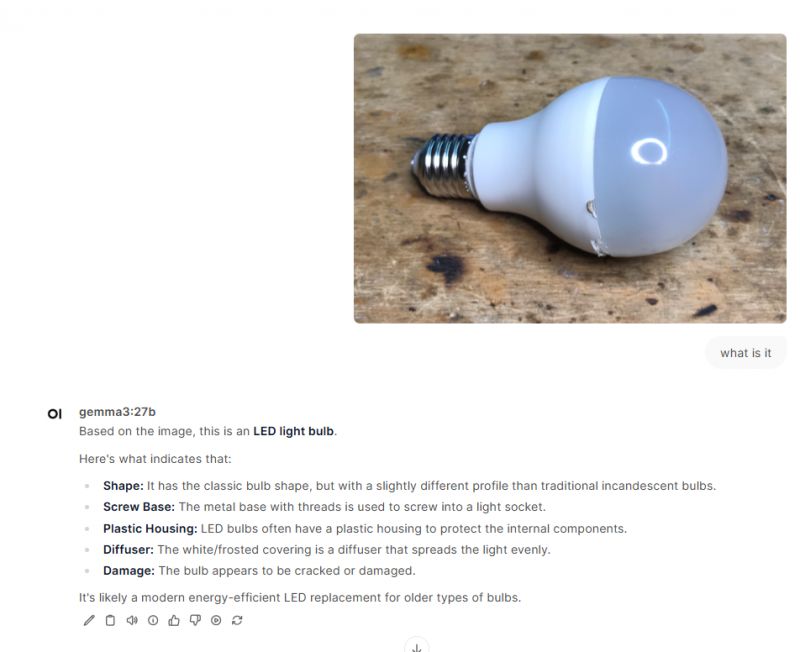

Not bad, it has even noticed the slight damage to the bulb.

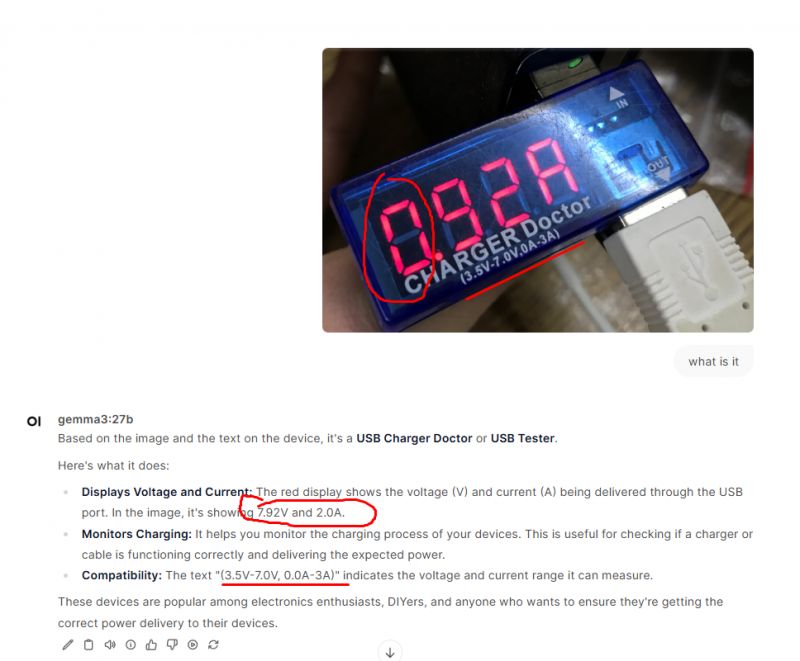

Let's try something harder.

Well, unfortunately it still makes mistakes and can give confusing results, but it's still better than LLaVA, which I tested in the past...

Summary

It turns out that it is very easy to run the new Gemma 3 models locally. The only issue I encountered was the obsolete Ollama version in Docker, but hopefully the Docker package will be updated soon as well, so you won't encounter this problem in the future.

Regarding Gemma 3 itself, it seems very promising, especially the larger versions. They seem better than LLaVA at first glance, but I'm going to perform more tests.

I'll leave them for another topic.

Have you also tried running Gemma 3, and if so, what has your experience been?

If you'd like to see more of Gemma 3, you can also just post an image or a prompt here and I'll test Gemma with it.
