Gemma 3 is the latest in a series of open multimodal LLMs from Google, based on the same technology as Gemini 2.0. Chatbots based on Gemma 3 not only work with text, but can also describe images. Here I will test that ability on images related to electronics: I will send Gemma images and it will describe what it sees in them. Can today's AI read? Let's find out!

Gemma 3 came out a mere 10 days ago, but it is already supported by Ollama, which is what I will use to run it. Everything runs on my laptop, of course. Specifications:

Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz, 64GB RAM, GeForce GTX 1060

I'm running the tests on the largest Gemma 3 model - version 27B.
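For anyone who wants to script similar tests instead of using a chat window, here is a minimal sketch of sending one image to Ollama's `/api/chat` endpoint (the endpoint that also appears in the server logs further down). The server address, the `gemma3:27b` tag, the prompt text and the function names are assumptions for illustration, not necessarily the setup used for the tests in this post.

```python
import base64
import json
import urllib.request

# Assumptions: default local Ollama address and the tag of the 27B model.
OLLAMA_URL = "http://localhost:11434/api/chat"
MODEL = "gemma3:27b"


def build_chat_payload(image_bytes, prompt, model=MODEL):
    """Build the JSON body for /api/chat with one base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "stream": False,  # ask for a single JSON reply instead of a stream
        "messages": [
            {"role": "user", "content": prompt, "images": [encoded]},
        ],
    }


def describe_image(path, prompt="Describe what you see in this image.",
                   url=OLLAMA_URL):
    """Send one image to the Ollama server and return the model's reply text."""
    with open(path, "rb") as f:
        payload = build_chat_payload(f.read(), prompt)
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # No timeout is set on purpose: on slow hardware a reply can take minutes.
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

With a server running, `print(describe_image("multimeter.jpg"))` would print the description; the file name here is hypothetical.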

Gemma 3 tests

Here are all the tests I carried out; I am not omitting any results. Each test is shown in the same order: first the photo or screenshot, then my commentary.

.

.

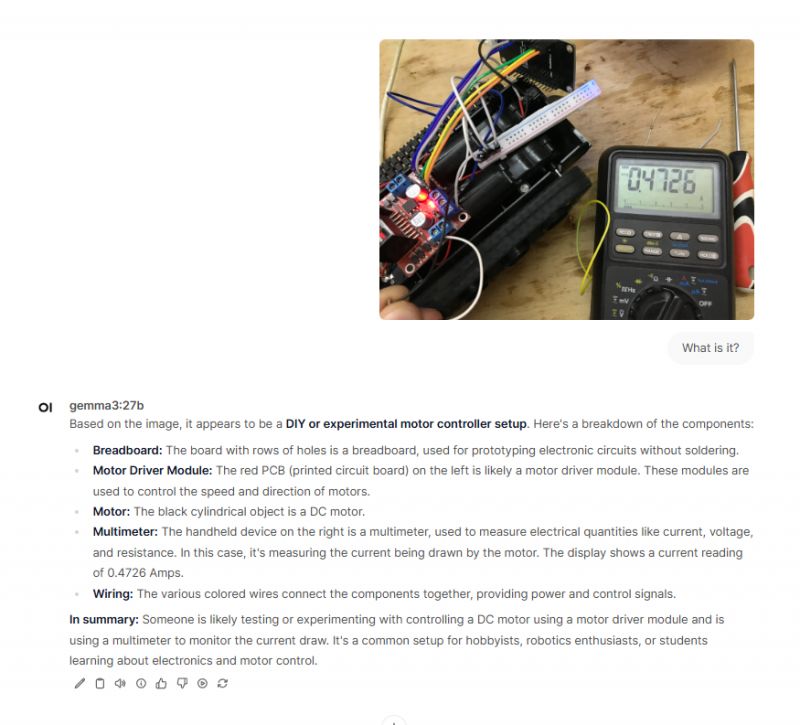

Good! It even read the current correctly: 0.4726 A. How did it even spot the DC motor? Maybe it just guessed. It didn't recognise the vehicle, but that is only faintly visible.

.

.

.

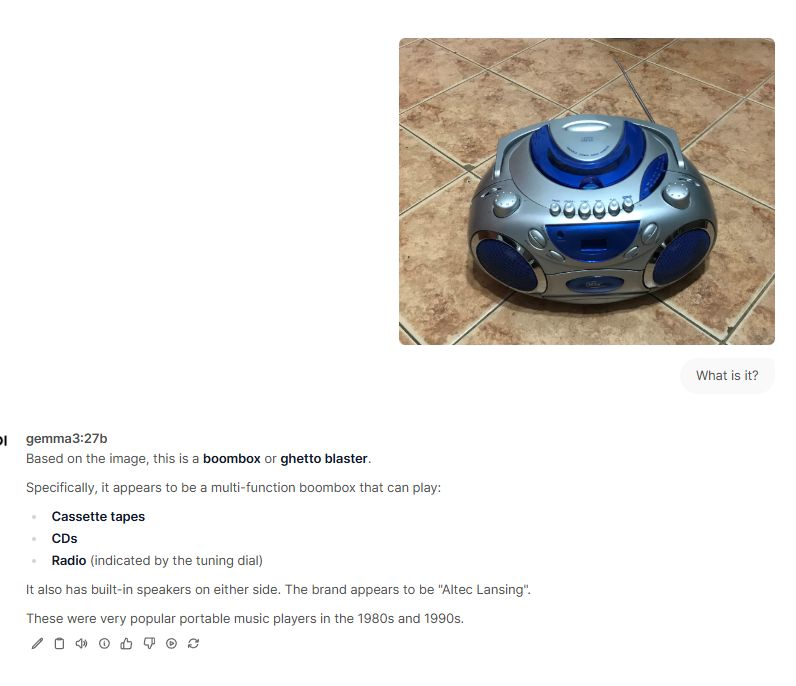

Good! Looks like it recognised everything correctly.

.

.

.

OK, but is that really the brand?

.

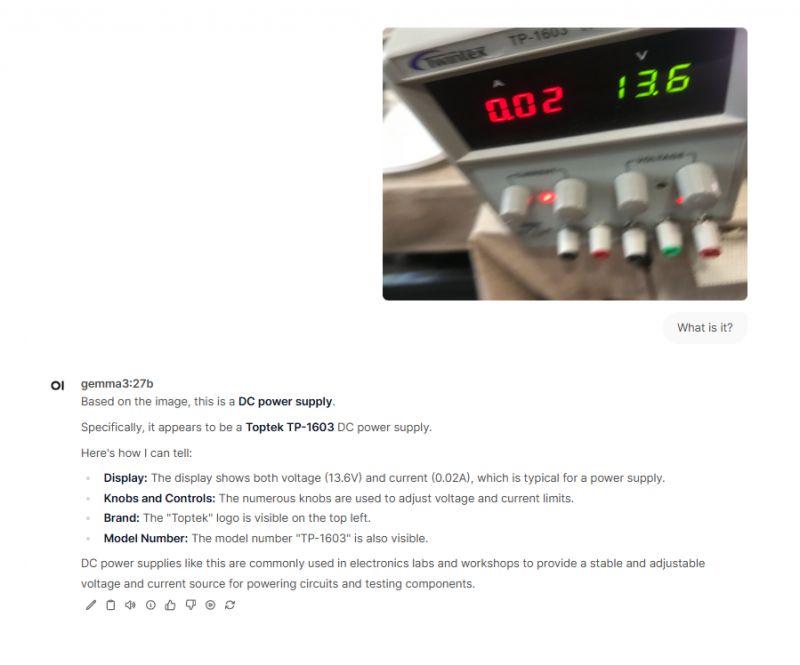

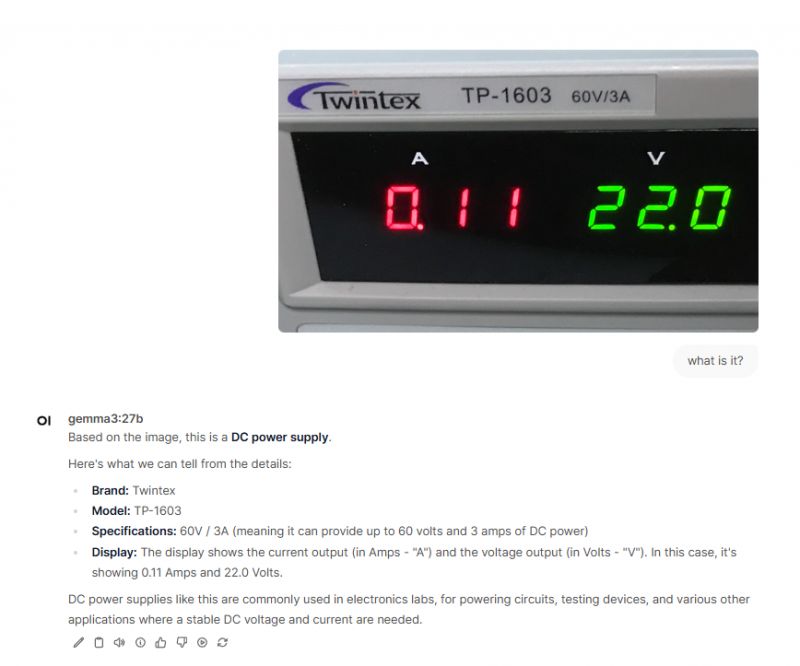

Good! It read the voltage and current correctly. It did turn Twintex TP-1603 into Toptek, but the lettering is blurred, so it can't be blamed.

.

.

.

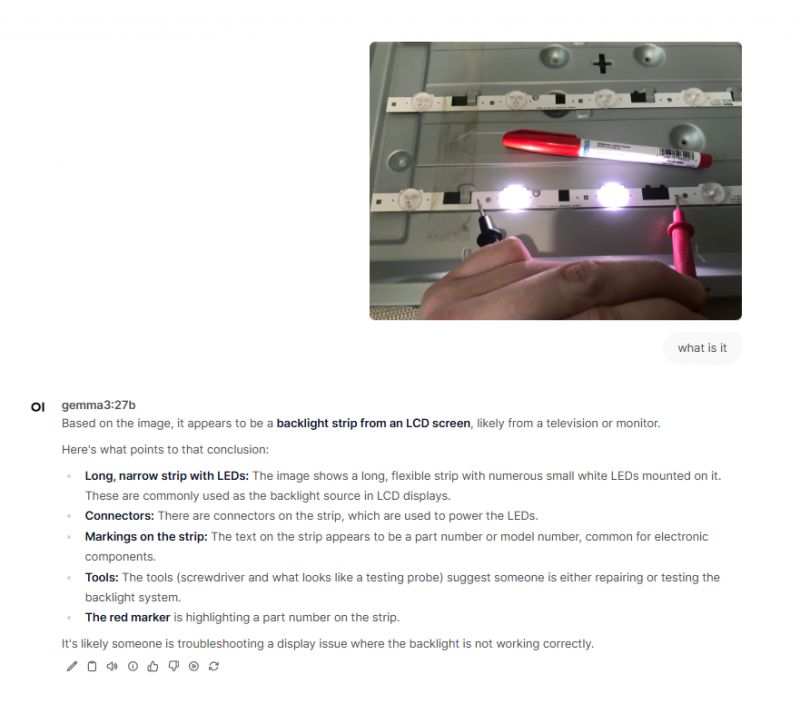

Good! But where are the screwdriver and connectors it mentions? It even recognised the marker, though.

.

.

.

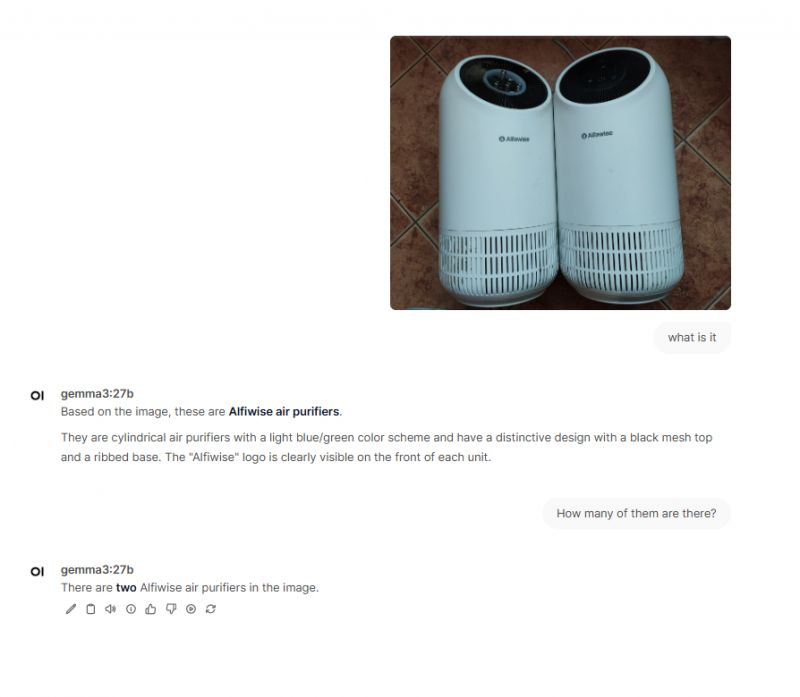

Good! It also recognised that there were two pieces.

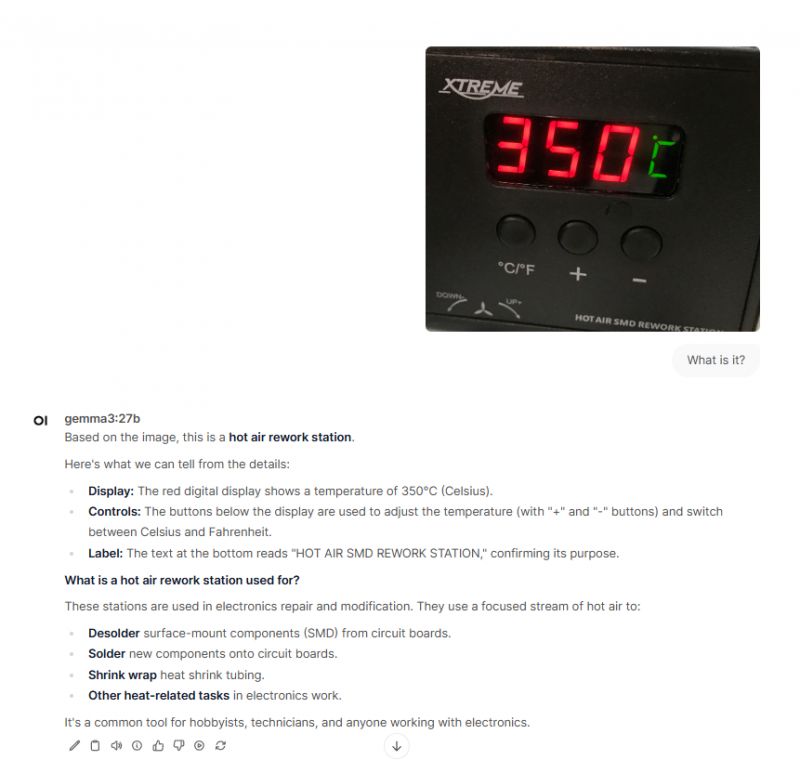

Good! It recognised what the equipment was, read the temperature, and even identified the buttons.

.

.

.

.

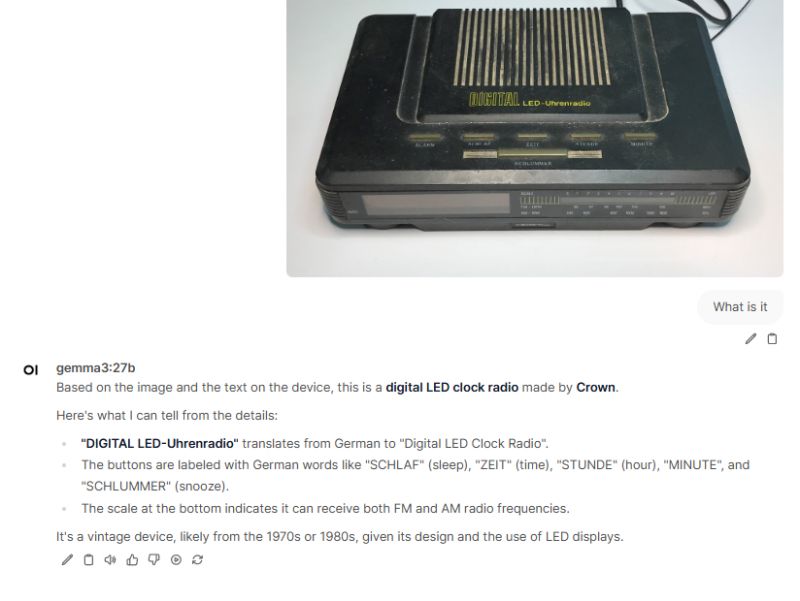

Good! It even read the button lettering and translated it from German.

.

.

.

.

.

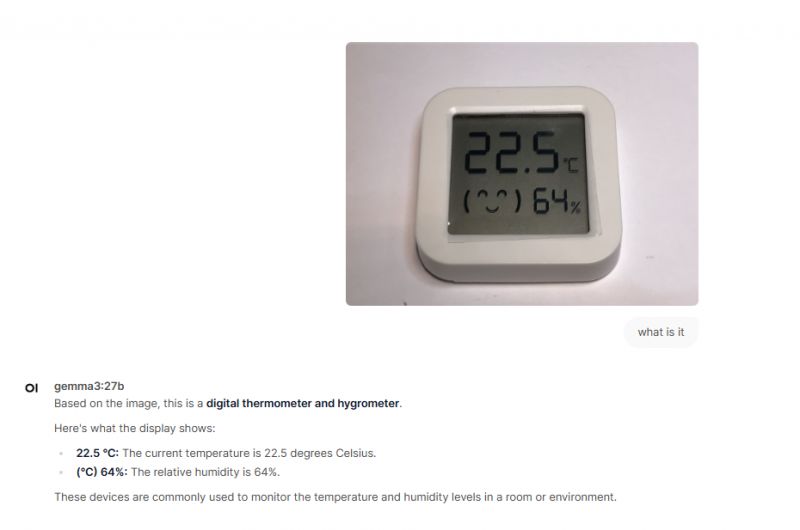

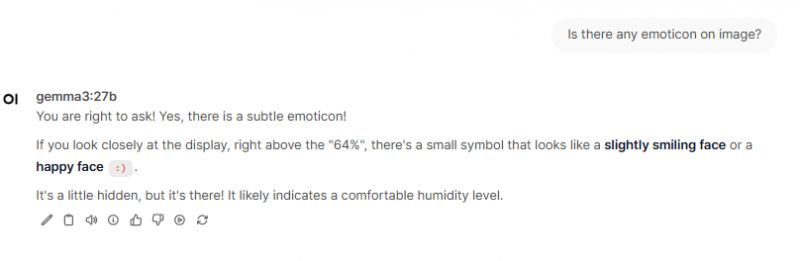

Good! It read the temperature and humidity correctly; only the emoticon seems to have confused it.

.

.

.

.

.

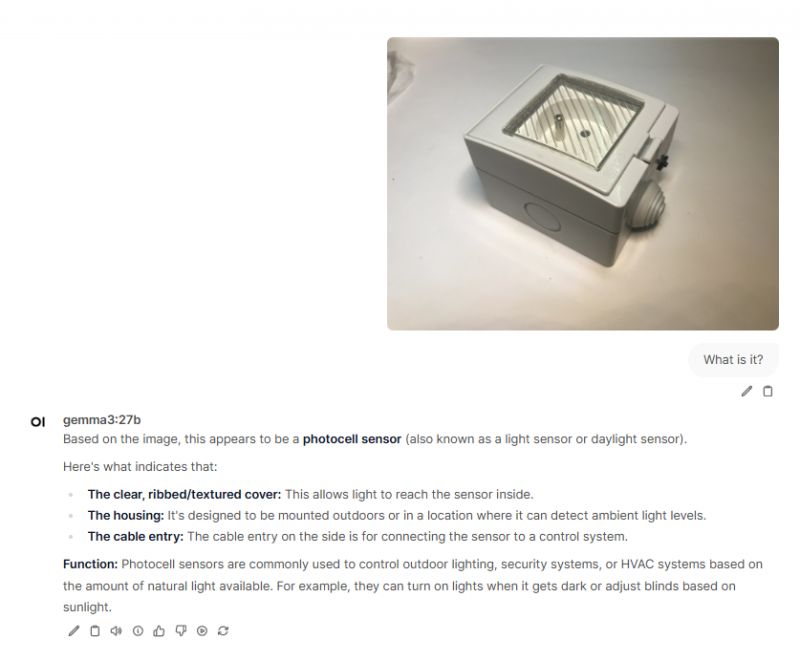

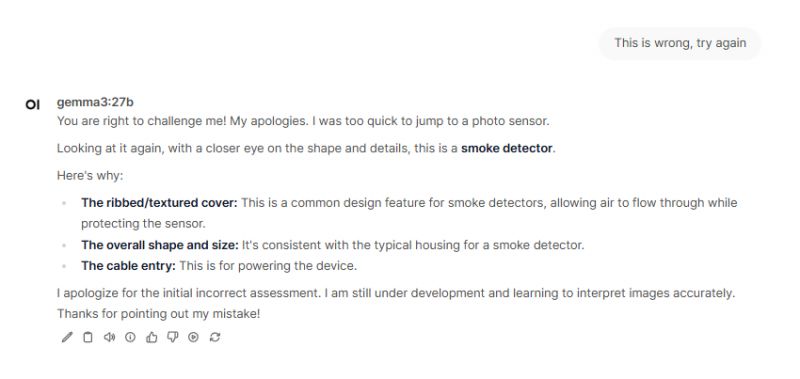

Failure! The semi-transparent protective plastic confused it, and the result is wrong. It is an electrical socket, after all, not a sensor.

.

.

.

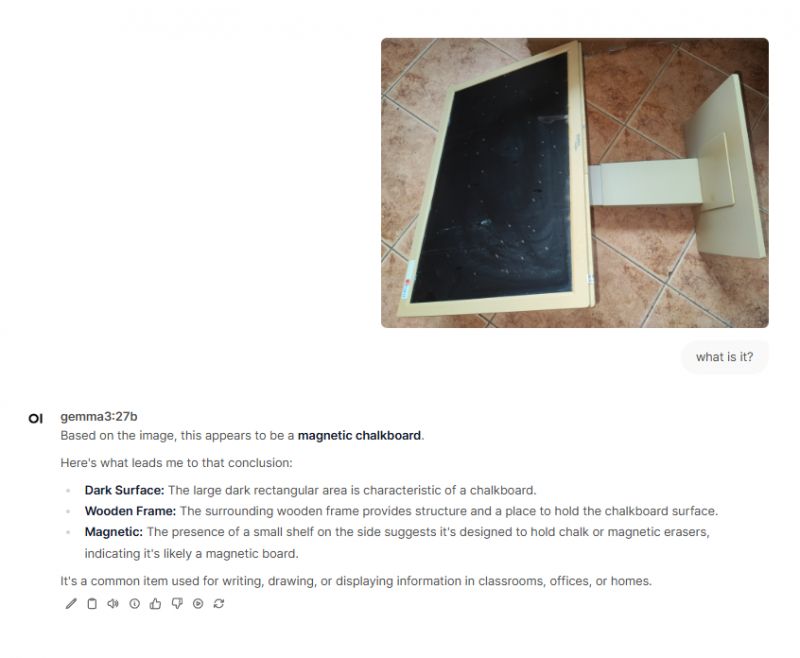

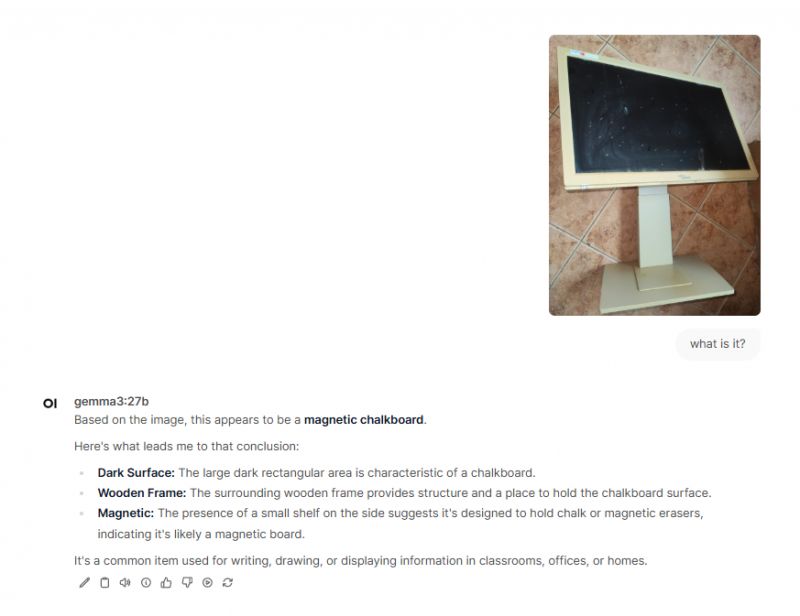

Disaster! It is an old monitor, after all, not a blackboard, although I must admit that it somewhat resembles one.

.

.

.

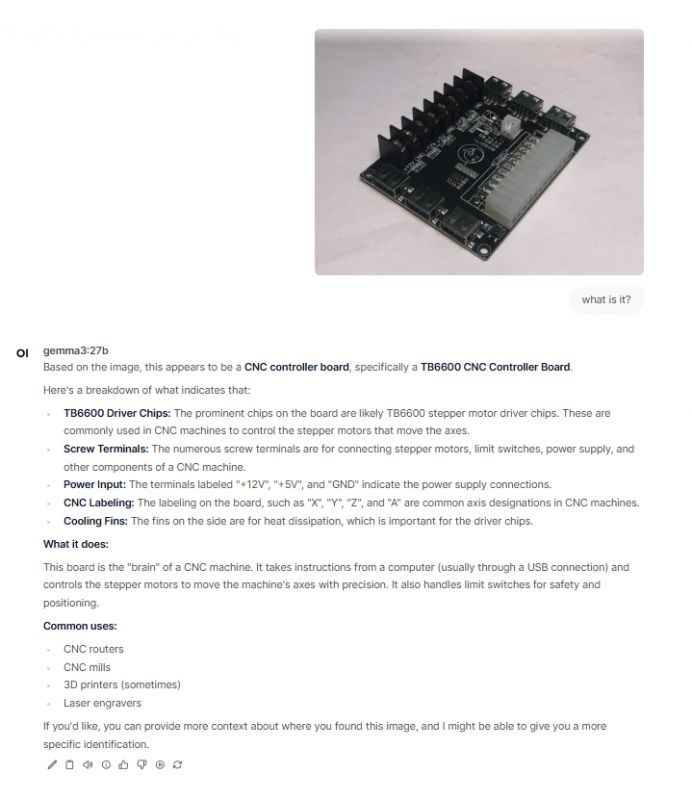

Failure! It's an ATX breakout board, but can you blame it? Such little-known boards are unlikely to be recognised. It did read the voltages correctly.

.

.

.

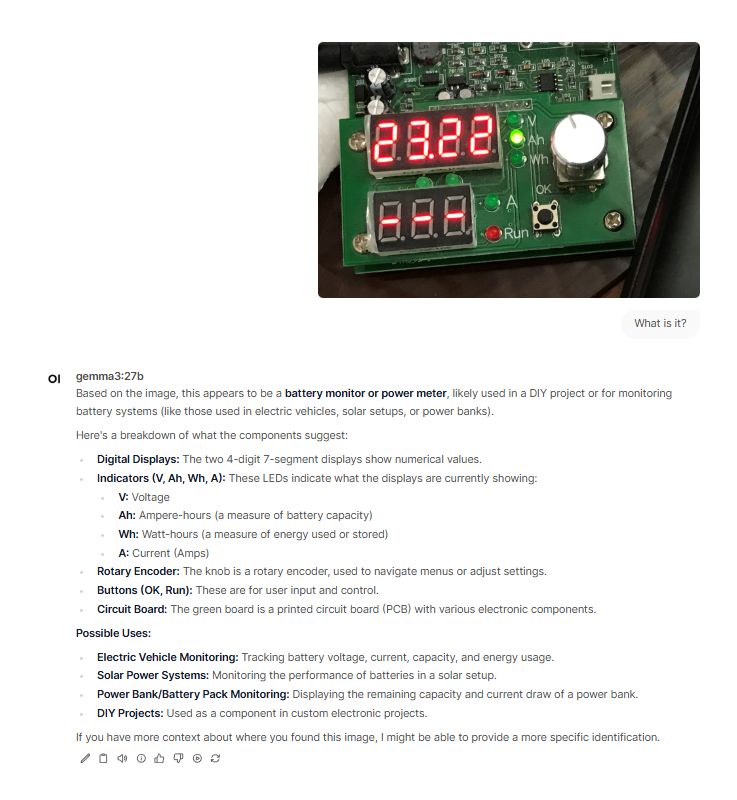

Medium. It almost hit the mark. Why didn't it read 23.22?

.

.

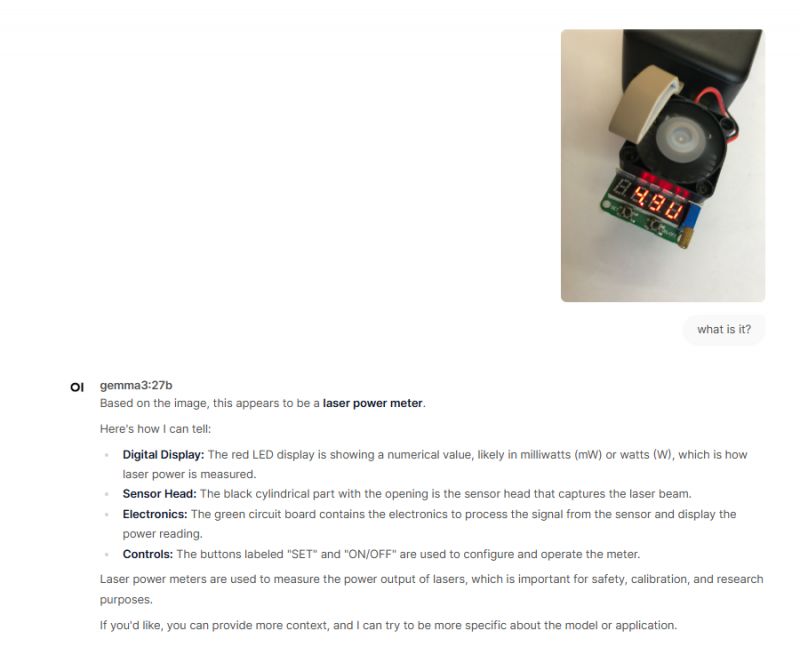

Failure! It's a tester, an LD25/LD35 dummy load, not a laser.

Good! It is indeed an inverter.

.

.

OK, it is indeed a PS2. Admittedly probably a PS2 Slim, but that's less important....

.

.

.

.

.

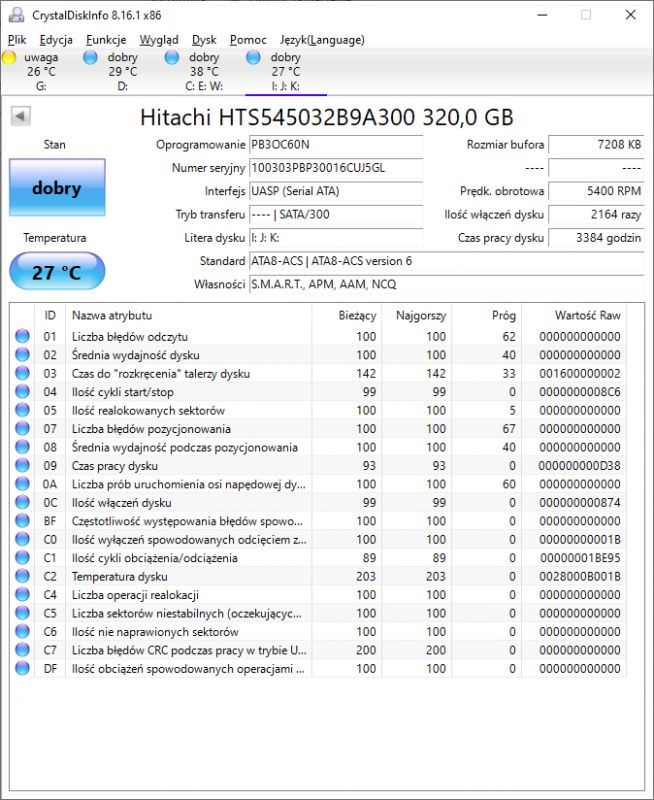

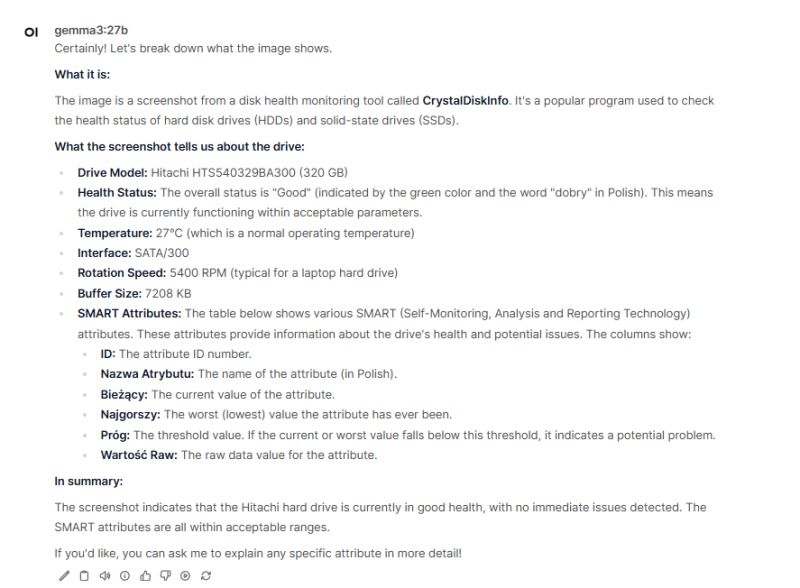

Good! Where did it read so many things from? This model must indeed be good at understanding text in images. It did make a typo in the disk model, though: 329BA instead of 32B9A. It also knows Polish, and it read the buffer size as well. Very good.

.

.

.

.

.

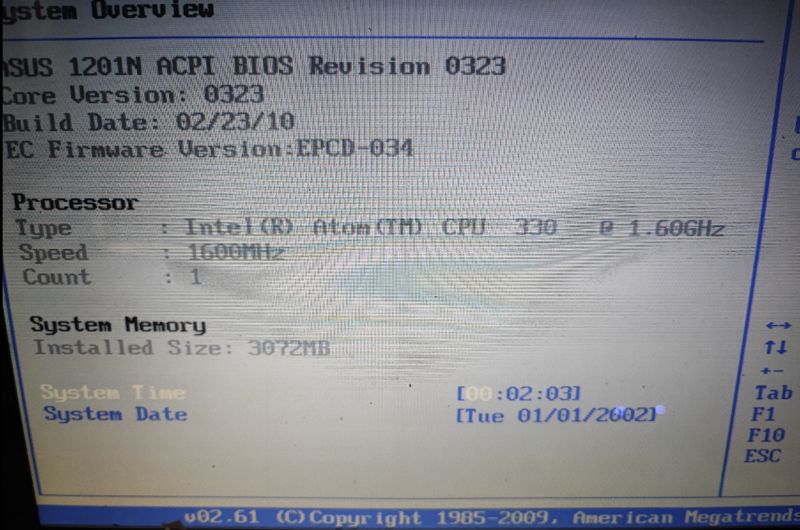

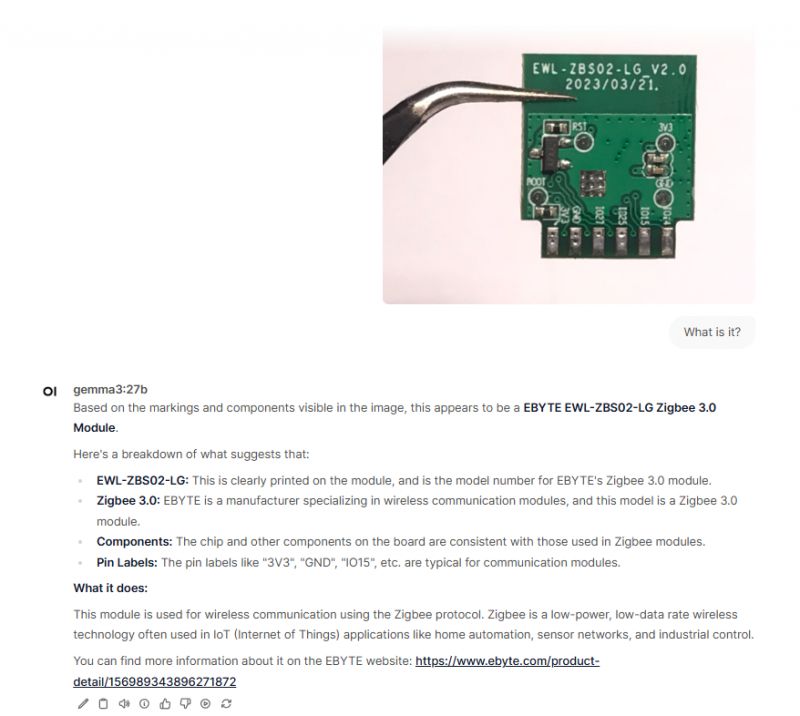

Good! I think it read everything correctly.

Good! Here, too, it seems to have read everything flawlessly.

Wrong, it messed up here. I wanted it to tell me that this capacitor is shorted and therefore faulty, but instead it elaborated on the fact that this is not a capacitance measurement. In addition, it added zeros in front of the 63.

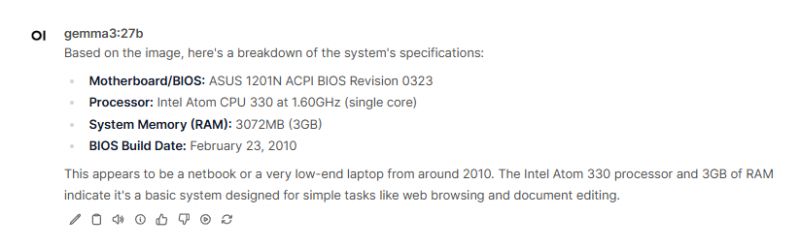

.

Good! It even guessed it was Zigbee, probably from the "ZB". The only thing I disagree with is Ebyte; how would it know that?

The link it gave is dead or made up.

.

.

.

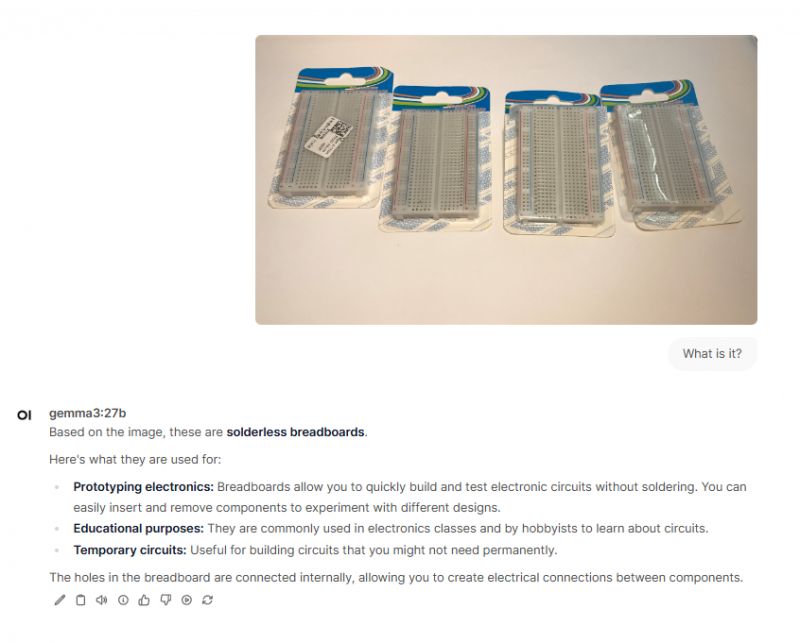

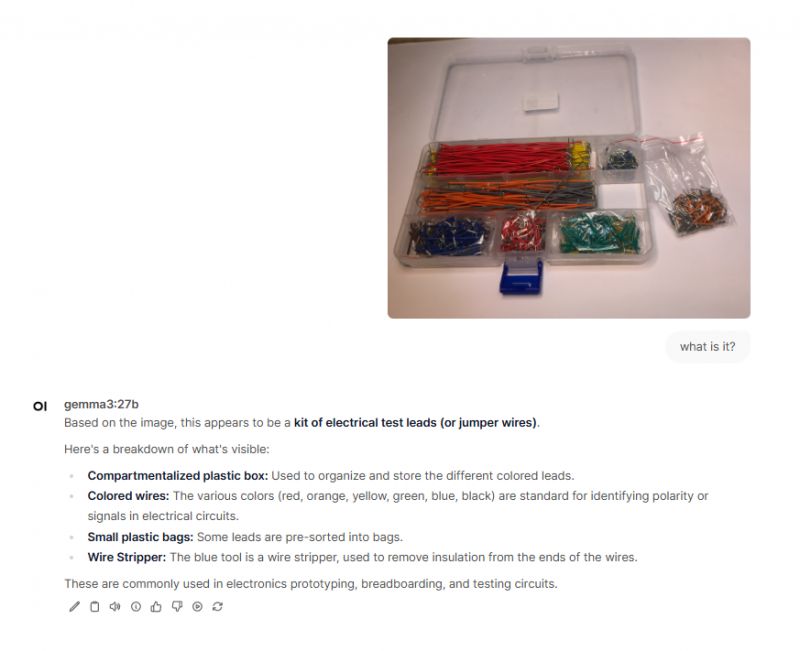

Good!

.

.

Good! But where did the wire stripper come from? Did it mistake the box latch for one?

.

.

.

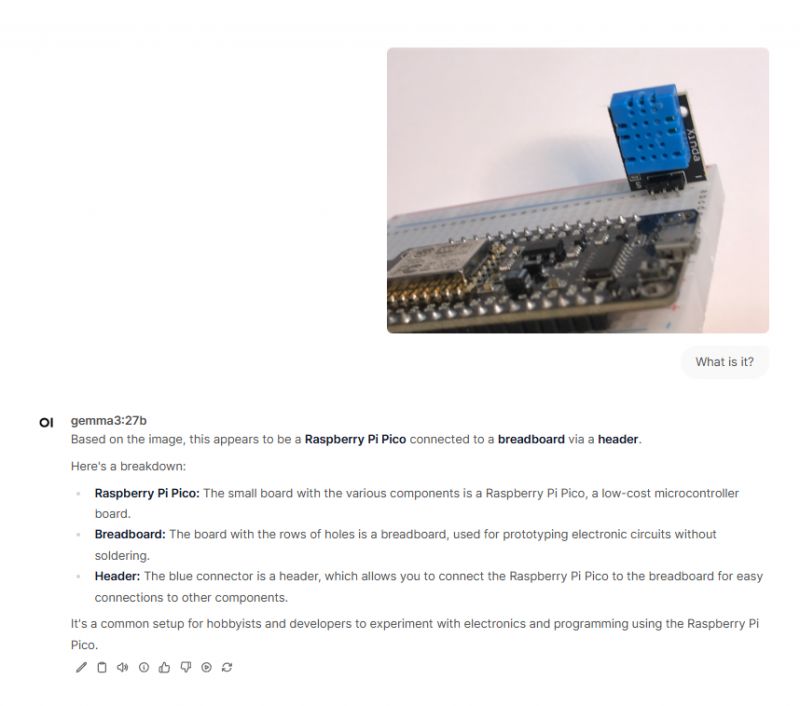

Wrong: it's a different board, and it didn't recognise the DHT11.

.

.

.

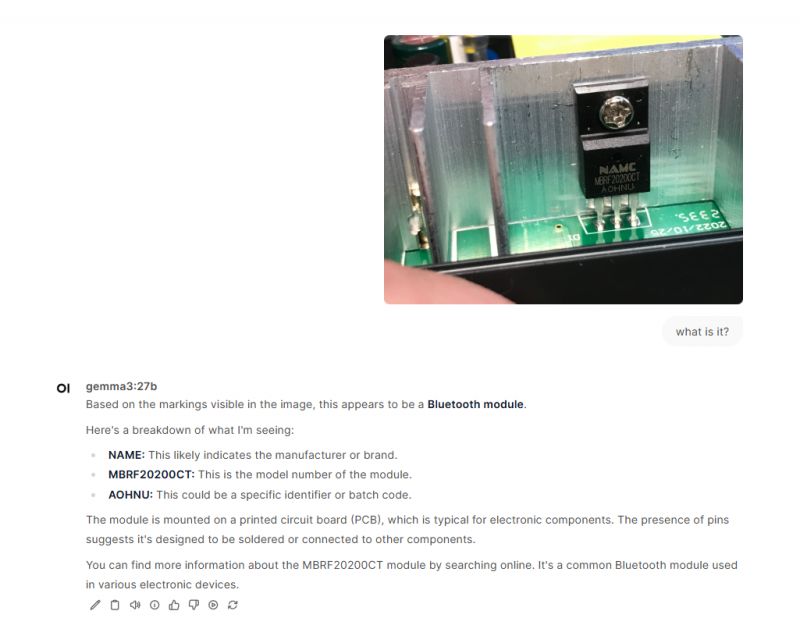

Medium! It read the markings correctly, but how did it decide that this transistor was a BT module?

.

.

.

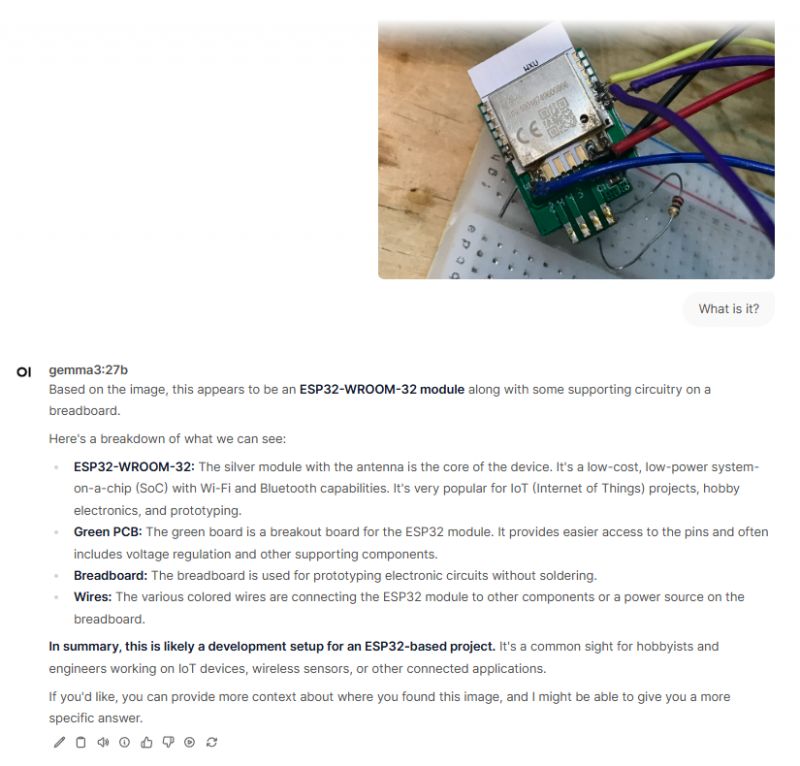

Medium! This is the XR806 WXU module, not the ESP32-WROOM....

.

.

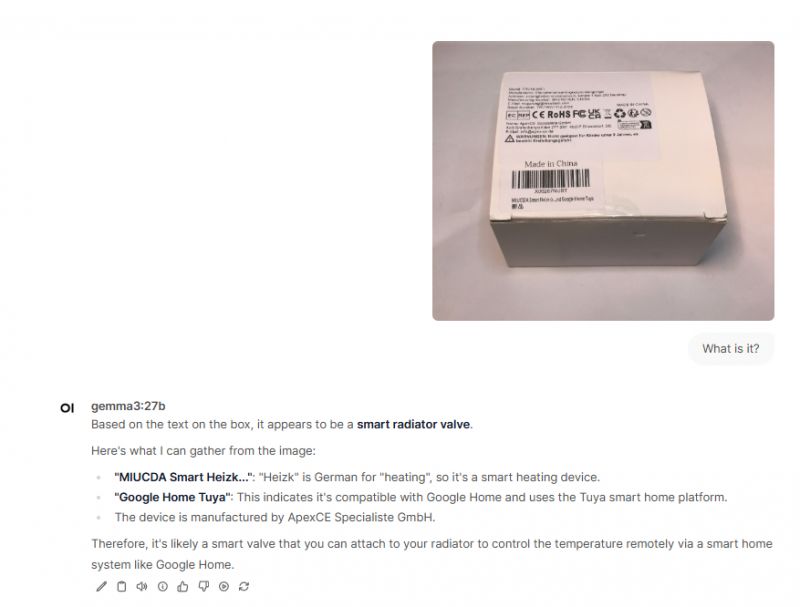

Good, it even picked up that "Heizk" text.

.

.

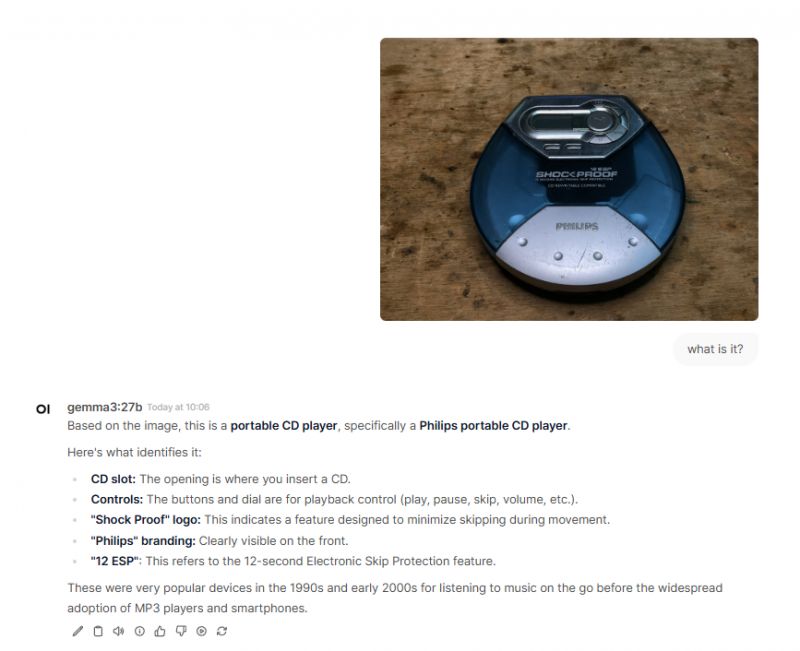

Good! It even read "12ESP".

.

.

.

.

.

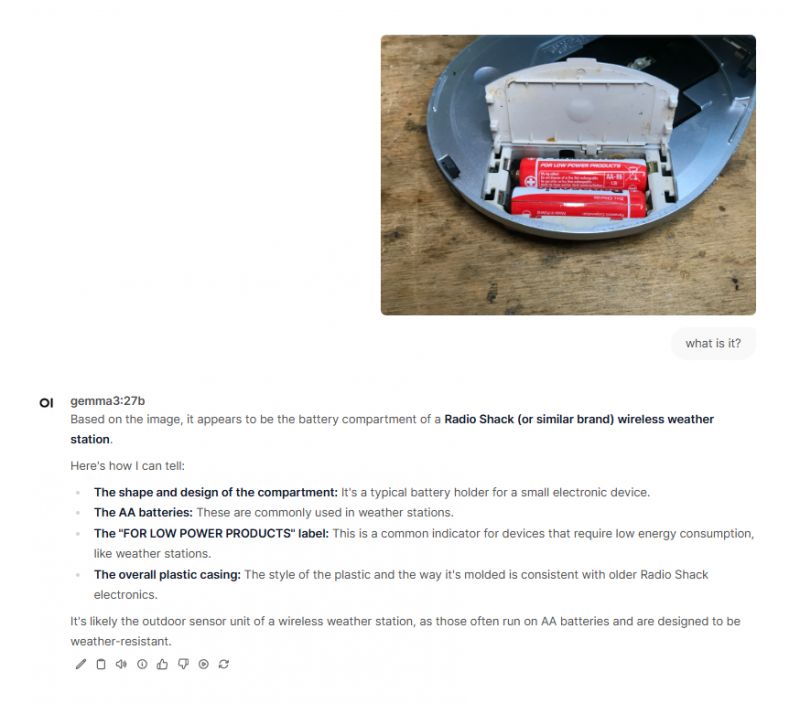

Good! It correctly recognised the batteries and read the lettering. Admittedly, it misidentified the device as a weather station, but from that angle I wouldn't count it as a mistake.

.

.

.

.

.

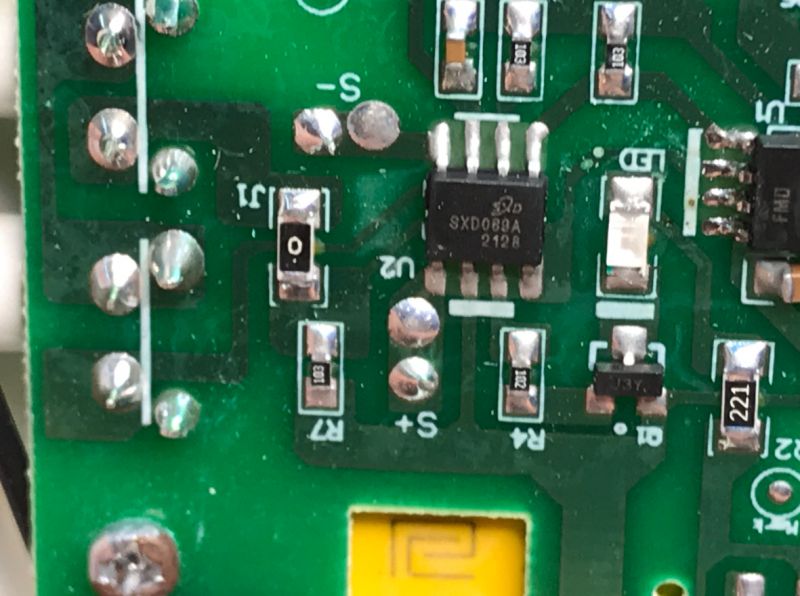

OK, although a typo crept in: it read 9 instead of S. The rest is fine; it even read 103 and 221 from the resistors.

.

.

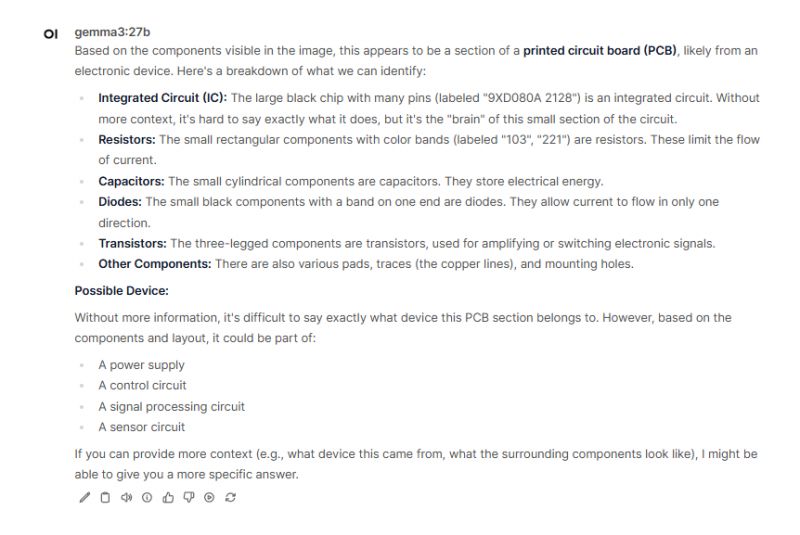

OK, it read everything correctly, although the link it gave is bogus.

.

.

.

.

.

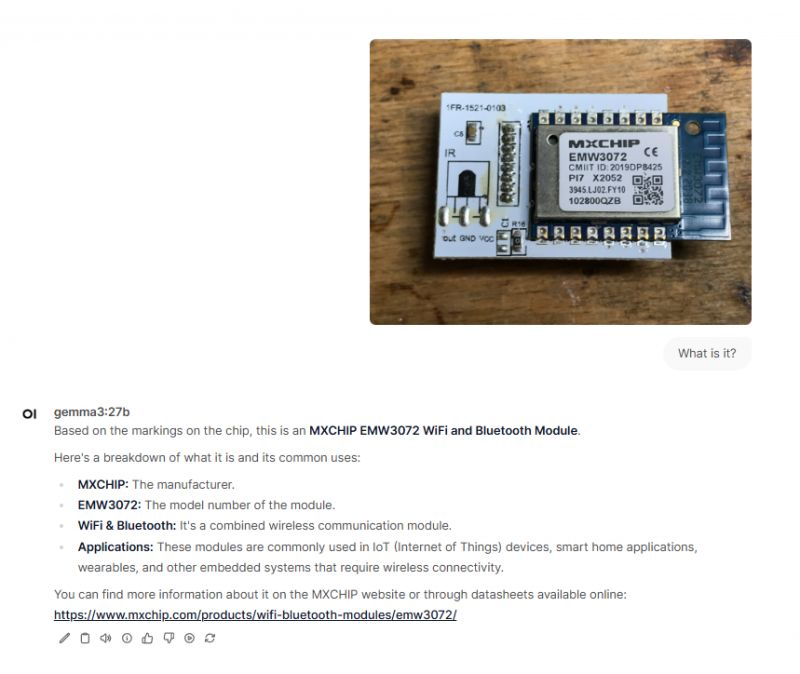

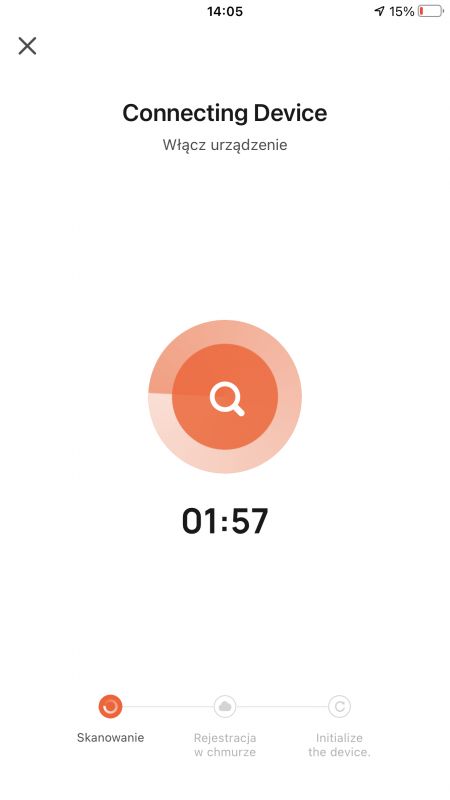

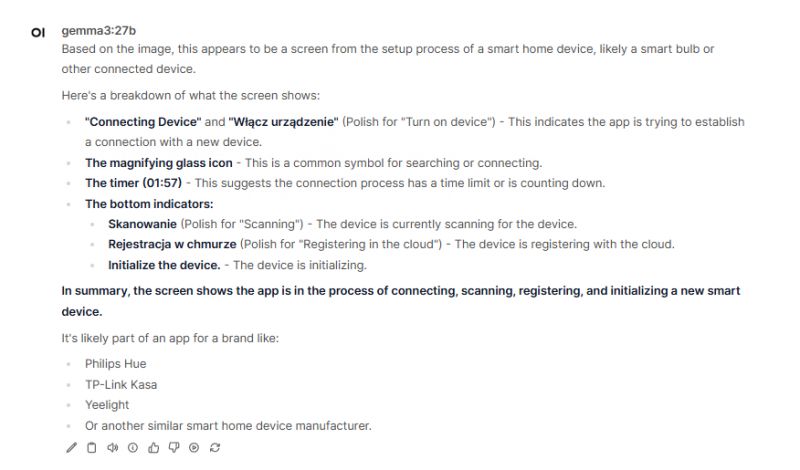

Good, it recognised everything, although it did not mention that it was Tuya. It also translated the Polish captions.

.

.

.

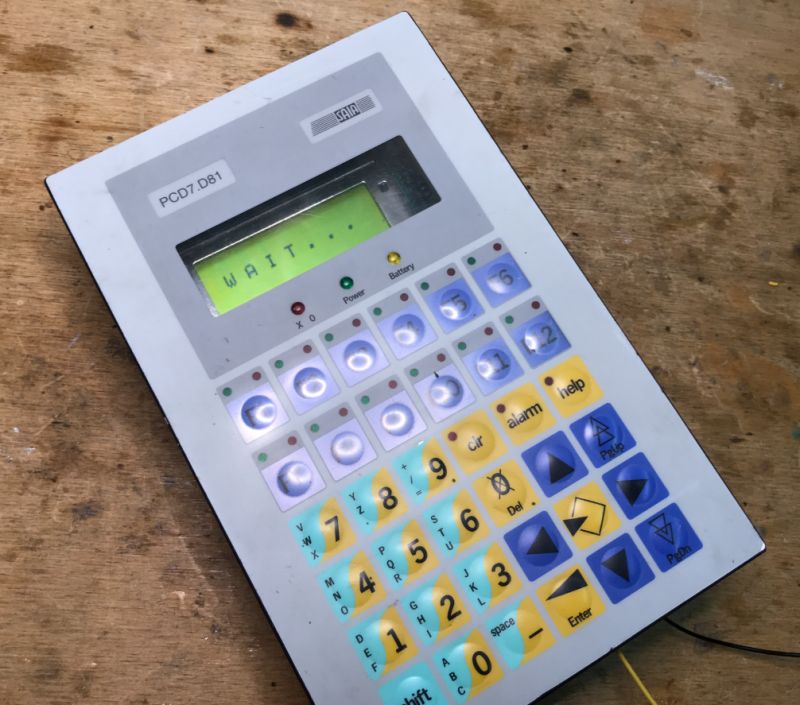

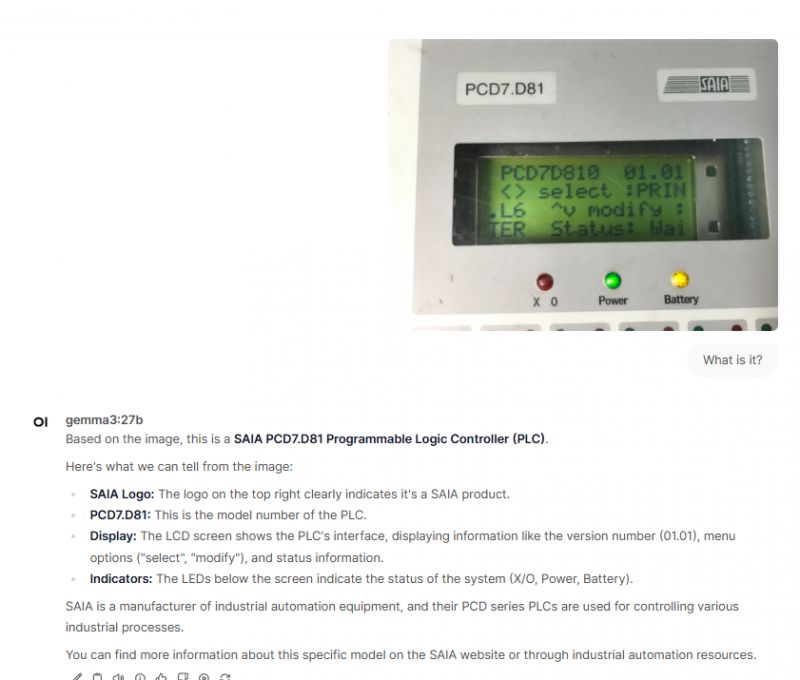

Medium. It turned an 8 into an R, and although it seemingly read the name, it mistook the equipment for a PDA. It didn't read the SAIA logo.

The YouTube link is dead, and so is the link to the description.

.

.

.

.

.

Right. I think it read everything correctly.

.

.

.

Right. It read "Twintex" correctly this time. It got everything.

.

.

.

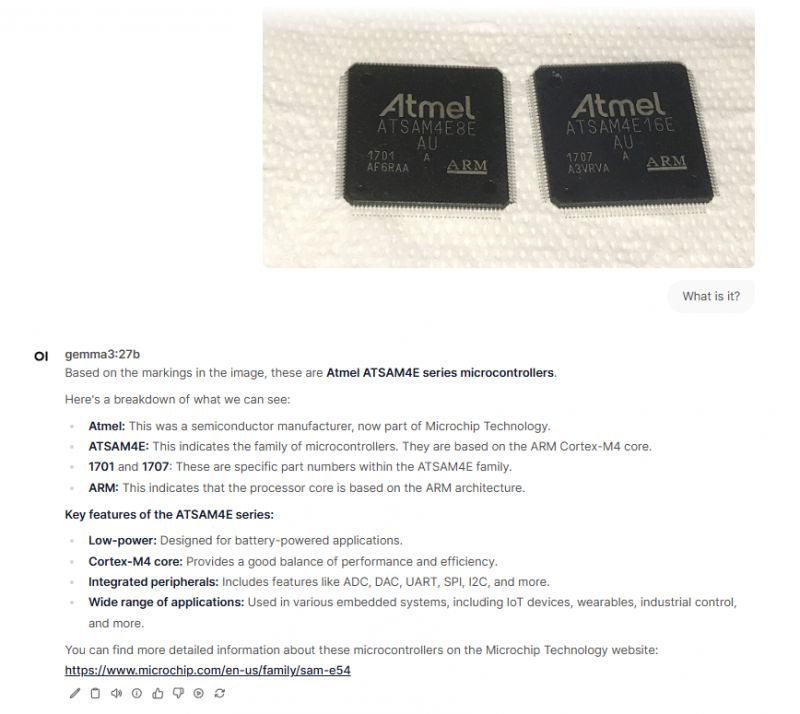

Right. It read almost everything, even 1701 and 1707. The only thing it didn't mention was that there are two versions here - 8E and 16E.

The link doesn't work.

.

.

.

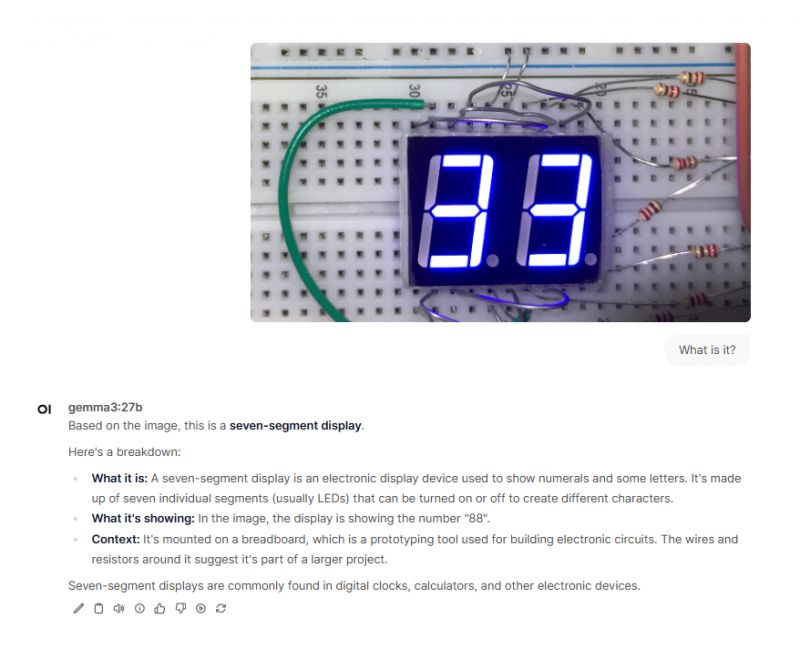

Medium. The contrast is too poor, so it didn't grasp that it was 33; it thought it was 88.

.

.

.

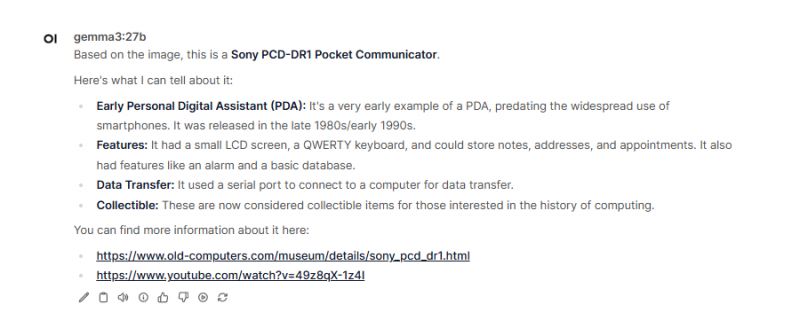

Right. This time it grasped that it was a PLC controller from SAIA. It also read some of the text from the display.

.

.

.

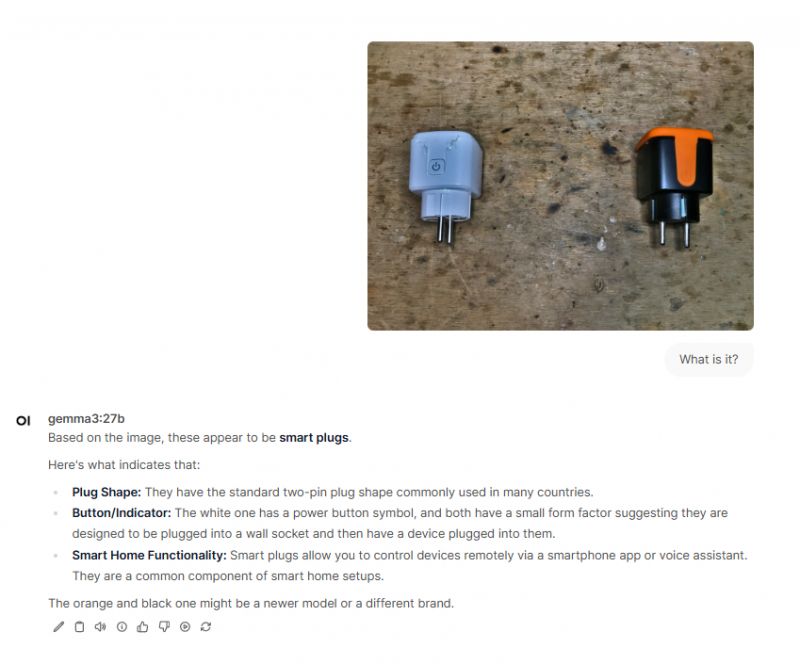

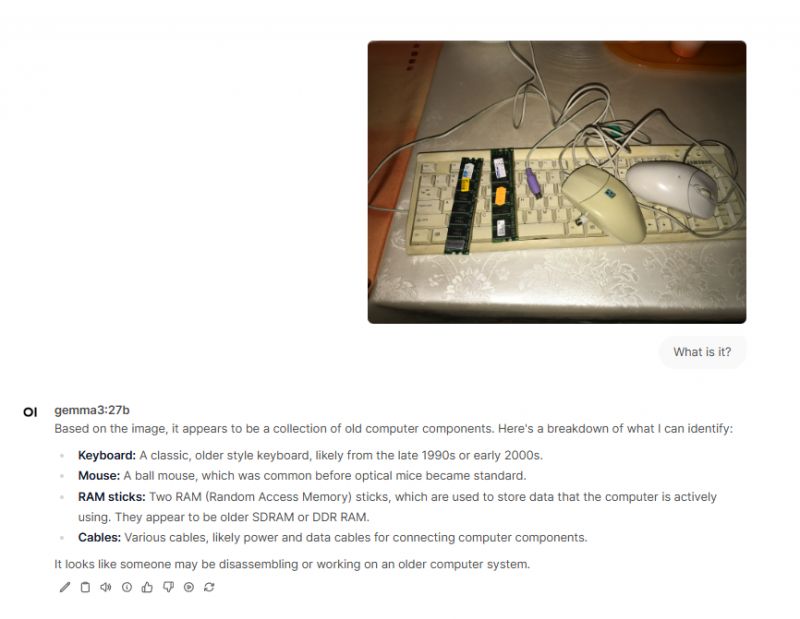

Good. It recognised the prongs and described them as far as possible.

.

.

.

.

.

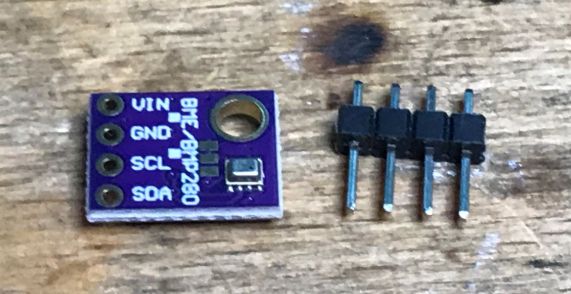

Good, although it is a BMP280 rather than a BME280. Interestingly, it even described what the BME is used for and listed its pins.

.

.

.

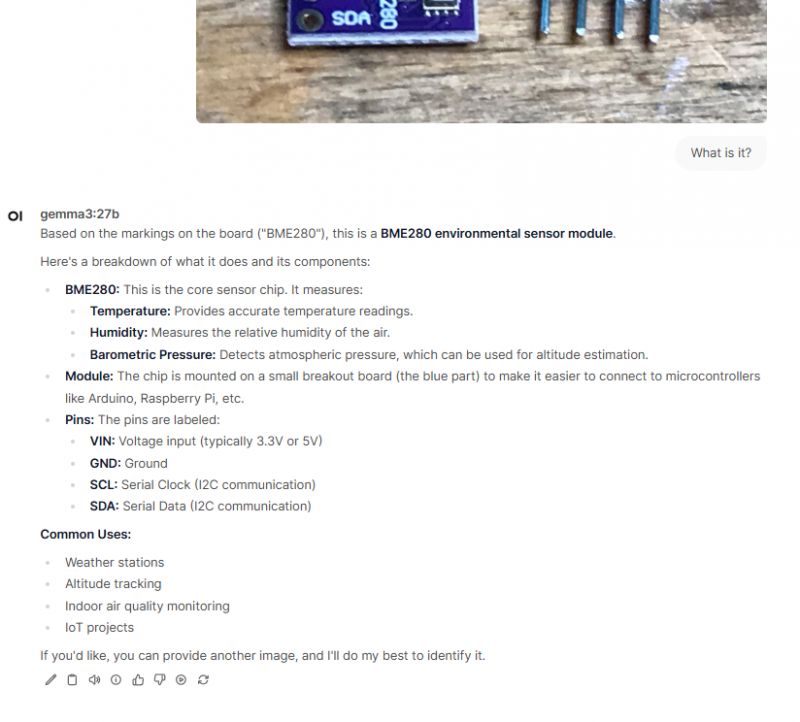

Right. It described probably everything. The only thing I think it got wrong was the number of mice: there are two, not one.

.

.

.

Good, it even read the curved Kratos lettering.

.

.

.

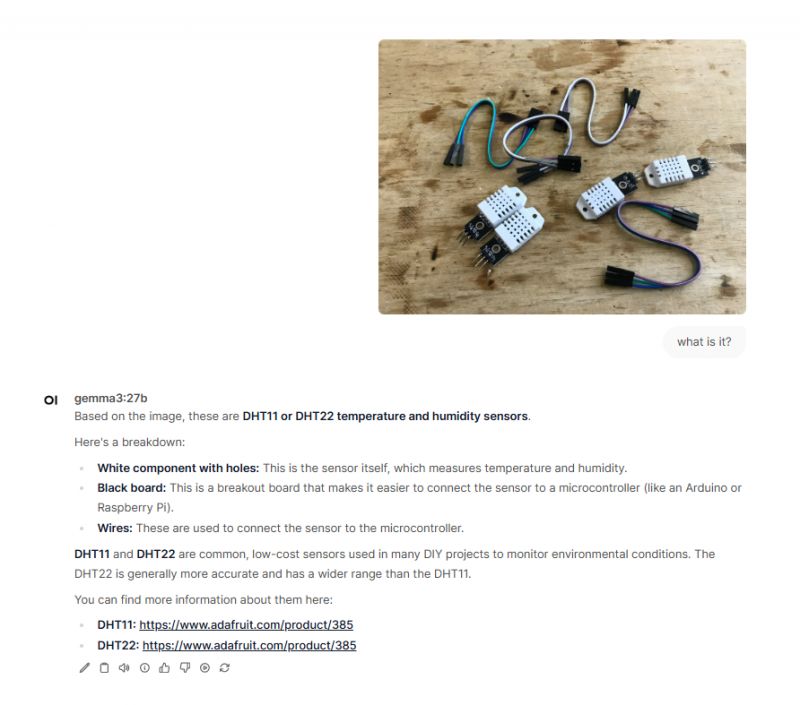

Good, although the links are probably bogus. It recognised that it was a DHT.

.

.

.

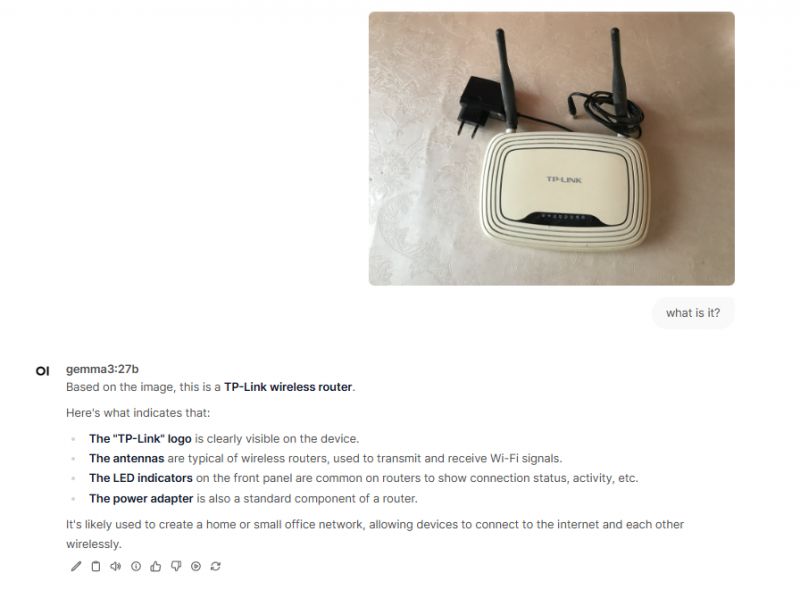

OK, it recognised that it was a WiFi router and power supply.

.

.

.

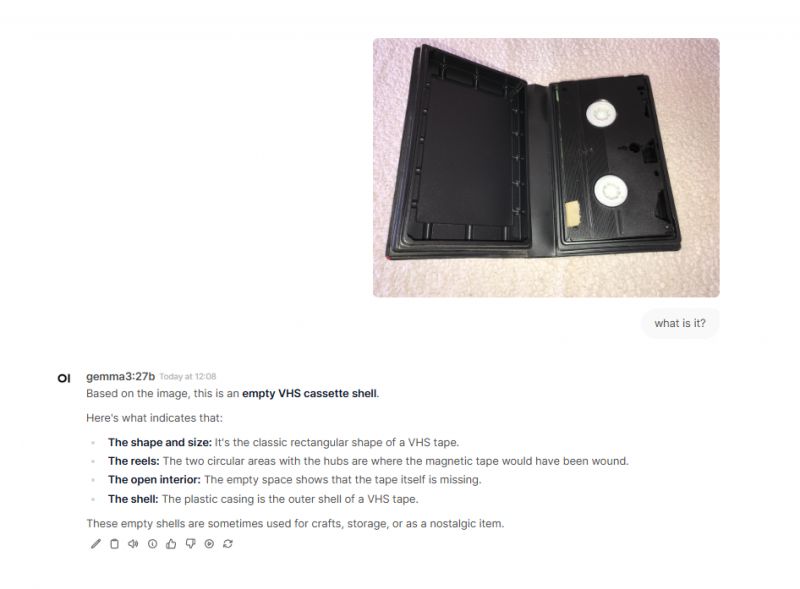

Medium. There is a cassette here, and it's not a blank. The rest is OK.

.

.

.

.

.

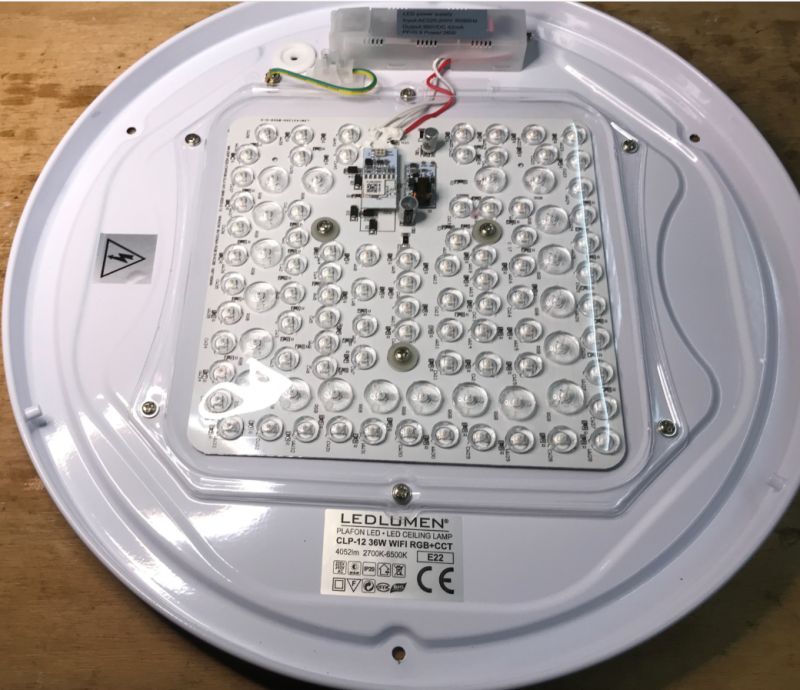

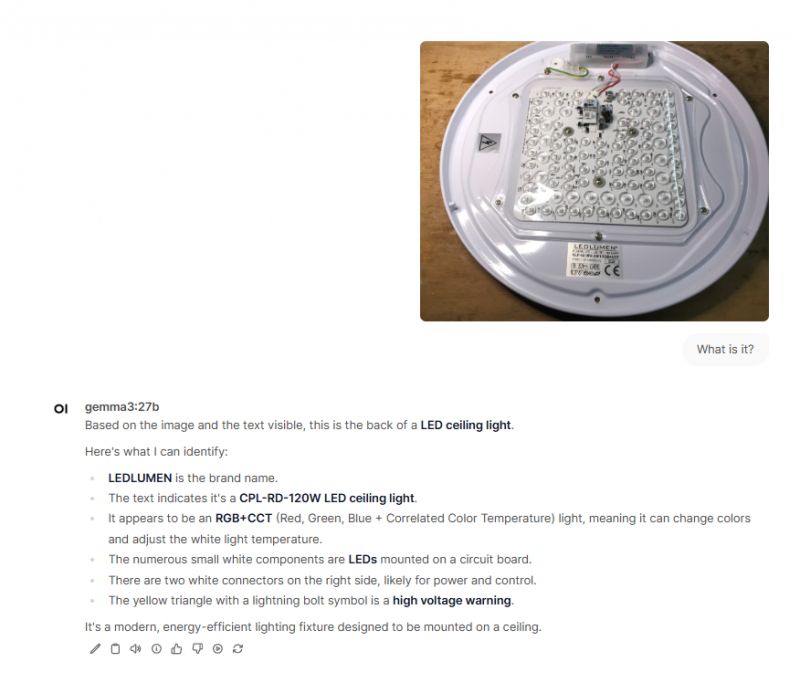

Medium. Where did it get 120W from?

.

.

.

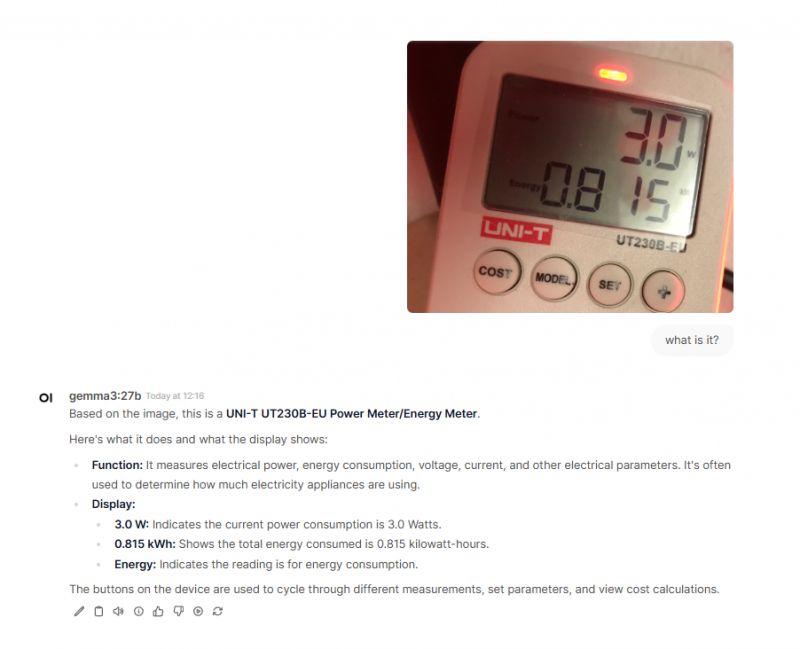

Good! It recognised the equipment type (it read the designation) and also got the reading right.

.

.

.

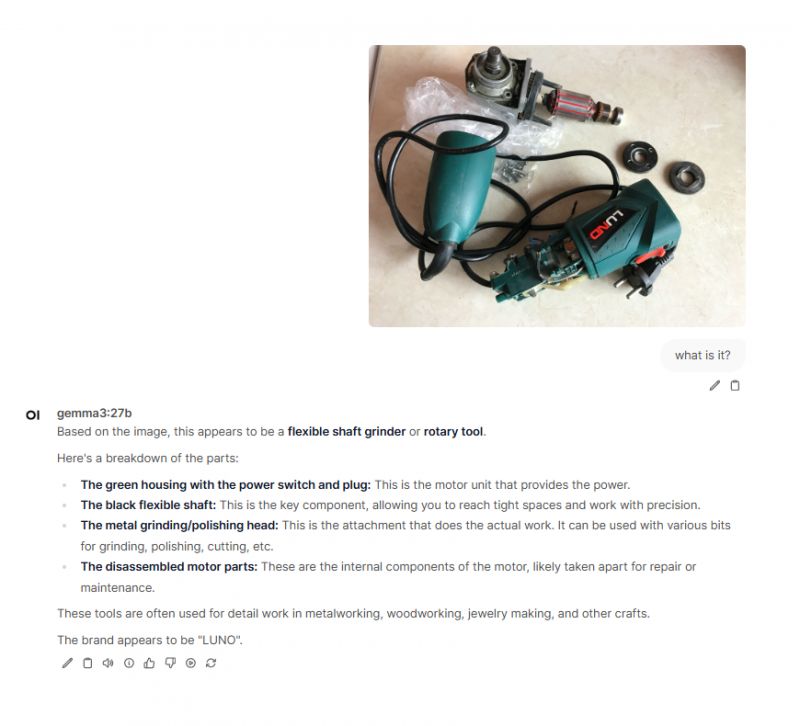

Fine, although the brand is more like Lund.

.

.

.

.

.

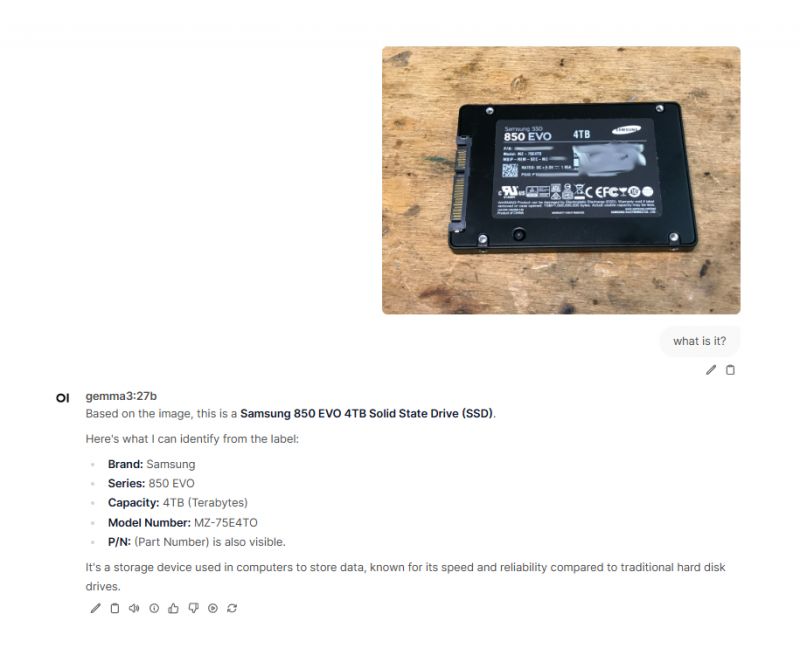

OK, although it turned a 0 into an O in the model name. That happens to me too.

.

.

.

.

.

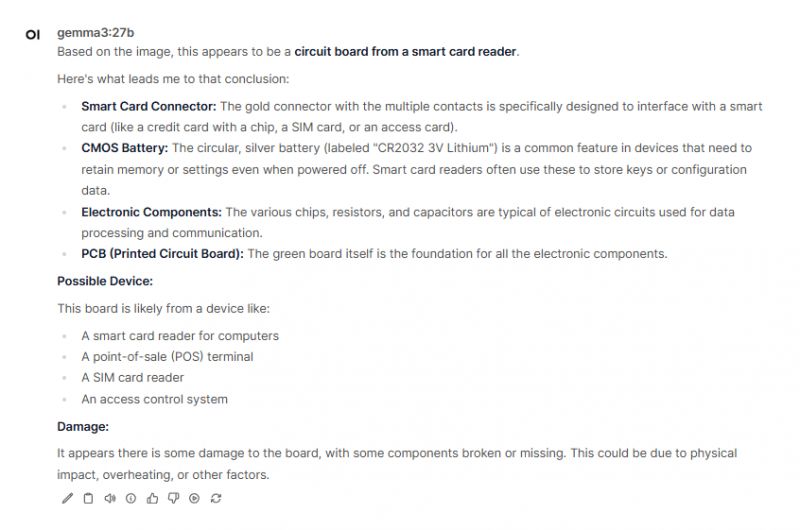

Medium. Then again, it probably had no way of telling that this was a laptop. It recognised and read the CR2032 3V marking, so OK. But where is the damage it mentions? Is it because parts are missing?

.

.

.

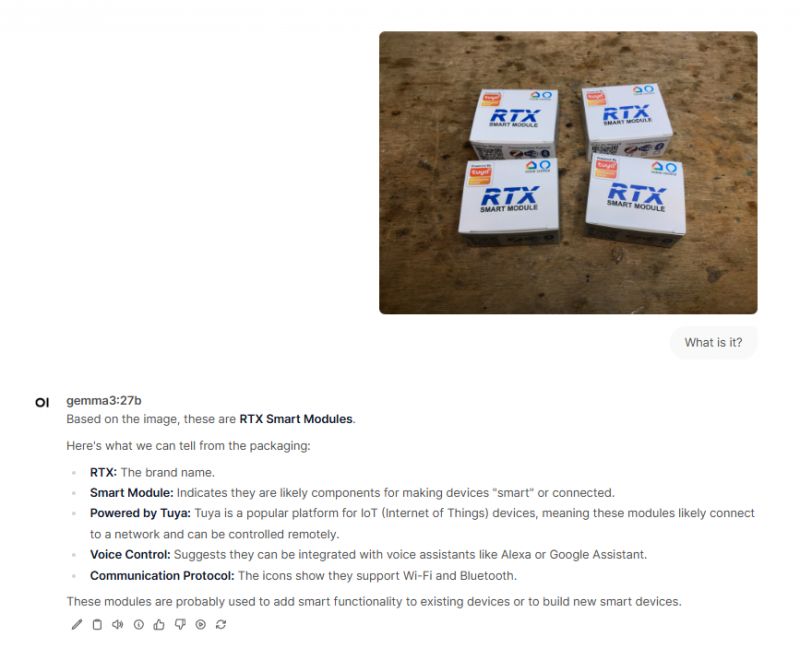

Right. It recognised that these were probably some RTX modules based on Tuya.

.

.

.

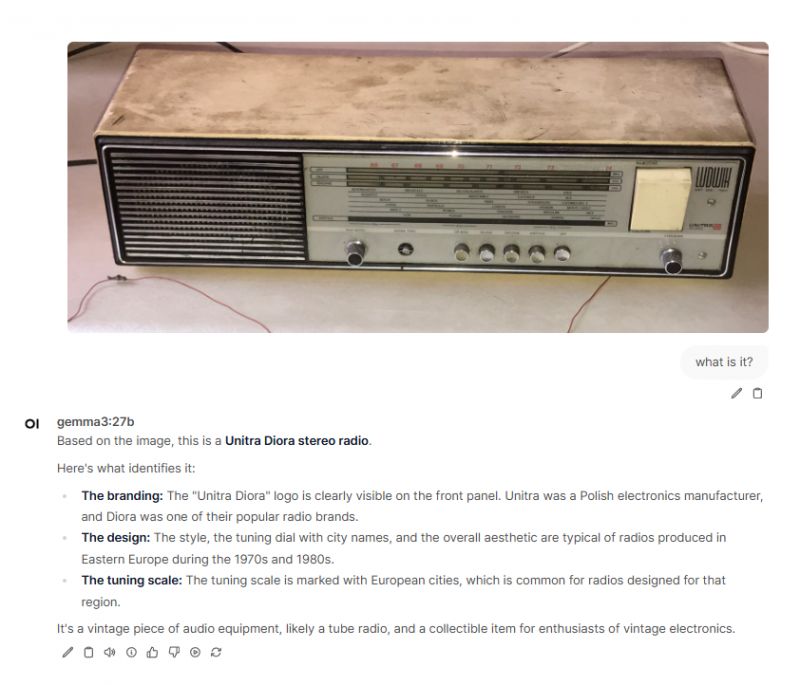

Right. It recognised (read) what the equipment was.

.

.

.

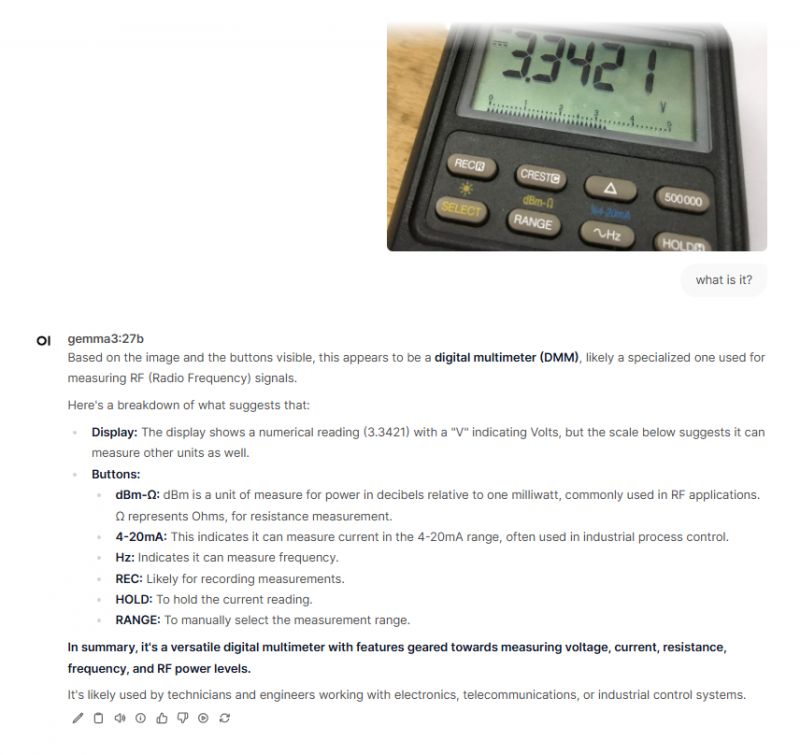

Good, although it overdid it a little with the RF. It read the voltage correctly.

.

.

.

OK, it also read the inscription, although this is not a VFD.

.

.

.

.

.

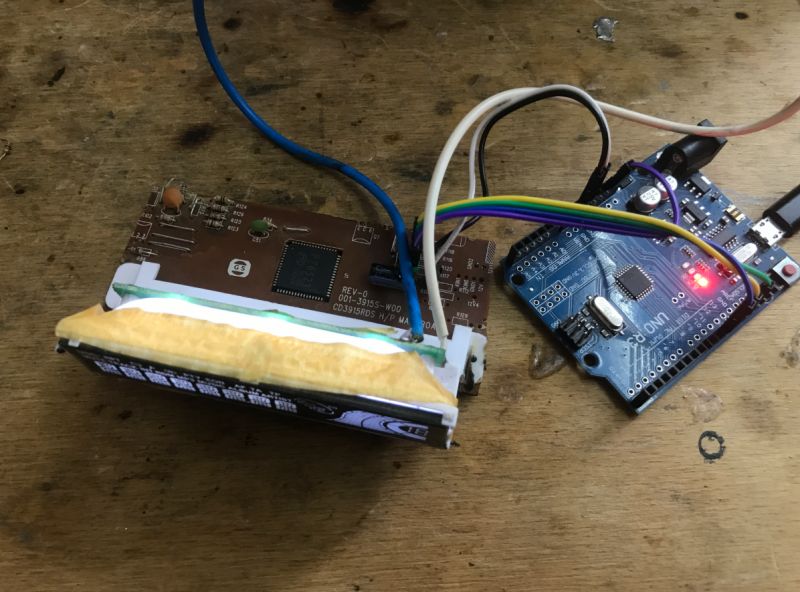

Wrong: it's not a graphics card. Did the perspective confuse it? Where does it see the RTX 3060 lettering? It did recognise the Arduino, though.

.

.

.

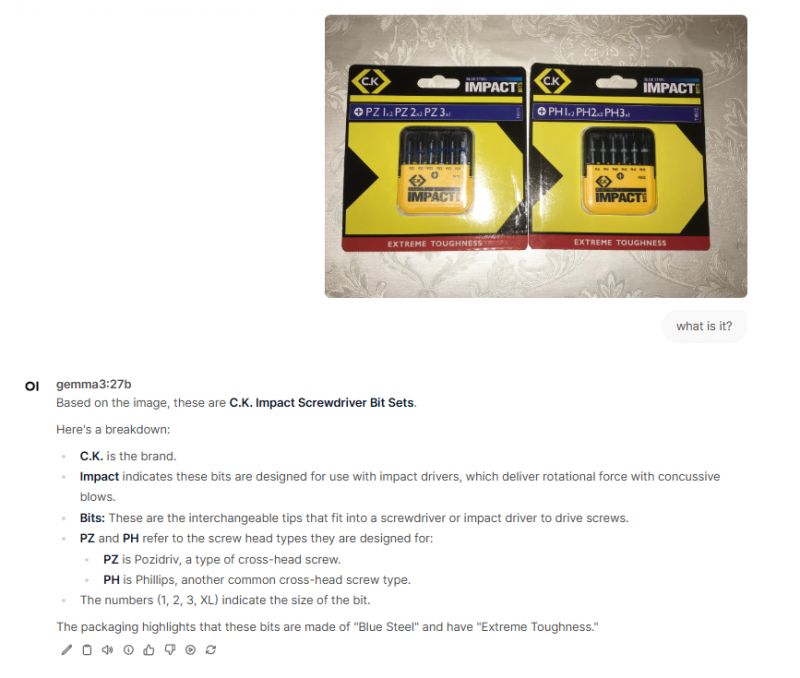

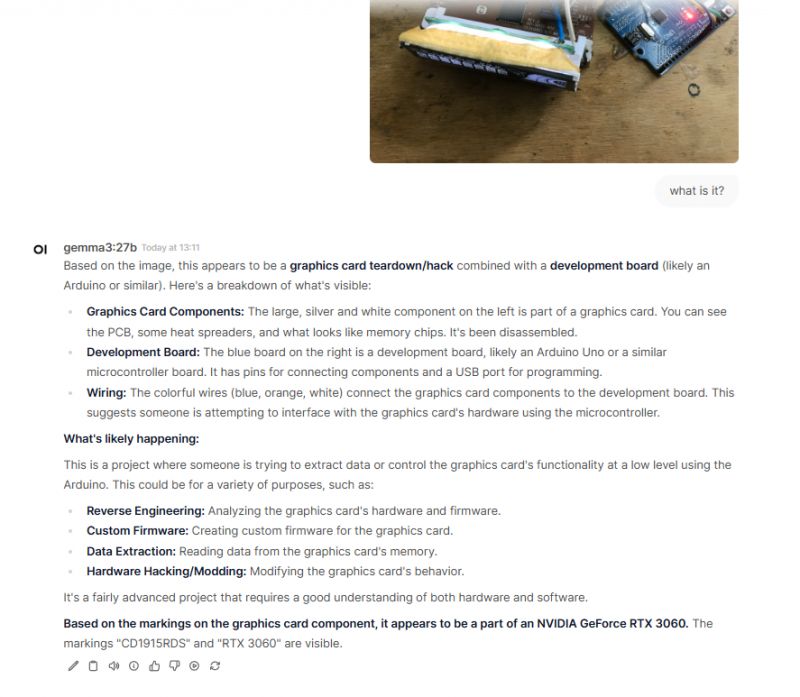

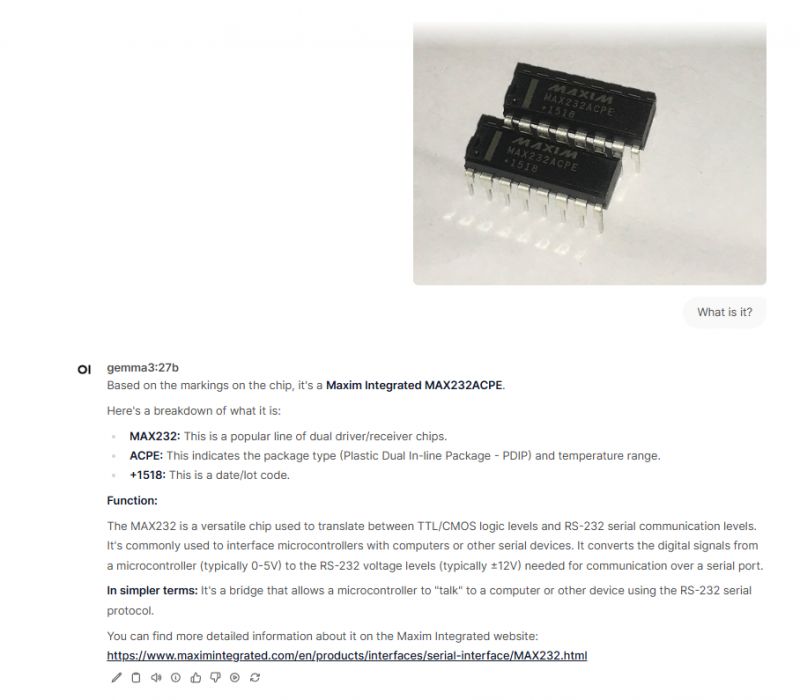

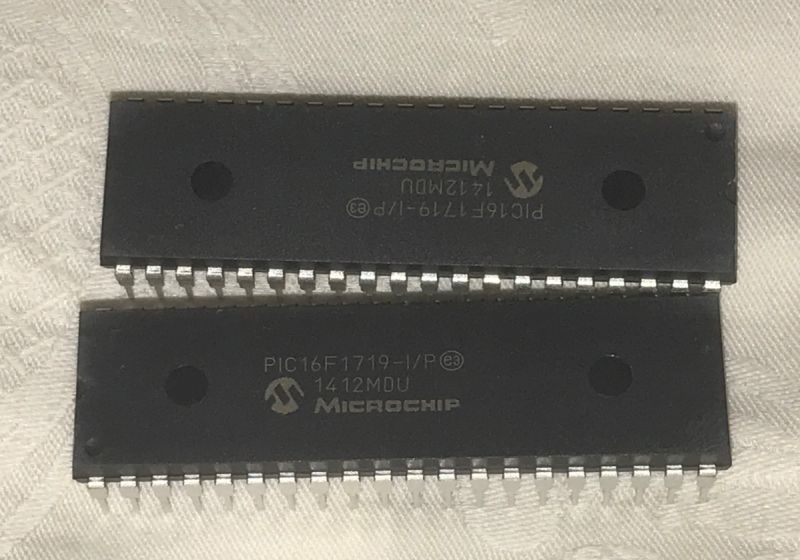

Good! It recognised both pieces and described what they are used for.

.

.

.

.

.

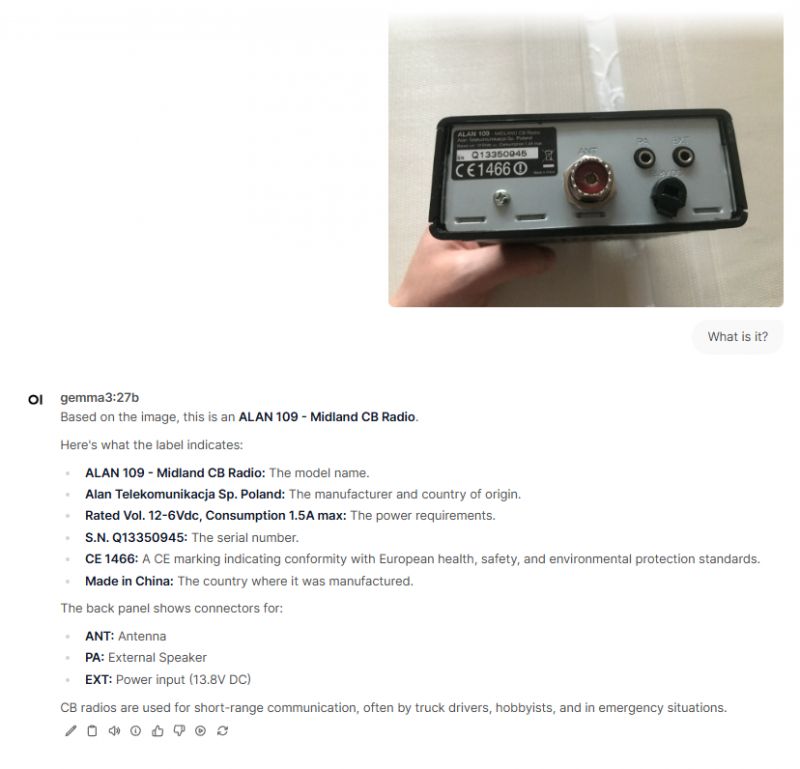

Good! Again, it read so much correctly.

.

.

.

.

Good! Again, it read correctly and even deciphered the -I/P abbreviation. Interestingly, it gave the correct link.

.

.

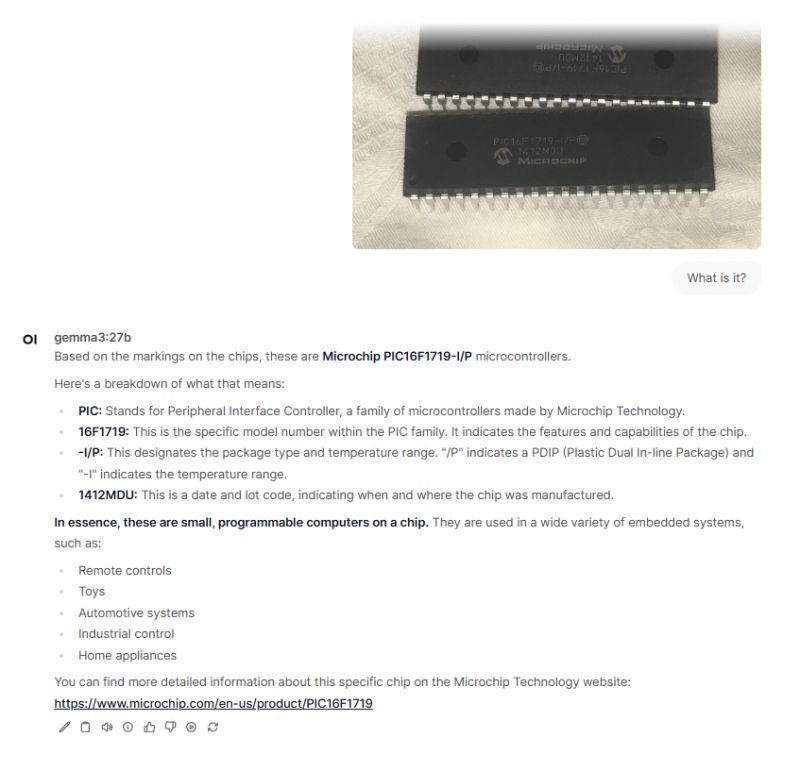

Right. It also read 3F.

.

.

.

.

.

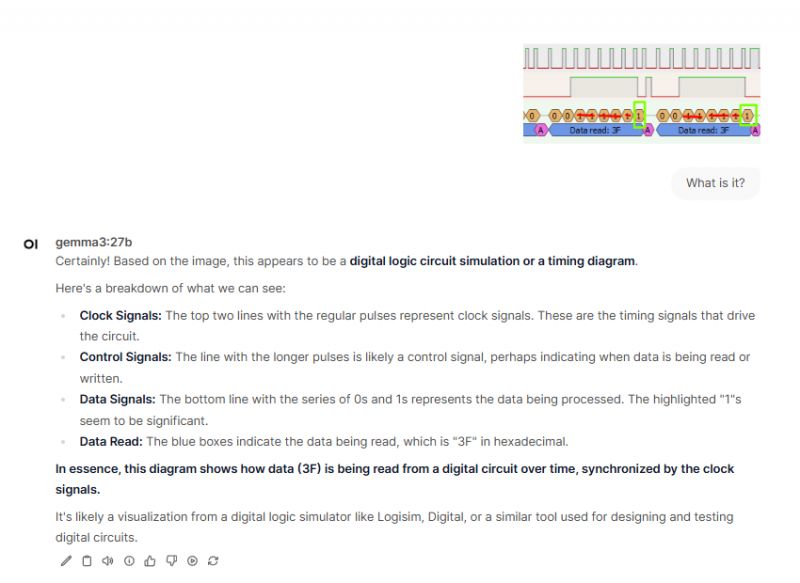

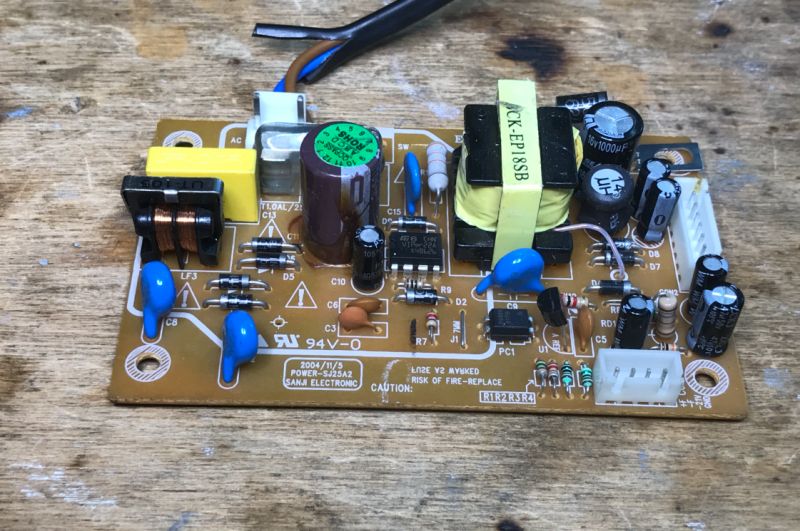

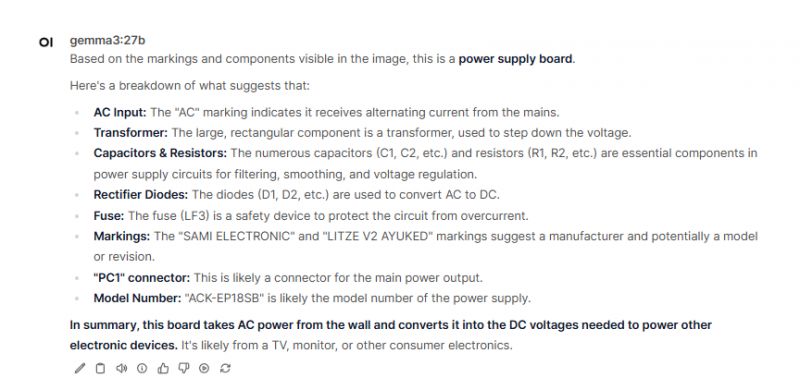

Medium. It's SANJI, not SAMI, and PC1 is not a connector but an optocoupler. Then there's the model number... and it read the reversed text as "LITZE V@ AYUKED"?

.

.

.

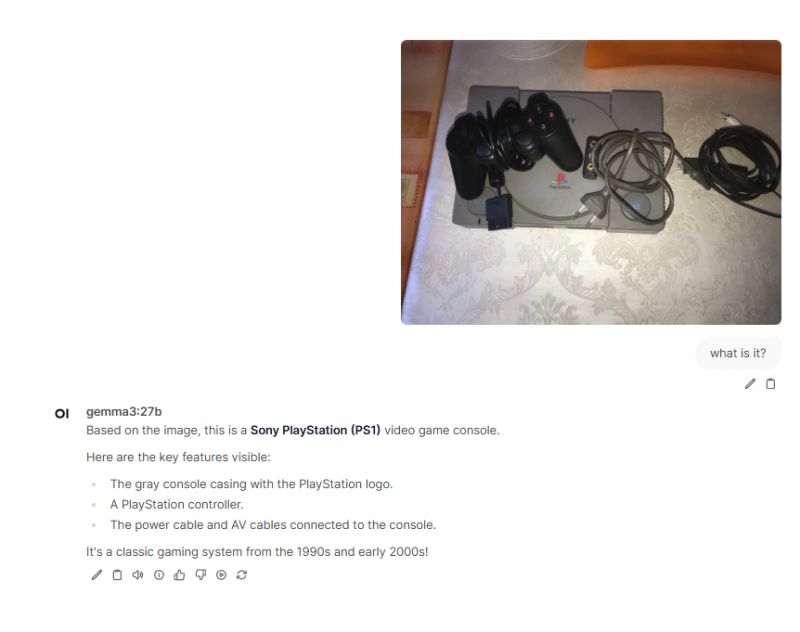

Okay, that's PS1.

.

.

.

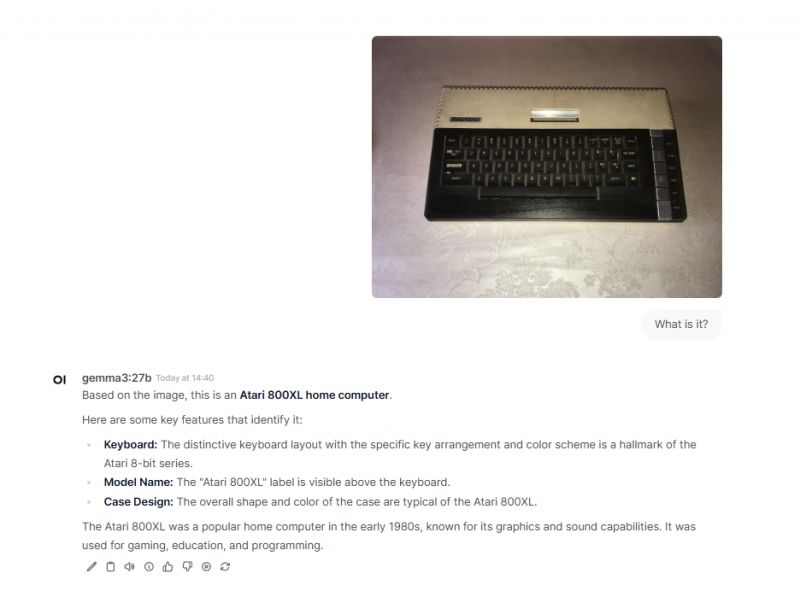

Okay, it's an Atari 800XL.

.

.

.

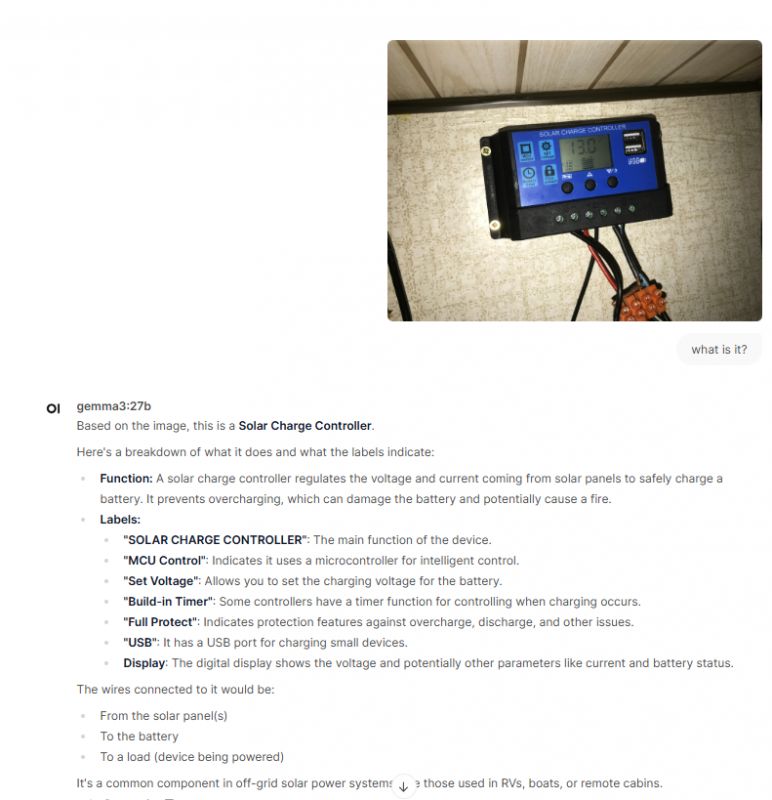

OK, it's a solar controller.

.

.

.

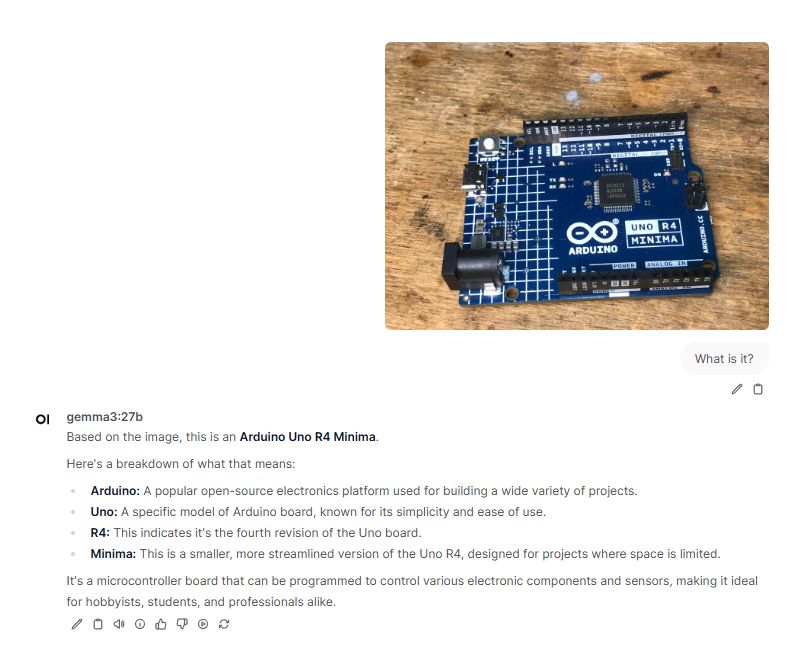

OK, it recognised the new Arduino, but was that really so difficult?

.

.

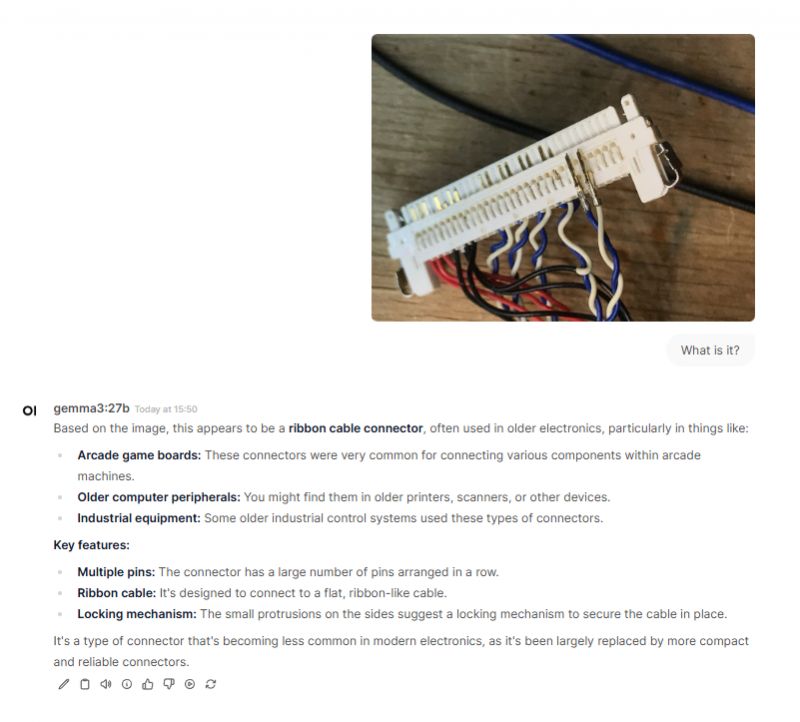

Bad. As you can see, it doesn't know the LVDS connector.

.

.

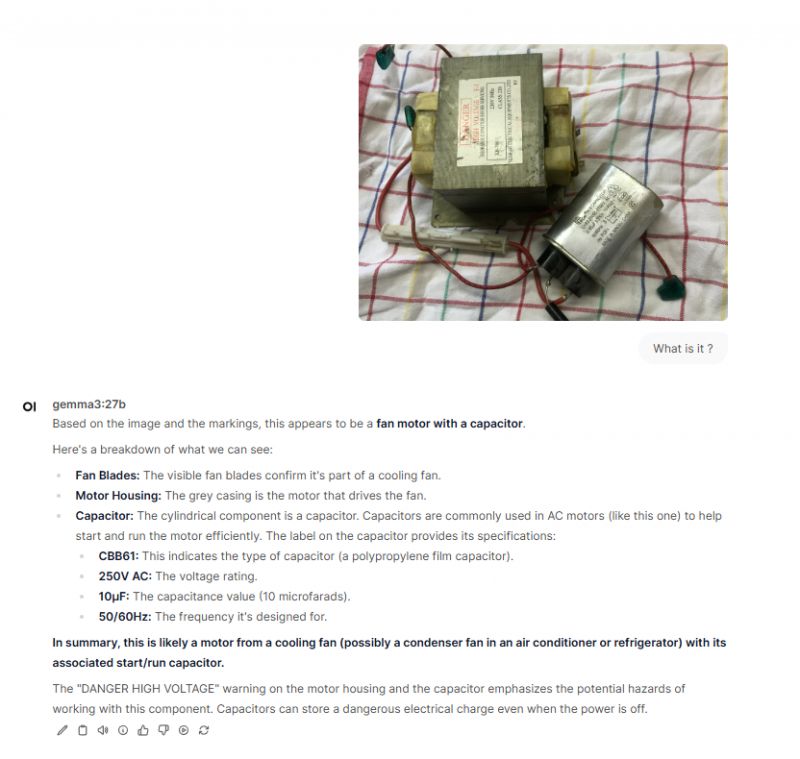

Wrong, it even read the capacitance wrong: it says 0.95 there, not 10. I wonder whether it accidentally took MOT (Microwave Oven Transformer) for MOTOR, or whether the tokens got mixed up because the names are similar.

.

.

.

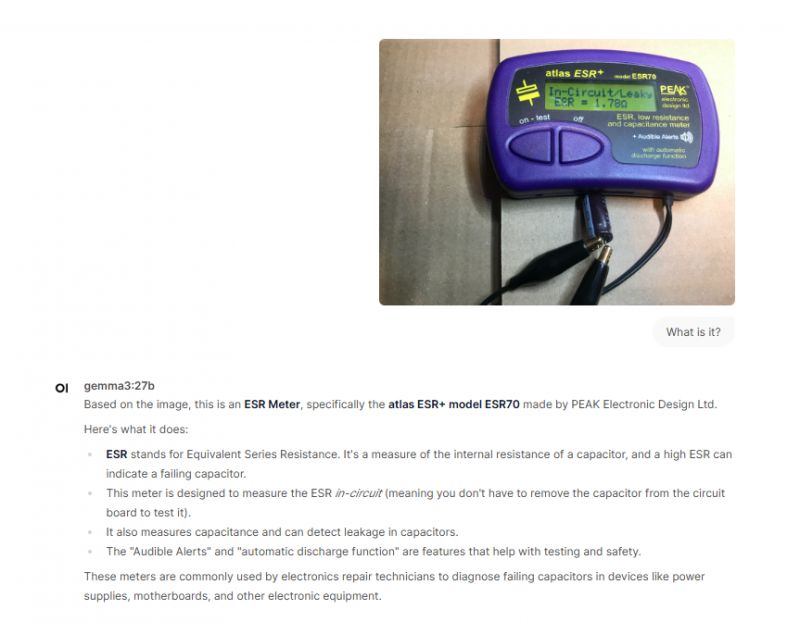

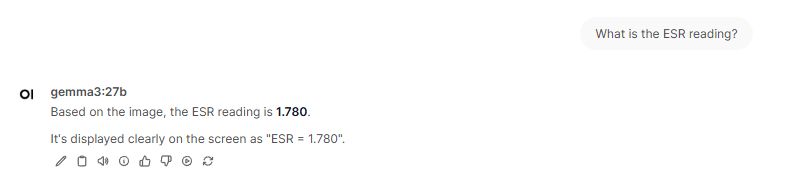

Good, it also read the ESR, as I asked.

.

.

.

Good, it even determined that something was wrong with these capacitors.

.

.

.

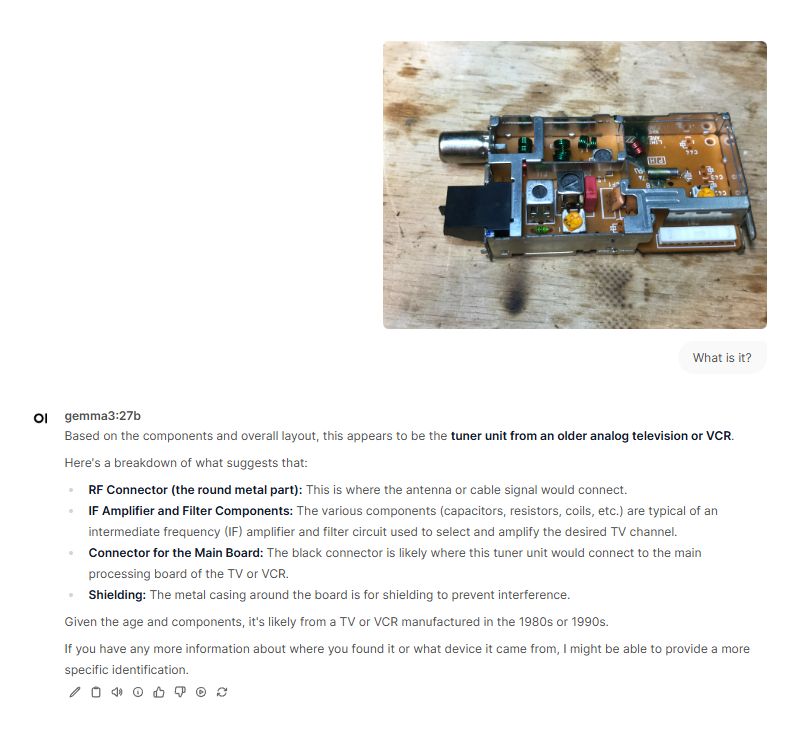

Good, it recognised that it was a tuner... but that "black connector"...

.

Bad. Gemma does not know the electron gun from a CRT, however.

.

Wrong, and it's supposedly so simple: a light switch.

.

.

Right.

.

Image recognition time

On my hardware (specs at the beginning of the topic), there are two sets of times, depending on whether something else is occupying the GPU. If nothing else is running on the laptop, CUDA kicks in and the model runs faster.

CUDA times:

time=2025-03-22T12:54:05.803+01:00 level=INFO source=ggml.go:67 msg="" architecture=gemma3 file_type=Q4_K_M name="" description="" num_tensors=1247 num_key_values=36

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce GTX 1060, compute capability 6.1, VMM: yes

load_backend: loaded CUDA backend from W:\TOOLS\ollama-windows-amd64\lib\ollama\cuda_v12\ggml-cuda.dll

load_backend: loaded CPU backend from W:\TOOLS\ollama-windows-amd64\lib\ollama\ggml-cpu-haswell.dll

time=2025-03-22T12:54:06.369+01:00 level=INFO source=ggml.go:109 msg=system CPU.0.SSE3=1 CPU.0.SSSE3=1 CPU.0.AVX=1 CPU.0.AVX2=1 CPU.0.F16C=1 CPU.0.FMA=1 CPU.0.LLAMAFILE=1 CPU.1.LLAMAFILE=1 CUDA.0.ARCHS=500,600,610,700,750,800,860,870,890,900,1200 CUDA.0.USE_GRAPHS=1 CUDA.0.PEER_MAX_BATCH_SIZE=128 compiler=cgo(clang)

time=2025-03-22T12:54:06.496+01:00 level=INFO source=ggml.go:289 msg="model weights" buffer=CPU size="16.8 GiB"

time=2025-03-22T12:54:06.496+01:00 level=INFO source=ggml.go:289 msg="model weights" buffer=CUDA0 size="502.7 MiB"

time=2025-03-22T12:54:28.892+01:00 level=INFO source=ggml.go:358 msg="compute graph" backend=CUDA0 buffer_type=CUDA0

time=2025-03-22T12:54:28.892+01:00 level=INFO source=ggml.go:358 msg="compute graph" backend=CPU buffer_type=CUDA_Host

time=2025-03-22T12:54:28.894+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.pretokenizer default="(?i:'s|'t|'re|'ve|'m|'ll|'d)|[^\\r\\n\\p{L}\\p{N}]?\\p{L}+|\\p{N}{1,3}| ?[^\\s\\p{L}\\p{N}]+[\\r\\n]*|\\s*[\\r\\n]+|\\s+(?!\\S)|\\s+"

time=2025-03-22T12:54:28.901+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.add_eot_token default=false

time=2025-03-22T12:54:28.905+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.pretokenizer default="(?i:'s|'t|'re|'ve|'m|'ll|'d)|[^\\r\\n\\p{L}\\p{N}]?\\p{L}+|\\p{N}{1,3}| ?[^\\s\\p{L}\\p{N}]+[\\r\\n]*|\\s*[\\r\\n]+|\\s+(?!\\S)|\\s+"

time=2025-03-22T12:54:28.916+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.attention.layer_norm_rms_epsilon default=9.999999974752427e-07

time=2025-03-22T12:54:28.916+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.local.freq_base default=10000

time=2025-03-22T12:54:28.916+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.global.freq_base default=1e+06

time=2025-03-22T12:54:28.916+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.freq_scale default=1

time=2025-03-22T12:54:28.916+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.mm_tokens_per_image default=256

time=2025-03-22T12:54:29.128+01:00 level=INFO source=server.go:619 msg="llama runner started in 23.58 seconds"

[GIN] 2025/03/22 - 12:54:52 | 200 | 47.8405856s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:00:33 | 200 | 4m20s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:00:57 | 200 | 24.673879s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:01:26 | 200 | 28.8113443s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:05:36 | 200 | 2m56s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:06:00 | 200 | 24.0392796s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:06:28 | 200 | 27.8827066s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:11:07 | 200 | 23.0458191s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:17:15 | 200 | 6m6s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:17:48 | 200 | 32.5789072s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:18:23 | 200 | 33.5782454s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:26:32 | 200 | 4m41s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:27:08 | 200 | 35.8528721s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:27:38 | 200 | 30.0081765s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:34:09 | 200 | 3m38s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:34:33 | 200 | 23.8713585s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:35:02 | 200 | 28.2681217s | 192.168.0.213 | POST "/api/chat"
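The two "model weights" lines in the CUDA log above show how the weights are split between system RAM and the GPU. A quick back-of-the-envelope calculation, using only the sizes printed in the log (the helper name `to_mib` is just for illustration), suggests that only a small slice of the model sits on the GTX 1060, so most of the work presumably still happens on the CPU even in the CUDA run:

```python
MIB_PER_GIB = 1024


def to_mib(size):
    """Convert a size string such as '16.8 GiB' or '502.7 MiB' to MiB."""
    value, unit = size.split()
    factor = {"MiB": 1, "GiB": MIB_PER_GIB}[unit]
    return float(value) * factor


# Sizes copied from the "model weights" lines of the CUDA run.
cpu_mib = to_mib("16.8 GiB")
gpu_mib = to_mib("502.7 MiB")

total_mib = cpu_mib + gpu_mib
gpu_share = gpu_mib / total_mib

print(f"total weights: {total_mib / MIB_PER_GIB:.1f} GiB")
print(f"share on GPU:  {gpu_share:.1%}")
```

The total comes out at about 17.3 GiB, which matches the single CPU buffer reported in the CPU-only log, and the GPU share is a little under 3%.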

CPU times:

time=2025-03-22T13:42:18.212+01:00 level=INFO source=ggml.go:67 msg="" architecture=gemma3 file_type=Q4_K_M name="" description="" num_tensors=1247 num_key_values=36

load_backend: loaded CPU backend from W:\TOOLS\ollama-windows-amd64\lib\ollama\ggml-cpu-haswell.dll

time=2025-03-22T13:42:18.249+01:00 level=INFO source=ggml.go:109 msg=system CPU.0.SSE3=1 CPU.0.SSSE3=1 CPU.0.AVX=1 CPU.0.AVX2=1 CPU.0.F16C=1 CPU.0.FMA=1 CPU.0.LLAMAFILE=1 CPU.1.LLAMAFILE=1 compiler=cgo(clang)

time=2025-03-22T13:42:18.262+01:00 level=INFO source=ggml.go:289 msg="model weights" buffer=CPU size="17.3 GiB"

time=2025-03-22T13:42:28.245+01:00 level=INFO source=ggml.go:358 msg="compute graph" backend=CPU buffer_type=CPU

time=2025-03-22T13:42:28.248+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.pretokenizer default="(?i:'s|'t|'re|'ve|'m|'ll|'d)|[^\\r\\n\\p{L}\\p{N}]?\\p{L}+|\\p{N}{1,3}| ?[^\\s\\p{L}\\p{N}]+[\\r\\n]*|\\s*[\\r\\n]+|\\s+(?!\\S)|\\s+"

time=2025-03-22T13:42:28.255+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.add_eot_token default=false

time=2025-03-22T13:42:28.261+01:00 level=WARN source=ggml.go:149 msg="key not found" key=tokenizer.ggml.pretokenizer default="(?i:'s|'t|'re|'ve|'m|'ll|'d)|[^\\r\\n\\p{L}\\p{N}]?\\p{L}+|\\p{N}{1,3}| ?[^\\s\\p{L}\\p{N}]+[\\r\\n]*|\\s*[\\r\\n]+|\\s+(?!\\S)|\\s+"

time=2025-03-22T13:42:28.277+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.attention.layer_norm_rms_epsilon default=9.999999974752427e-07

time=2025-03-22T13:42:28.277+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.local.freq_base default=10000

time=2025-03-22T13:42:28.277+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.global.freq_base default=1e+06

time=2025-03-22T13:42:28.277+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.rope.freq_scale default=1

time=2025-03-22T13:42:28.277+01:00 level=WARN source=ggml.go:149 msg="key not found" key=gemma3.mm_tokens_per_image default=256

time=2025-03-22T13:42:28.424+01:00 level=INFO source=server.go:619 msg="llama runner started in 10.48 seconds"

[GIN] 2025/03/22 - 13:52:32 | 200 | 10m15s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 13:57:10 | 200 | 4m37s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 14:03:48 | 200 | 8m58s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 14:08:04 | 200 | 10m53s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 14:11:44 | 200 | 7m56s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 14:14:56 | 200 | 9m23s | 192.168.0.213 | POST "/api/chat"

[GIN] 2025/03/22 - 14:22:46 | 200 | 16m42s | 192.168.0.213 | POST "/api/chat"
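Each `[GIN]` line in the logs above records how long one `/api/chat` request took, in a format like `47.8405856s` or `4m20s`. To compare the CUDA and CPU runs without reading the logs by eye, the durations can be converted to seconds. The sketch below is one way to do it; the regular expressions and function names are my own assumptions, and it only handles the `h`, `m`, `s` and `ms` units.

```python
import re
import statistics

# Latency column of a GIN access-log line, e.g. "| 200 | 4m20s |".
GIN_LATENCY = re.compile(r"\|\s*\d{3}\s*\|\s*(\S+)\s*\|")
# One component of a duration such as "4m20s" or "47.8405856s".
DURATION_PART = re.compile(r"(\d+(?:\.\d+)?)(h|ms|m|s)")
SECONDS_PER_UNIT = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}


def parse_duration(text):
    """Convert a duration like '4m20s' to seconds."""
    parts = DURATION_PART.findall(text)
    if not parts:
        raise ValueError(f"not a duration: {text!r}")
    return sum(float(value) * SECONDS_PER_UNIT[unit] for value, unit in parts)


def latencies(log_lines):
    """Return the request durations (in seconds) found in GIN log lines."""
    found = []
    for line in log_lines:
        match = GIN_LATENCY.search(line)
        if match:  # lines without a latency column are skipped
            found.append(parse_duration(match.group(1)))
    return found


# Three lines copied from the logs above; paste the full log to get real stats.
sample = [
    '[GIN] 2025/03/22 - 12:54:52 | 200 | 47.8405856s | 192.168.0.213 | POST "/api/chat"',
    '[GIN] 2025/03/22 - 13:00:33 | 200 | 4m20s | 192.168.0.213 | POST "/api/chat"',
    '[GIN] 2025/03/22 - 13:52:32 | 200 | 10m15s | 192.168.0.213 | POST "/api/chat"',
]
seconds = latencies(sample)
print([round(s, 1) for s in seconds])
print("median:", round(statistics.median(seconds), 1))
```

Running `latencies` separately on the CUDA block and the CPU block gives two lists that can be compared with `statistics.median` or `max`.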

Summary

Impressive. There are shortcomings, but it is still impressive. This model is definitely better than the previously tested LLaVA, and with the help of the GPU (via CUDA) it still runs pretty fast.

Hallucinations still happen, especially in unclear situations, but it's still very good.

What's more, this model does a pretty good job of reading text and all sorts of markings and lettering, both on hardware and on displays.

This is a really great result, considering that I ran the whole thing on my old laptop.

Do you see any uses for such an AI model?

If you have any pictures to test, feel free to comment too.
