How do I generate images on my own computer? How do I change the content of a picture? Can AI suggest an interface sketch for a smart home controller? That is what I will try to find out here, thanks to an easy-to-use web interface built on the Stable Diffusion XL architecture.

Fooocus, presented here, is a completely free, open and 100% local (no internet, on your own computer) environment for creating images with AI models based on Stable Diffusion. Among other things, Fooocus offers:

- image generation based on prompts

- GPT-2-based prompt expansion

- image upscaling, i.e. increasing the resolution

- outpainting, i.e. adding parts of an image outside its frame

- inpainting, i.e. editing part of an image

- variation, i.e. creating different versions of the image

- image prompt

- describe image, i.e. turning an existing image into a prompt for the selected model

- negative prompt, i.e. what you don't want in the image

- the option to add LoRA add-ons and styles and to set their weights, which gives us greater control over what we generate
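To give a rough idea of what a LoRA weight does: in the usual LoRA formulation, an add-on stores a small low-rank update that is added to a layer's original weights, and the weight setting scales that update. The sketch below is a toy illustration in plain Python with made-up numbers. The names `matmul` and `apply_lora` are hypothetical, the usual rank-dependent scaling factor is left out for simplicity, and this is not taken from Fooocus itself.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(base, down, up, weight):
    """Return base + weight * (up @ down), the scaled low-rank update.

    Hypothetical helper for illustration only.
    """
    delta = matmul(up, down)
    return [[base[i][j] + weight * delta[i][j]
             for j in range(len(base[0]))]
            for i in range(len(base))]


# Made-up 2x2 "layer" and a rank-1 add-on (2x1 times 1x2).
base = [[1.0, 0.0], [0.0, 1.0]]
up = [[1.0], [2.0]]
down = [[0.5, 0.5]]

if __name__ == "__main__":
    # Weight 0.0 leaves the base model untouched; larger weights
    # push the layer further towards the add-on's behaviour.
    for weight in (0.0, 0.5, 1.0):
        print(weight, apply_lora(base, down, up, weight))
```

With a weight of 0 the add-on has no effect at all, which is why lowering the weight is a simple way to tone down an add-on that dominates the image.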

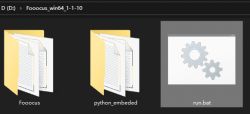

Install and run

Download the package from the repository, extract it and run run.bat.

Newer versions also include separate scripts, run_realistic.bat and run_anime.bat. They work the same way; you simply choose the graphics style at startup.
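For the curious, the three batch files appear to be thin wrappers around one Python entry point, with the style chosen by a `--preset` argument. The sketch below only builds and prints the corresponding command lines. The paths `python_embeded\python.exe` and `Fooocus\entry_with_update.py` and the helper `build_launch_command` are assumptions based on the project's documentation, so it is worth opening your own .bat files in a text editor to check what they really contain.

```python
def build_launch_command(preset=None):
    """Build the command a Fooocus launcher script is assumed to run.

    preset: None for the default style, or a name such as
    "realistic" or "anime" (assumed preset names).
    """
    command = [
        r".\python_embeded\python.exe",   # assumed bundled interpreter
        "-s",
        r"Fooocus\entry_with_update.py",  # assumed entry script
    ]
    if preset is not None:
        command += ["--preset", preset]
    return command


if __name__ == "__main__":
    # Print the commands instead of running them.
    for preset in (None, "realistic", "anime"):
        print(" ".join(build_launch_command(preset)))
```

If the assumptions hold for your version, the returned list could be passed to `subprocess.run` from inside the extracted folder, although double-clicking the .bat file remains the simpler route.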

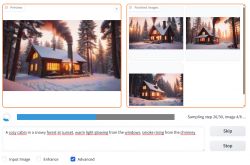

After a while, the Fooocus page opens in the browser.

Time to start the fun.

First generations

I must warn you up front that the graphics take quite a long time to generate. On my laptop (Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz, 64GB RAM, GeForce GTX 1060) generation takes about three minutes.

We simply type in the prompt and select the options. Sometimes it takes some trial and error to get the desired effect. In addition, the keywords used affect the style of the whole image, so it's hard to produce a consistent 'series' of graphics. But let's give it a go, starting with a few sample prompts. Everything was generated on my laptop.
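Since keywords influence the style of the whole image, one thing that may help with a 'series' is to reuse exactly the same style keywords in every prompt and change only the subject. This is an assumption rather than a tested recipe, and the helper `build_series` below is a hypothetical name; it just assembles prompt strings that can then be pasted into the interface one by one.

```python
def build_series(subjects, shared_keywords):
    """Combine each subject with the same shared style keywords.

    Hypothetical helper: it only builds text and does not talk to
    Fooocus in any way.
    """
    suffix = ", ".join(shared_keywords)
    if not suffix:
        return list(subjects)
    return [f"{subject}, {suffix}" for subject in subjects]


if __name__ == "__main__":
    prompts = build_series(
        ["A cute fox wearing a small backpack",
         "A cute owl sitting on a branch"],
        ["autumn forest", "orange and red leaves", "soft light"],
    )
    for prompt in prompts:
        print(prompt)
```

Even with identical keywords the results will still vary from image to image, so this narrows the style down rather than guaranteeing a matching set.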

Model used: juggernautXL_v8Rundiffusion.safetensors

A cozy cabin in a snowy forest at sunset, warm light glowing from the windows, smoke rising from the chimney

Pretty good, but why is the chimney on fire?

Futuristic city skyline at night, neon lights reflecting on wet streets, flying cars in the sky

The colour scheme is great, but the vehicles and backgrounds quickly stop making sense.

Astronaut floating peacefully above Earth, with stars and galaxies in the background, contemplative mood

Strange details again.

A cat café with cozy decor, wooden furniture, and playful cats lounging around while people sip coffee

Here the artefacts are much more visible. This is often what you get when you try to generate a scene with many characters.

A cute fox wearing a small backpack, wandering through an autumn forest filled with orange and red leaves

Quite good, although the character comes out slightly different every time.

A massive floating island with waterfalls cascading into the clouds, bioluminescent plants glowing softly

A colossal mechanical dragon flying over a steampunk city, gears and smoke in the background

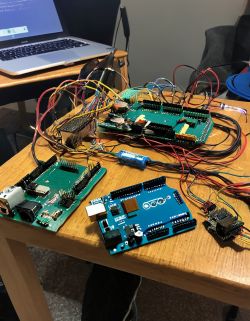

Electronics through the eyes of AI

Just out of curiosity - does AI know what an Arduino looks like?

arduino, electronics, table

Something like it must have been in the training data, but the result is still nonsense...

And what about schematics?

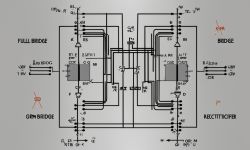

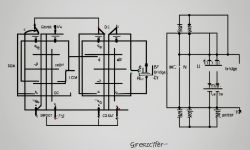

schematic, full bridge rectifier, graetz, electronics

I wasn't expecting much, but even the font is cool. Still, that "RECCTITICIFER"...

The next generation produced... a picture of the component itself.

How about having AI generate some retro hardware for us?

unitra, radio, retro, table, receiver

And here is one more weird experiment...

polish car, fiat 126p, retro, polish city, street

Inpainting

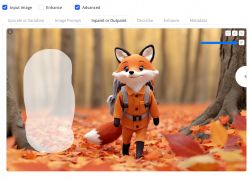

Inpainting lets us modify or completely replace part of an image. For example, we can give our fox a travelling companion. To use inpainting, turn on the "input image" option and drag in the image you want to edit. Then mark the areas that are going to be changed.
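Conceptually, the marked area acts as a mask: pixels outside it are kept from the original image, and pixels inside it are replaced with newly generated content. The sketch below shows only that final masking step on tiny made-up grids of numbers. The function `composite` is a hypothetical name, and a real inpainting model does much more (it looks at the surrounding pixels and blends the edges), so treat this as an illustration of the idea and not as Fooocus's implementation.

```python
def composite(original, generated, mask):
    """Take generated pixels where mask is 1, original pixels elsewhere.

    All three arguments are equally sized grids (lists of rows).
    Hypothetical helper for illustration only.
    """
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Made-up 2x3 "images": 1 stands for old content, 9 for new content.
original = [[1, 1, 1], [1, 1, 1]]
generated = [[9, 9, 9], [9, 9, 9]]
mask = [[0, 1, 0], [0, 1, 1]]  # 1 marks the area selected for editing

if __name__ == "__main__":
    for row in composite(original, generated, mask):
        print(row)
```

This is also why everything outside the marked zone stays exactly as it was: only the selected pixels are ever replaced.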

The prompt I gave was similar to the one before, only now it included the word "owl".

The first two results:

Attempts at practical application

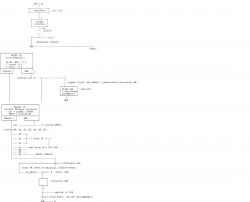

Finally, I tried to use this generator to create sketches or proposals for various "smart home" devices, for example a heating controller, a weather station or some other kind of touch panel. I know these models do not generate readable text, but maybe they will at least give me some ideas?

smart home, ui, design, chart, heater, interface, screenshot

From a distance it still resembles something, and the colour scheme is probably OK too, but the text and details are drawn completely wrong.

Summary

This was a short presentation showing what images can easily be generated on typical consumer hardware. It turned out not to be difficult at all, and the results are pretty good too, although I'm sure opinions will be divided. Out of curiosity I also tried to generate slightly more practical things, but in that respect the model I used falls short, so I wouldn't expect much from it.

I'll leave the final verdict to you. I'll only emphasise that all generation is 100% local, as well as highly customisable and modifiable, so it may be worth a look, if only for privacy reasons.

Do you use image generators and if so, what for?

![[AI] Graphics and image generator on your own computer - web interface for Stable Diffusion](https://obrazki.elektroda.pl/9701085300_1742977223_thumb.jpg)