Open-WebUI is a great tool for running multimodal Large Language Models locally, but not all models can be downloaded directly through the OWUI web panel. Luckily, GGUF models can be downloaded separately and then uploaded to OWUI through the experimental GGUF import. Here I will show you, step by step, how to import such a model.

This tutorial assumes that you already have Open-WebUI set up. If not, please check out the previous tutorial:

ChatGPT locally? AI/LLM assistants to run on your computer - download and installation

You may also find this interesting:

Minitest: robot vision? Multimodal AI LLaVA and workshop photo analysis - 100% local

Let's start by considering what GGUF is.

GGUF, which stands for GPT-Generated Unified Format, is the successor to the GGML (GPT-Generated Model Language) format and was released on 21 August 2023. GGUF is a file format for storing GPT-like models for inference. GGUF models can run on both GPU and CPU, and the format offers extensibility, stability and versatility.
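To make the format a bit more concrete, here is a minimal Python sketch that reads the fixed-size header at the start of a GGUF file. It assumes the layout used by GGUF versions 2 and 3: the 4-byte magic `GGUF`, a little-endian uint32 version, then uint64 counts of tensors and of metadata key/value pairs. Version 1 files used 32-bit counts and big-endian files also exist, so neither case is handled here. The function name `read_gguf_header` is just an illustrative choice.

```python
import struct

GGUF_MAGIC = b"GGUF"

def read_gguf_header(path: str) -> dict:
    """Read the fixed-size GGUF header (assumes format v2/v3, little-endian)."""
    with open(path, "rb") as f:
        # Layout: 4-byte magic, uint32 version, uint64 tensor count, uint64 metadata KV count
        header = f.read(4 + 4 + 8 + 8)
    if len(header) < 24 or header[:4] != GGUF_MAGIC:
        raise ValueError(f"{path} is not a GGUF file")
    # "<IQQ" = little-endian uint32 followed by two uint64 values (20 bytes)
    version, tensor_count, kv_count = struct.unpack("<IQQ", header[4:24])
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}
```

Running it on the file you download later, for example `read_gguf_header("mistral-7b-instruct-v0.2.Q8_0.gguf")`, is a quick sanity check before a long upload: a `ValueError` means the file does not start with the GGUF magic.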

More details about GGUF can be found on the Hugging Face website.

So first, you will need a GGUF model.

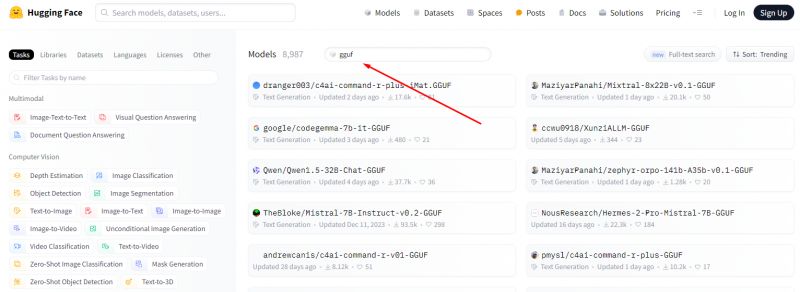

Go to https://huggingface.co/models and browse the models for download.

Not all models are available in GGUF format, so filter the entries by GGUF:

For example, let's download TheBloke/Mistral-7B-Instruct-v0.2-GGUF:

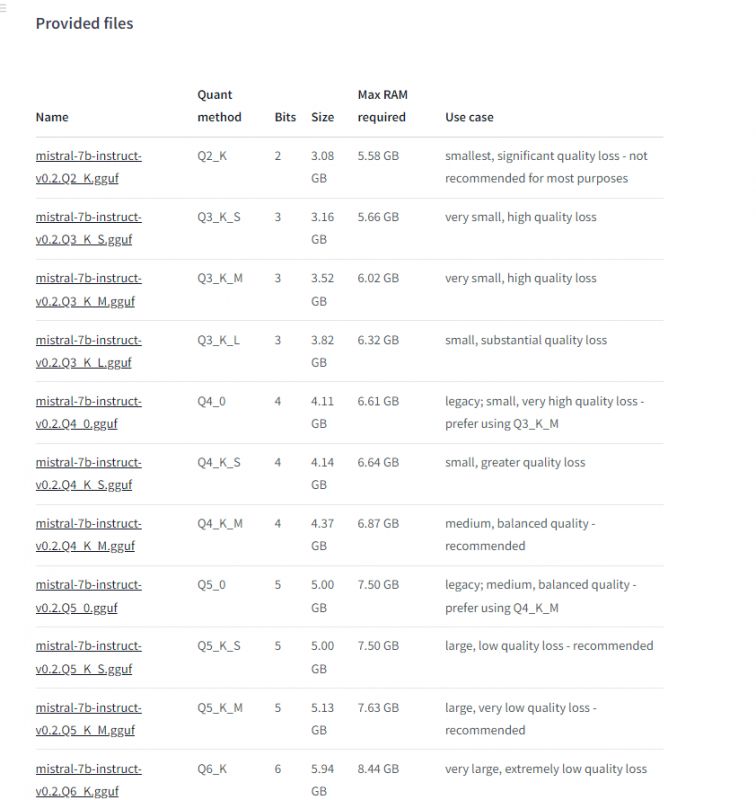

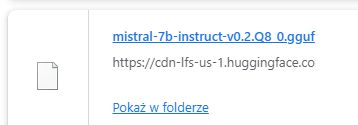

I've chosen the mistral-7b-instruct-v0.2.Q8_0.gguf version.

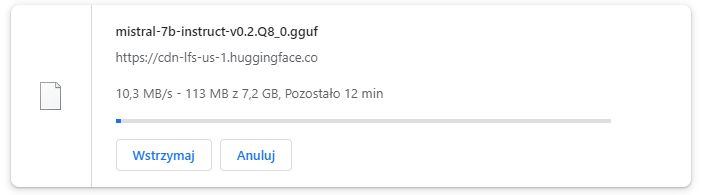

Wait for the download to finish:

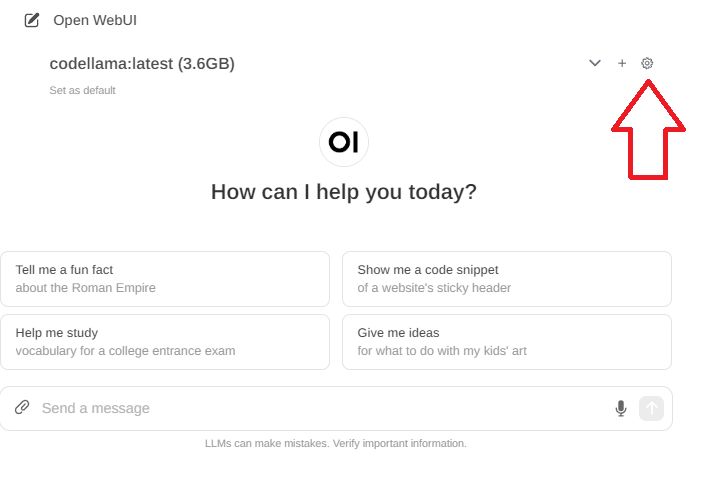

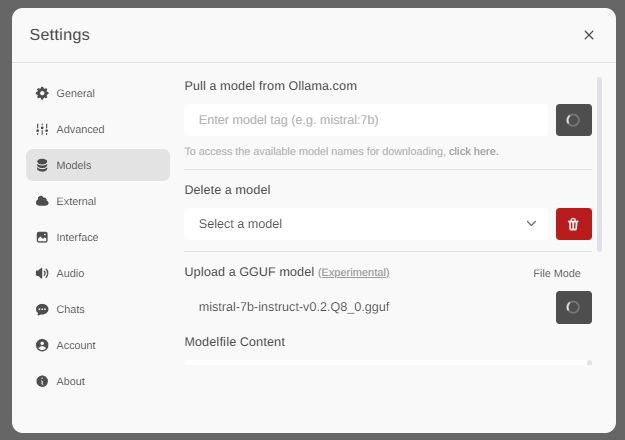
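If you prefer to script the download instead of using the browser, a minimal sketch could look like this. It assumes the `resolve/<revision>` URL pattern that Hugging Face uses for direct file links and relies only on the Python standard library; `gguf_download_url` and `download` are hypothetical helper names. The official `huggingface_hub` package offers `hf_hub_download(repo_id=..., filename=...)` for the same job, with caching.

```python
import urllib.request
from urllib.parse import quote

def gguf_download_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build a direct download link for one file in a Hugging Face model repository."""
    if not filename.endswith(".gguf"):
        raise ValueError(f"expected a .gguf file, got {filename!r}")
    # Raw repository files are served under /<repo_id>/resolve/<revision>/<filename>
    return f"https://huggingface.co/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"

def download(url: str, dest: str) -> str:
    """Save the file at `url` to `dest`; multi-gigabyte GGUF files take a while."""
    path, _headers = urllib.request.urlretrieve(url, dest)
    return path
```

Calling `download(gguf_download_url("TheBloke/Mistral-7B-Instruct-v0.2-GGUF", "mistral-7b-instruct-v0.2.Q8_0.gguf"), "mistral-7b-instruct-v0.2.Q8_0.gguf")` fetches the same file chosen above.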

Now open the settings; you will need to upload the model you've downloaded.

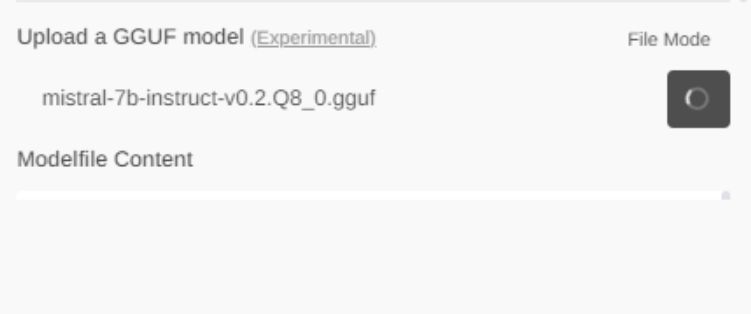

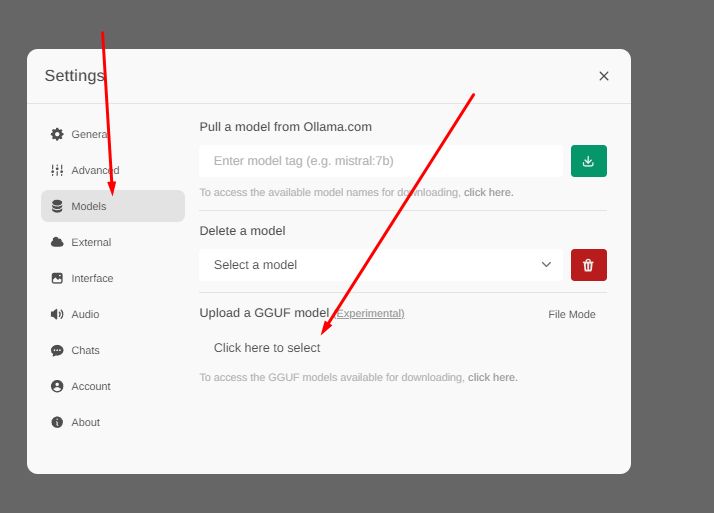

In the Models section, find the GGUF upload form:

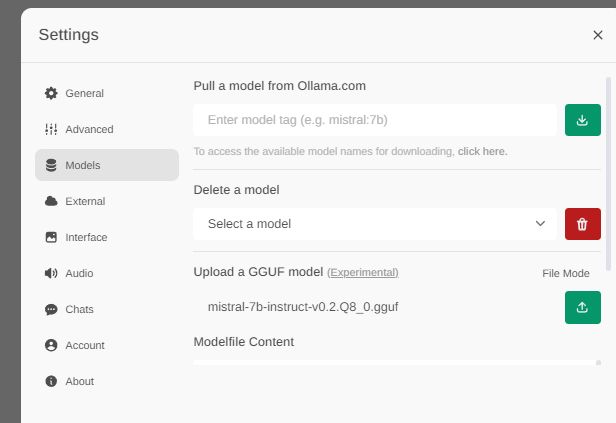

Select the file you want to upload:

Now you need to be very patient: don't close this page, because there is currently no proper progress indicator.

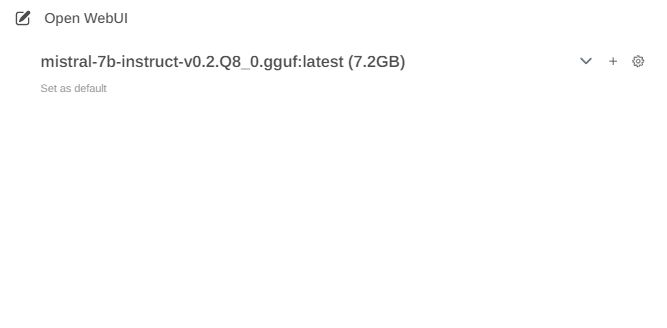

Finally, once the import is done, you will be able to select the new model on the main page:

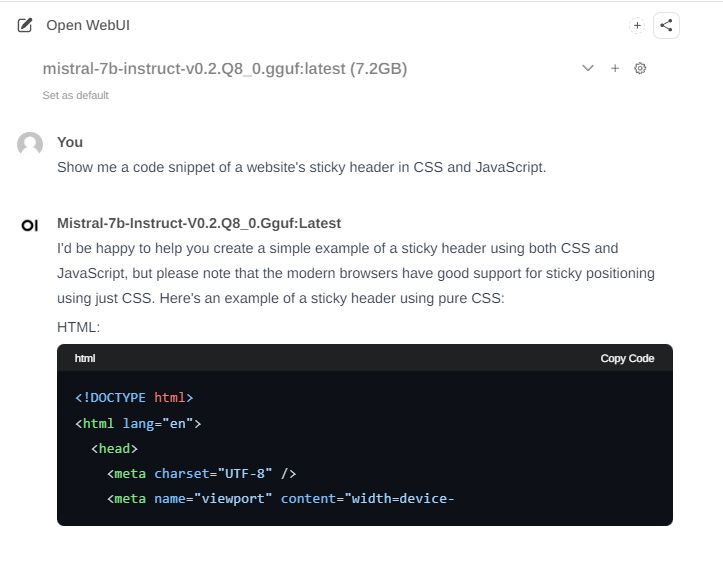

Your model is now ready to run:

And that's all! This way you can run any GGUF model, as long as your hardware can handle it!
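Whether your hardware can handle a model depends mostly on memory. As a rough rule of thumb (an assumption for illustration, not an Open-WebUI requirement), the GGUF file size is a lower bound for the memory the loaded model needs, and the context cache and runtime add more on top. The sketch below encodes that heuristic with a hypothetical 20% headroom; the function names and the headroom value are illustrative, not measured.

```python
import os

def fits_in_memory(model_bytes: int, available_bytes: int, headroom: float = 0.2) -> bool:
    """Rough heuristic: do the weights plus a safety margin fit into the memory budget?"""
    # Assumption: loaded weights take roughly as much memory as the GGUF file on disk.
    # The hypothetical `headroom` fraction stands in for context cache and runtime overhead.
    return model_bytes * (1 + headroom) <= available_bytes

def gguf_fits(path: str, available_bytes: int, headroom: float = 0.2) -> bool:
    """Apply the same heuristic to a downloaded .gguf file."""
    return fits_in_memory(os.path.getsize(path), available_bytes, headroom)
```

For example, `gguf_fits("mistral-7b-instruct-v0.2.Q8_0.gguf", 16 * 1024**3)` asks whether the Q8_0 file is likely to fit in 16 GiB of RAM or VRAM. Treat a `False` as a hint to pick a smaller file from the same repository rather than as a hard limit.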

Have you managed to run some models that way? Which GGUF model is your favourite? Let me know and stay tuned.
