It would seem that today's great AI models are inextricably linked to the cloud, and therefore also to a lack of privacy. Nothing could be further from the truth! Here I will show how you can easily download and run, 100% locally, an interesting alternative to ChatGPT. It comes with a website confusingly similar to ChatGPT's that offers a choice of LLM, chat history and even the ability to interpret uploaded images and files.

All this is offered by a project called Open-WebUI; here is its homepage/repository:

https://github.com/open-webui/open-webui

Open-WebUI installation

There is a "Quick Start With Docker" section in the README, but, as usual, installation turned out to be harder than the project authors make it sound.

Docker is a platform for running applications in containers, which isolate the software from the rest of the system and make it portable.

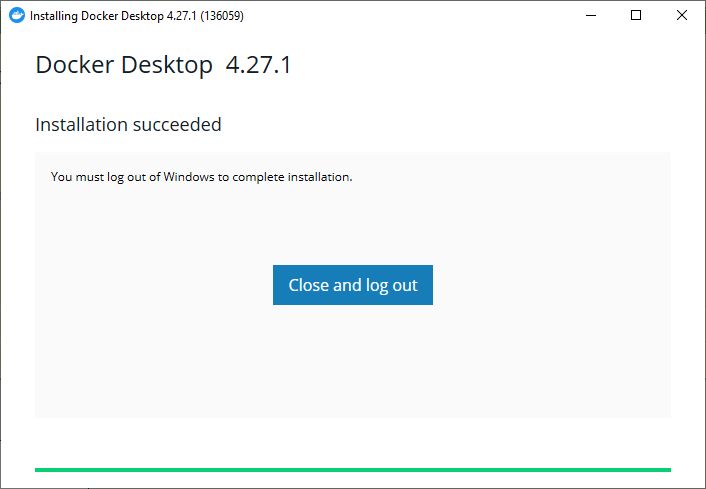

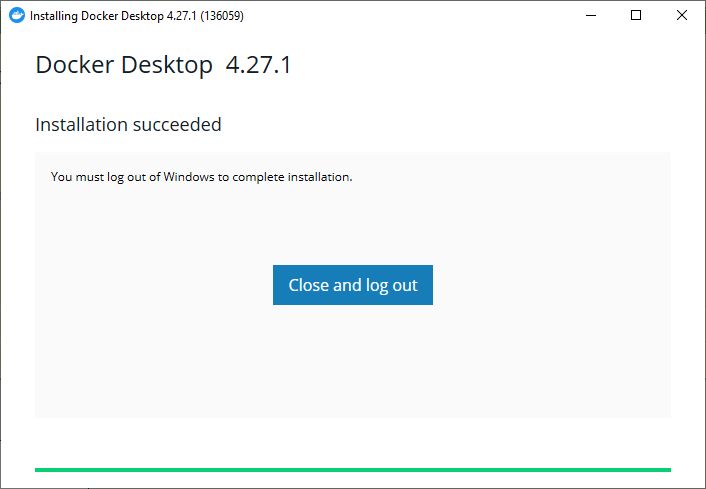

Initially I tried to install Docker Desktop 4.27.1 on my Windows 10 machine:

Unfortunately, it didn't work; the spoiler below explains why:

Spoiler:

It would seem that the installation was successful:

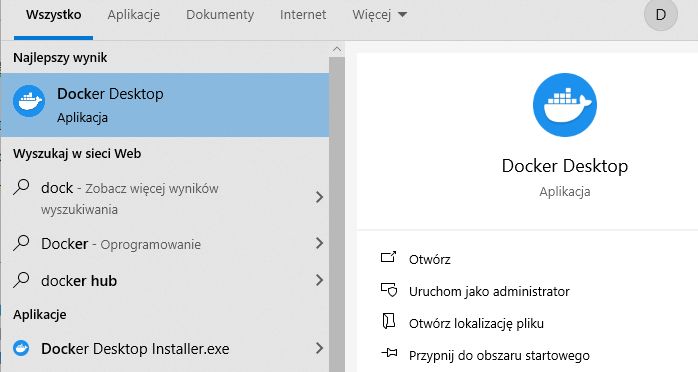

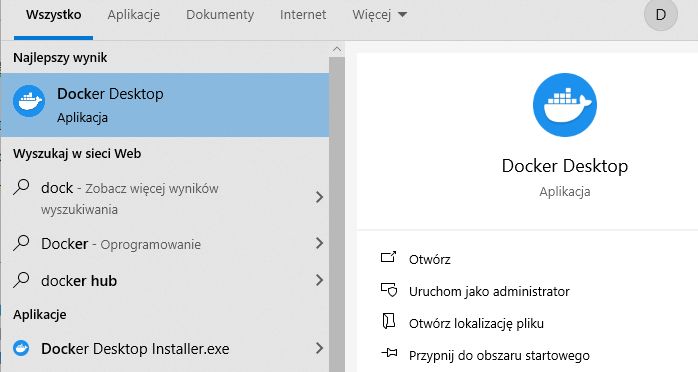

We launch...

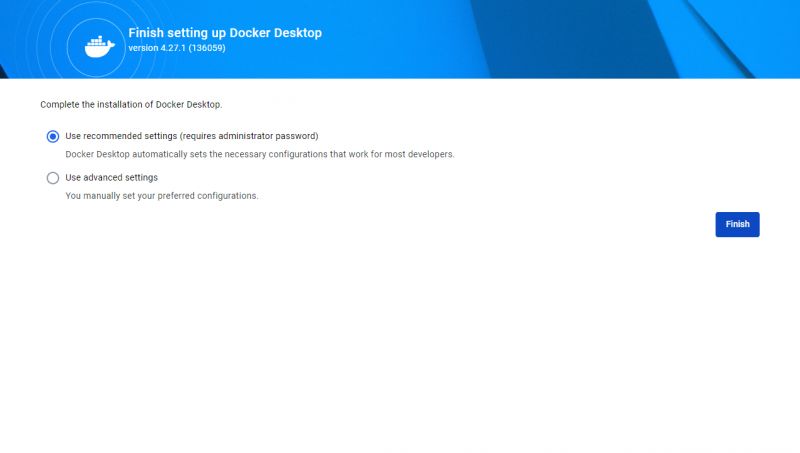

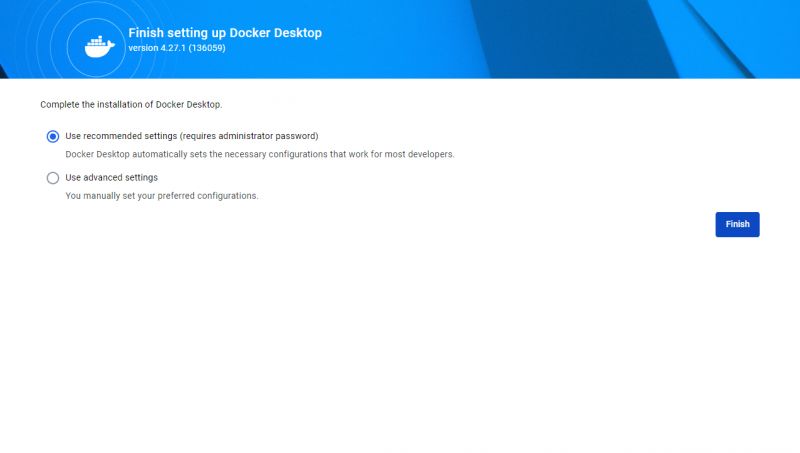

We configure:

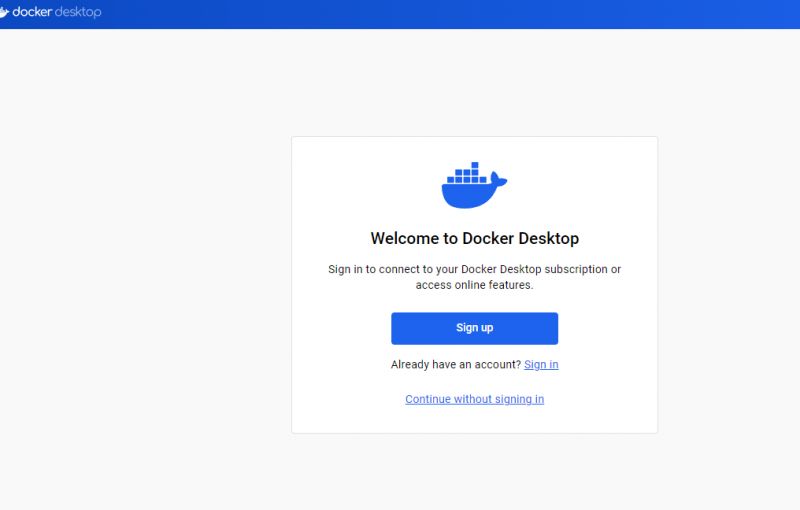

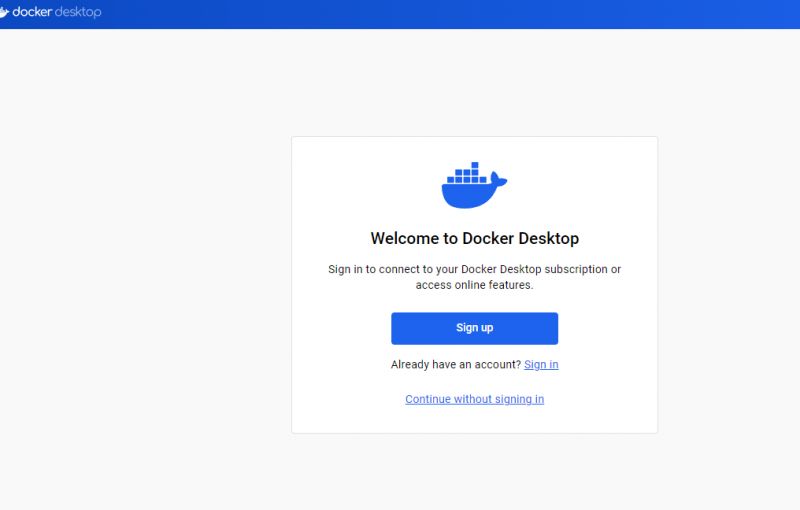

Login is optional:

And here is the problem: something is wrong with WSL (Windows Subsystem for Linux), the Linux layer on Windows:

There are several topics about this on the Docker forum:

https://forums.docker.com/t/updating-wsl-upda...-update-n-web-download-not-supported/138452/9

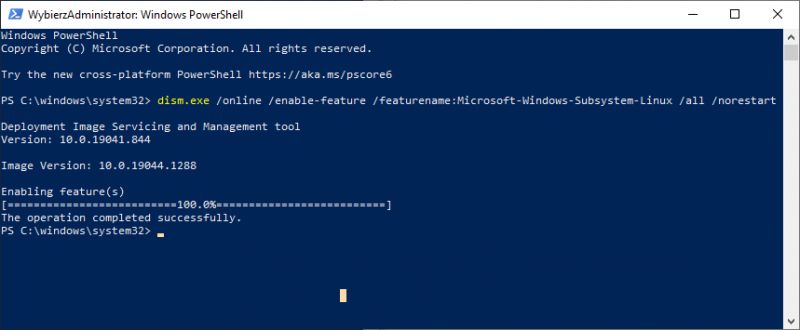

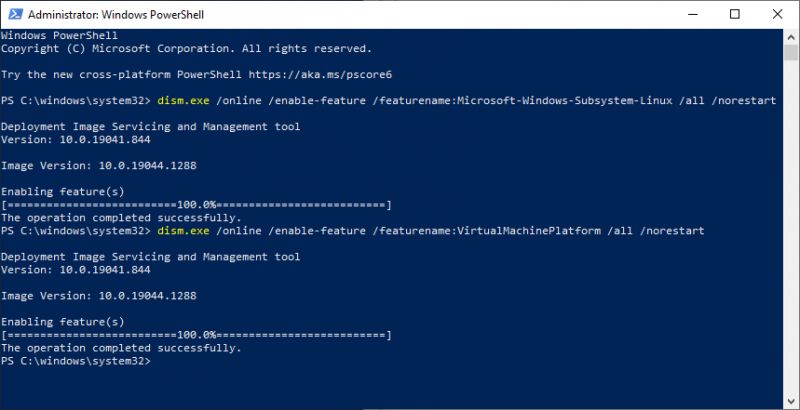

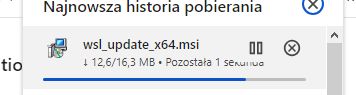

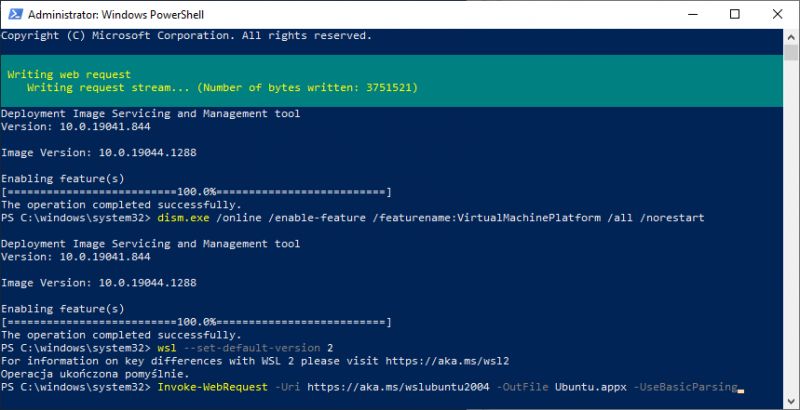

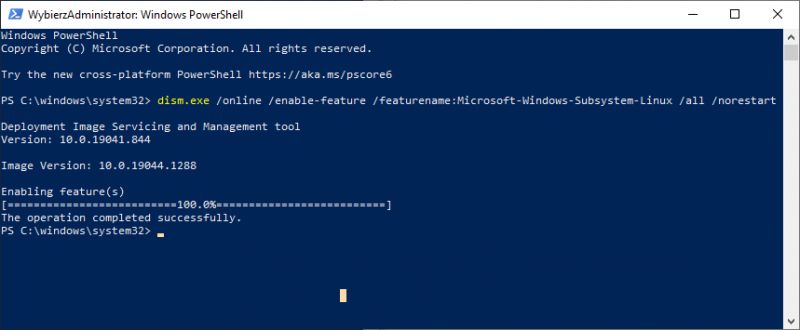

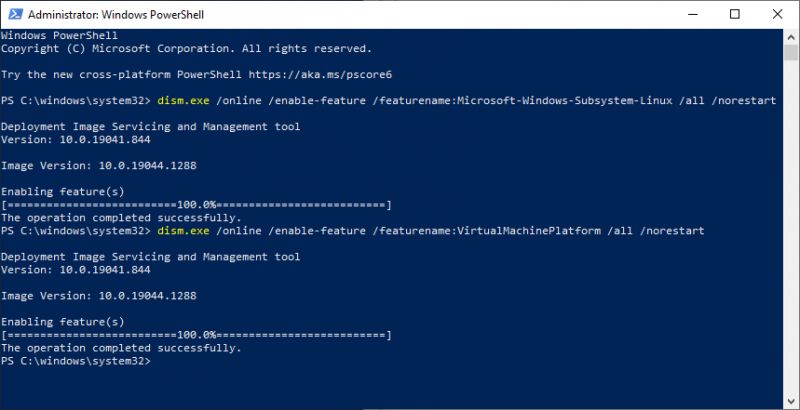

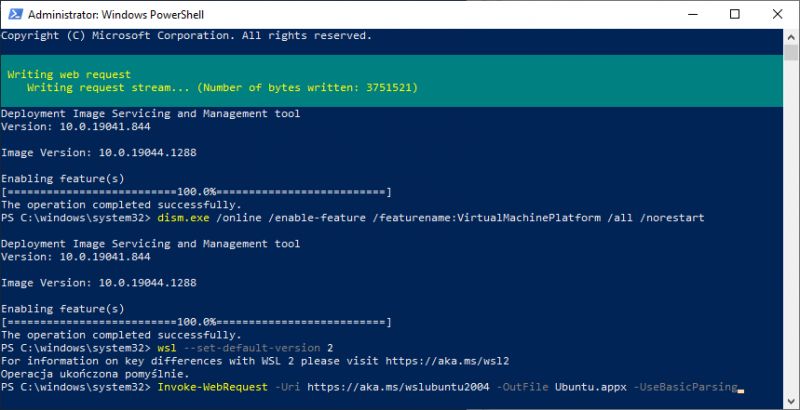

Below are screenshots of my attempts to fix the problem. None of them worked, so I will leave them without comment:

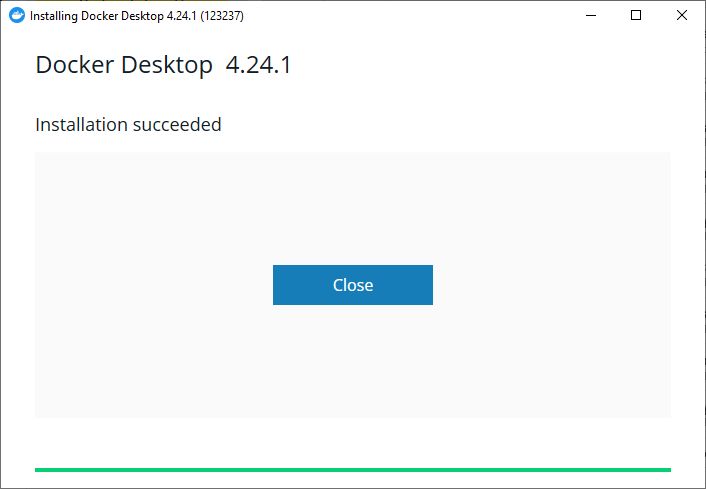

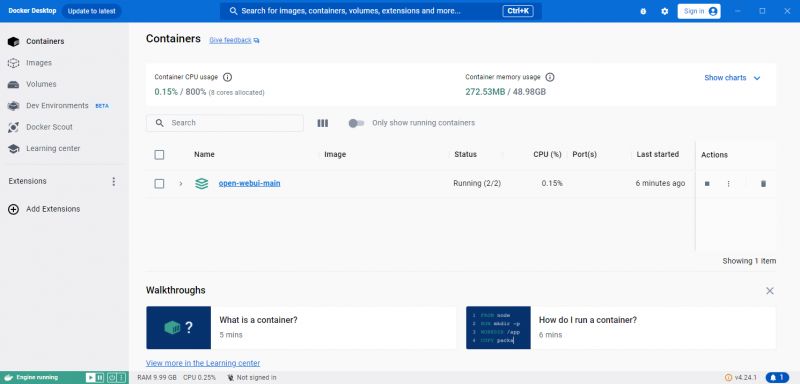

To sum up, Open-WebUI did not work for me on the latest Docker Desktop, so I started trying earlier versions. It turned out that the problem does not occur in 4.24.1:

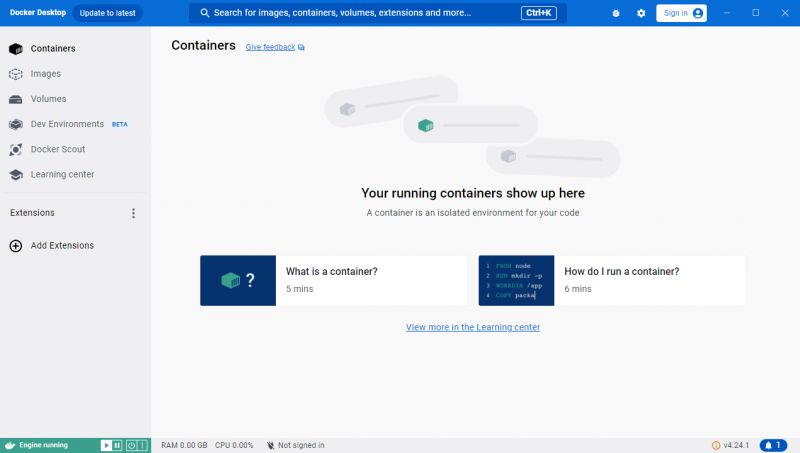

Success: the Docker dashboard appears immediately:

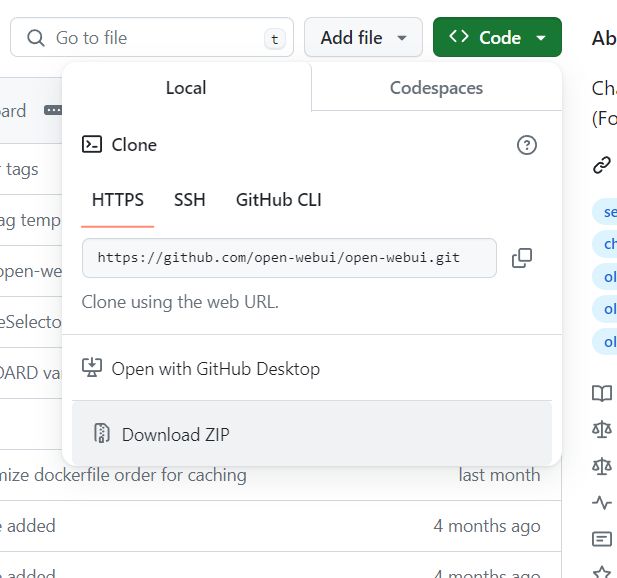

Docker is ready. Now download the repository. A browser is enough for this, since Git is not required. Simply use the "Download ZIP" option:
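If you prefer to script this step, here is a minimal Python sketch using only the standard library. It assumes the branch you want is `main`. GitHub serves a branch's source ZIP at `archive/refs/heads/<branch>.zip`, which is the same file the "Download ZIP" button gives you.

```python
import io
import urllib.request
import zipfile
from pathlib import Path


def archive_url(owner: str, repo: str, branch: str = "main") -> str:
    """GitHub's source-archive address for a branch (what "Download ZIP" links to)."""
    return f"https://github.com/{owner}/{repo}/archive/refs/heads/{branch}.zip"


def download_and_extract(url: str, dest: str) -> Path:
    """Download a ZIP archive into memory and unpack it into dest."""
    with urllib.request.urlopen(url) as resp:
        data = resp.read()
    target = Path(dest)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        zf.extractall(target)
    return target
```

For example, `download_and_extract(archive_url("open-webui", "open-webui"), "open-webui-src")` unpacks the sources into a folder that GitHub names `open-webui-main`.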

https://github.com/open-webui/open-webui

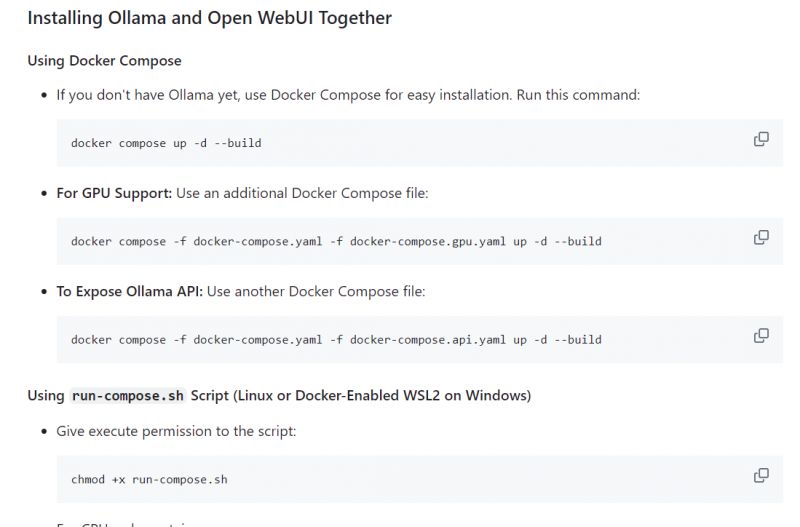

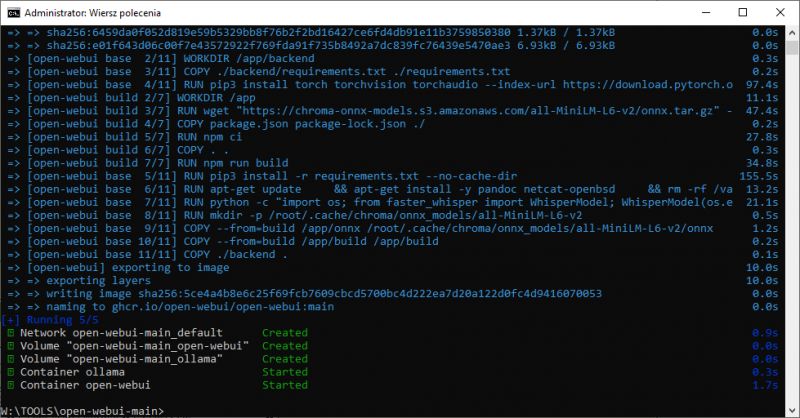

Then we install everything with Docker. I chose the version without GPU support: everything is computed on the CPU, and plenty of RAM is also required.
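At the time of writing, the repository's Docker Compose instructions started the CPU-only stack with `docker compose up -d --build`. The GPU variant layered an extra override file on top. The sketch below wraps that step in Python. The file names `docker-compose.yaml` and `docker-compose.gpu.yaml` are assumptions based on that layout, so check the README of the copy you downloaded.

```python
import subprocess
from pathlib import Path


def compose_command(gpu: bool = False) -> list[str]:
    """Build the docker compose call; the GPU variant adds an override file."""
    cmd = ["docker", "compose", "-f", "docker-compose.yaml"]
    if gpu:
        # Assumption: GPU settings live in a separate override file.
        cmd += ["-f", "docker-compose.gpu.yaml"]
    # -d runs the containers in the background, --build builds the images first.
    return cmd + ["up", "-d", "--build"]


def start_stack(repo_dir: str, gpu: bool = False) -> None:
    """Run docker compose from inside the unpacked repository folder."""
    subprocess.run(compose_command(gpu), cwd=Path(repo_dir), check=True)
```

Call `start_stack` with the path of the unpacked folder. `check=True` makes Python raise an error if Docker reports a failure, instead of continuing silently.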

Finally a flawless installation:

From this point on, Open WebUI should be visible in Docker:

First run

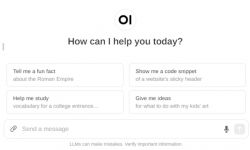

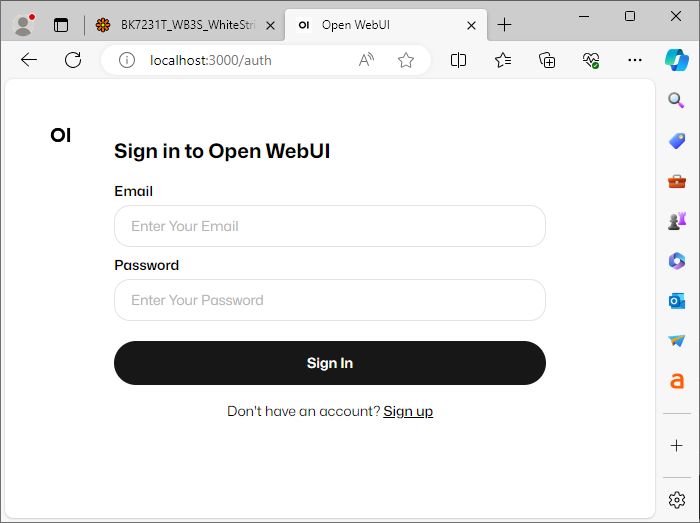

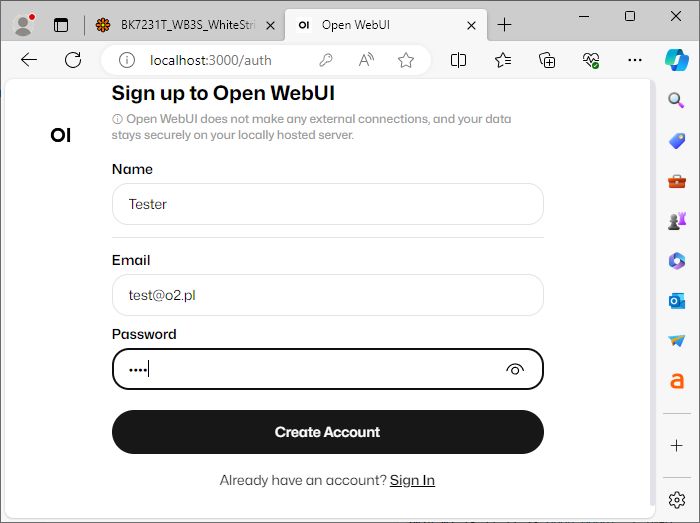

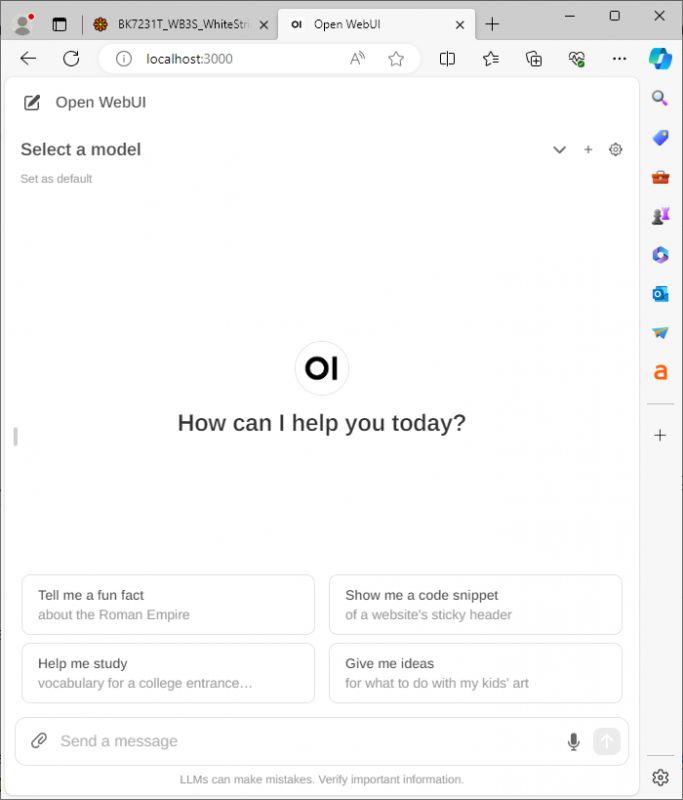

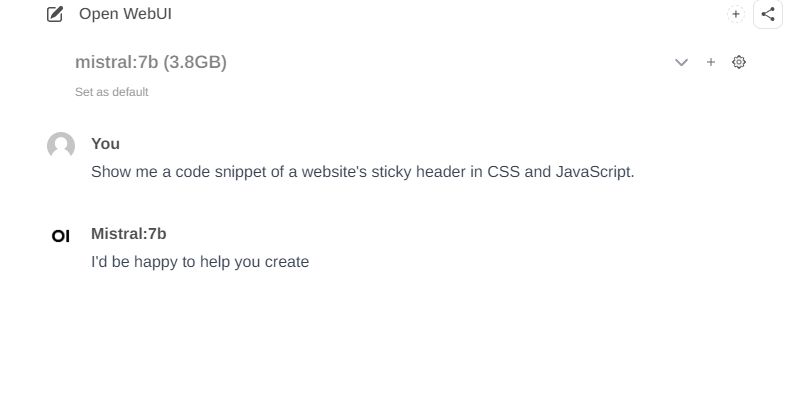

Open WebUI now runs in Docker. Open the web service it provides (our local IP plus the selected port) in a browser to see the web interface for the language models:
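To check from a script that the container is actually serving the page, a small standard-library sketch will do. The IP and port in the usage note are examples only. Use whatever port you mapped in Docker (3000 is a common choice, but that is an assumption about your setup).

```python
import urllib.error
import urllib.request


def webui_url(host: str, port: int) -> str:
    """Address of the web interface: local IP plus the published port."""
    return f"http://{host}:{port}/"


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if a web server answers at url with any HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An error status (4xx/5xx) still means a server is listening.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout or unknown host: nothing is serving yet.
        return False
```

For example, `is_up(webui_url("192.168.1.10", 3000))` returns `False` until the container has finished starting.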

The first login to the WebUI requires creating an account, but the account is created on our own server. Everything stays local:

Then we are greeted by a web panel like the one known from ChatGPT:

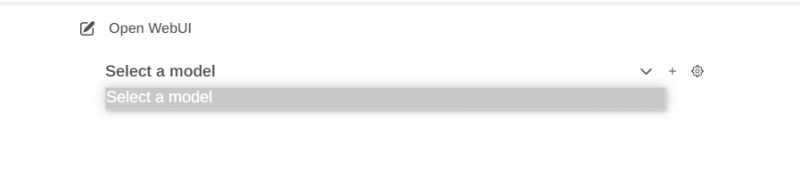

Nothing can be run at this point, because there are no language models yet:

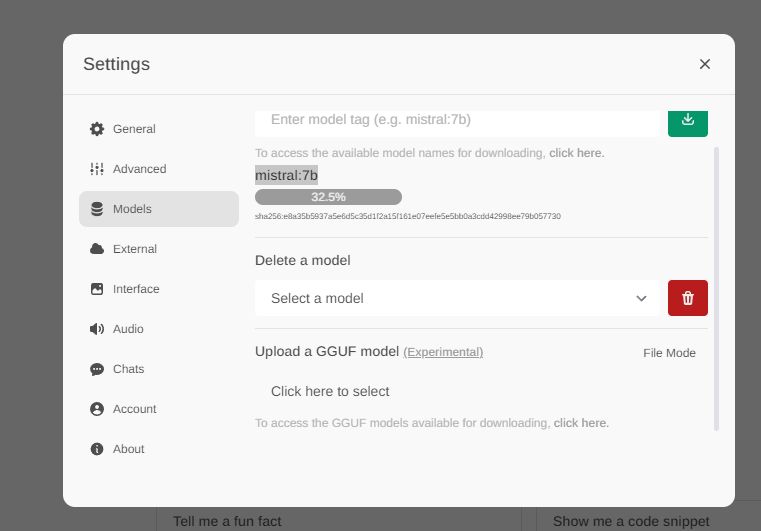

Click the cog icon and download a model.

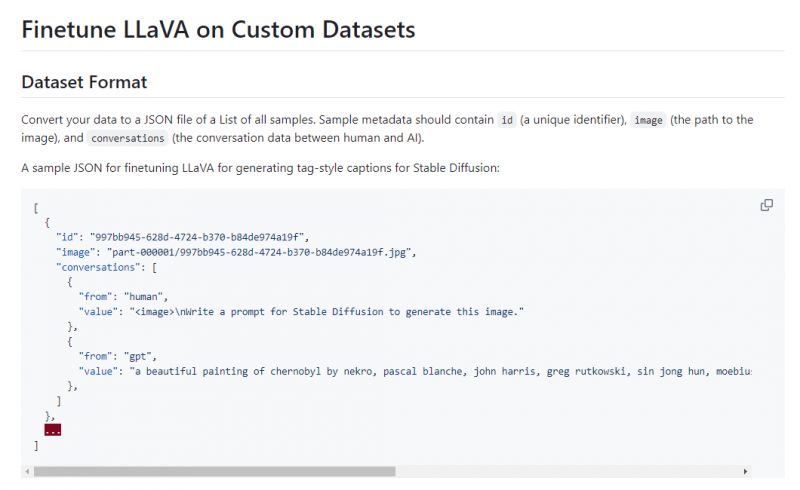

To download a model, search for its name here:

https://ollama.com/library

Then paste it into the download field as follows:
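Names from the Ollama library have the form `name:tag`, for example `mistral:7b`. If you leave the tag out, Ollama uses `latest`. The same names also work with the `ollama pull` command. The helper below is a hypothetical illustration of how such a reference splits up. It is not part of Open WebUI.

```python
def split_model_name(ref: str) -> tuple[str, str]:
    """Split a model reference such as "mistral:7b" into (name, tag).

    Without an explicit tag the default "latest" is assumed,
    e.g. "llava" -> ("llava", "latest").
    """
    ref = ref.strip()
    base, sep, tag = ref.rpartition(":")
    # No colon at all, or the colon belongs to a host:port prefix -> default tag.
    if not sep or "/" in tag:
        return ref, "latest"
    return base, tag or "latest"
```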

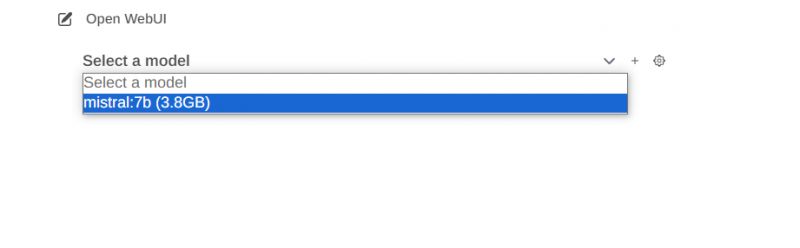

After downloading, the model will appear in the list to select:

You can ask it questions just as you would ask ChatGPT. Its capabilities depend on the model, and so do the RAM requirement and the token generation speed. There are "lighter" and "heavier" models.
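To get a rough feel for the RAM needed before downloading, you can use a back-of-the-envelope rule: the weights take about *parameters × bits per weight / 8* bytes. Open WebUI does not compute this for you. The 20% overhead for the context cache and runtime is an assumed figure. The 4-bit example reflects the quantized variants commonly offered for local use.

```python
def weights_gb(params: float, bits_per_weight: float) -> float:
    """Memory for the model weights alone, in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


def estimate_ram_gb(params: float, bits_per_weight: float = 4,
                    overhead: float = 1.2) -> float:
    """Weights plus an assumed overhead factor for context cache and runtime."""
    return weights_gb(params, bits_per_weight) * overhead
```

Using this rule, a 7B model needs about 3.5 GB for 4-bit weights, compared with about 14 GB at 16 bits. That difference is why quantized models are the practical choice on a CPU-only machine.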

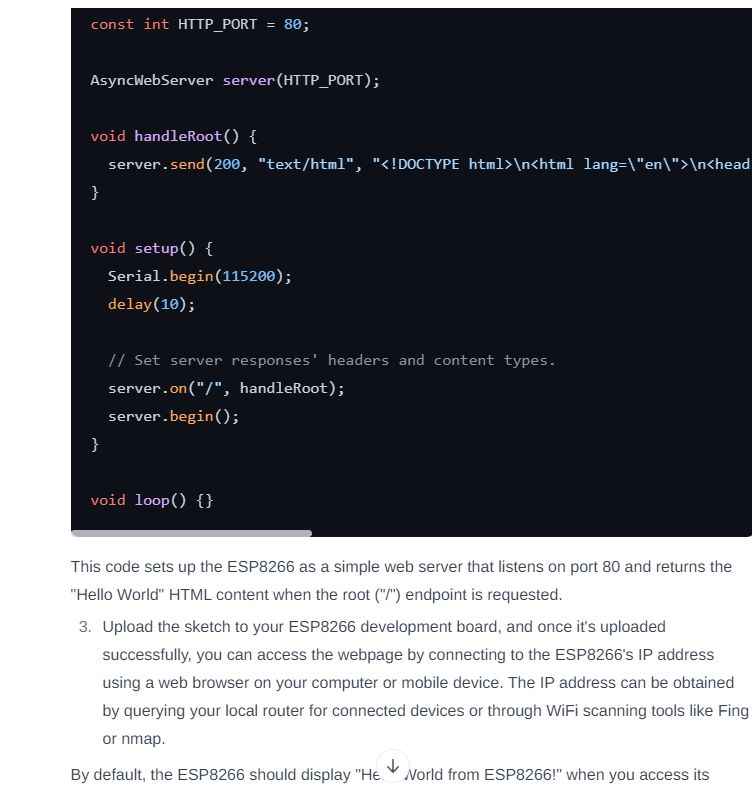

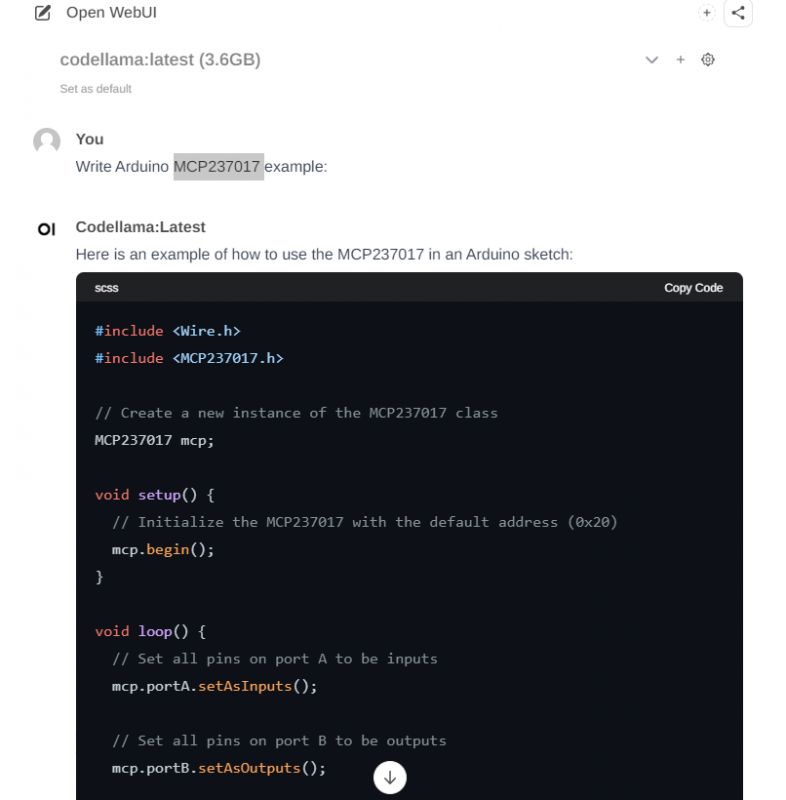

Text and code

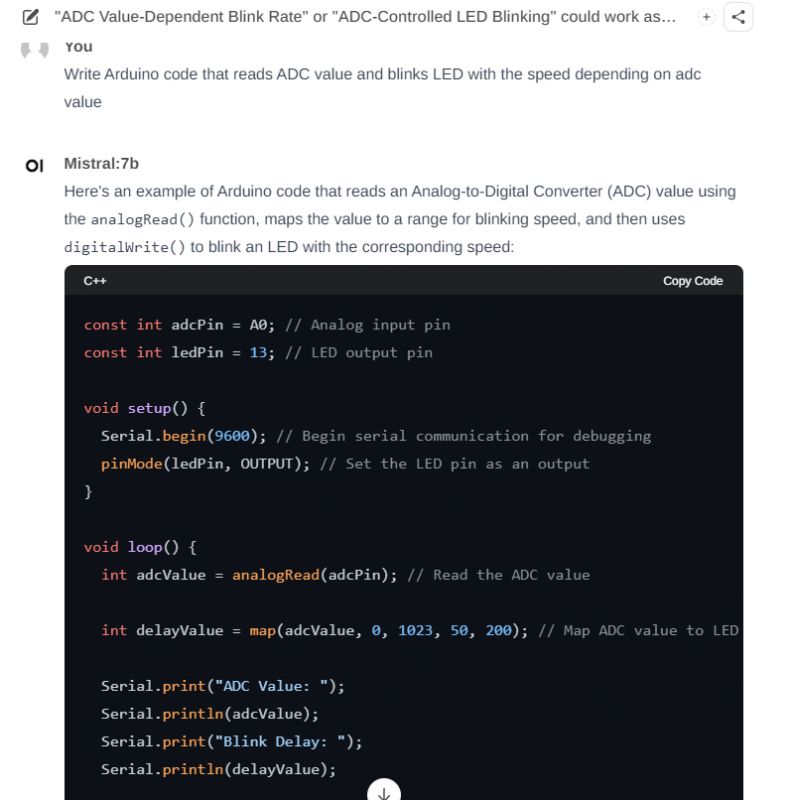

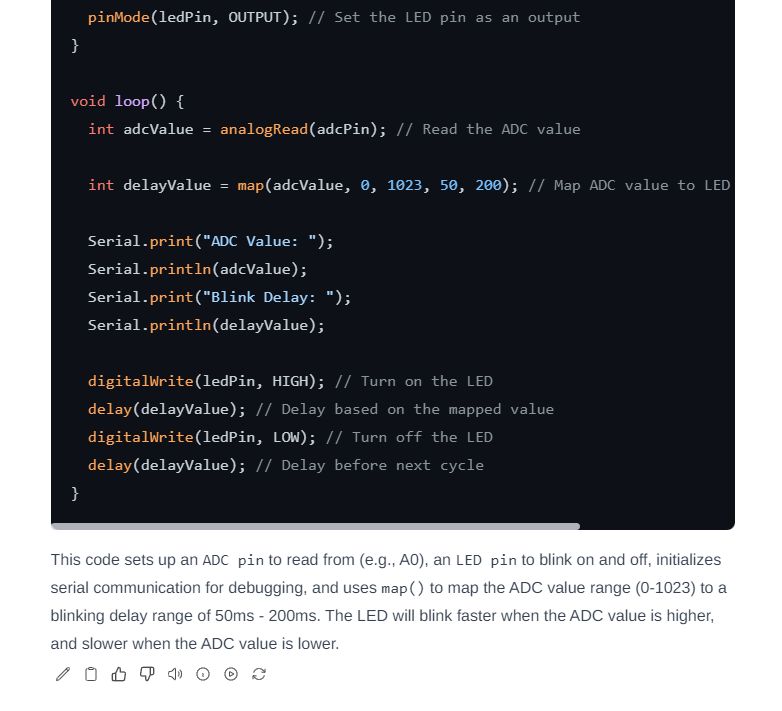

Locally running models are able to generate code and complete text similarly to ChatGPT, although the final results depend on the model chosen. Here are some examples:

The example above, which maps the value read from the ADC to the LED blinking frequency, is very similar to what ChatGPT produces.
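To check such generated code, it helps to know the arithmetic it should implement. Below is that mapping as a Python sketch. It is not the model's microcontroller code. The 10-bit ADC range (0 to 1023) and the 1 to 10 Hz span are assumptions. Arduino's `map()` uses the same linear formula but with integer arithmetic.

```python
def map_range(x: float, in_min: float, in_max: float,
              out_min: float, out_max: float) -> float:
    """Linear rescaling, same formula as Arduino's map() but in floating point."""
    return (x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min


def blink_half_period_ms(adc: int, adc_max: int = 1023,
                         f_min: float = 1.0, f_max: float = 10.0) -> float:
    """Map an ADC reading to a blink frequency and return the LED on (or off) time."""
    freq_hz = map_range(adc, 0, adc_max, f_min, f_max)
    # One full period = LED on + LED off, so each half lasts 1 / (2 f).
    return 1000.0 / (2 * freq_hz)
```

With these assumptions, a reading of 0 gives a 500 ms on/off time (1 Hz) and 1023 gives 50 ms (10 Hz).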

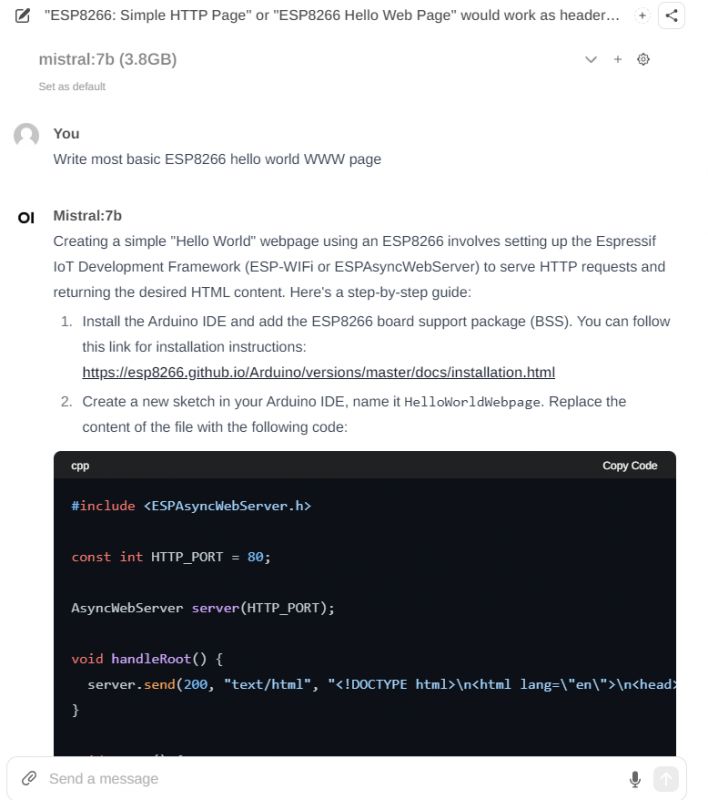

The same goes for other code:

Here it made things up: for example, this link does not lead to an existing page, and the code itself is incomplete at best.

Of course, models are also prone to hallucinations. Here, for example, it writes code for a non-existent chip, MCP237017, a name that came from a typo I made:

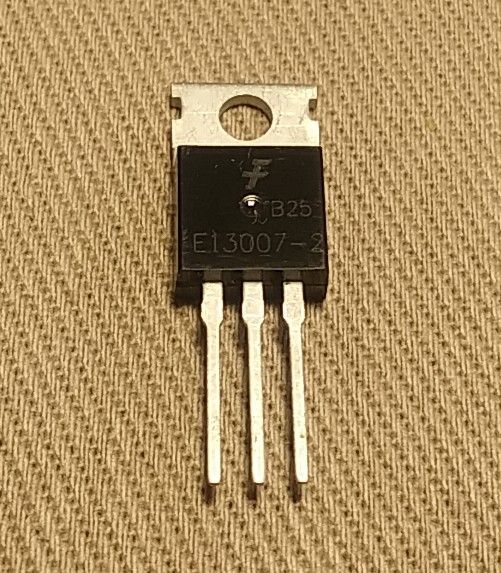

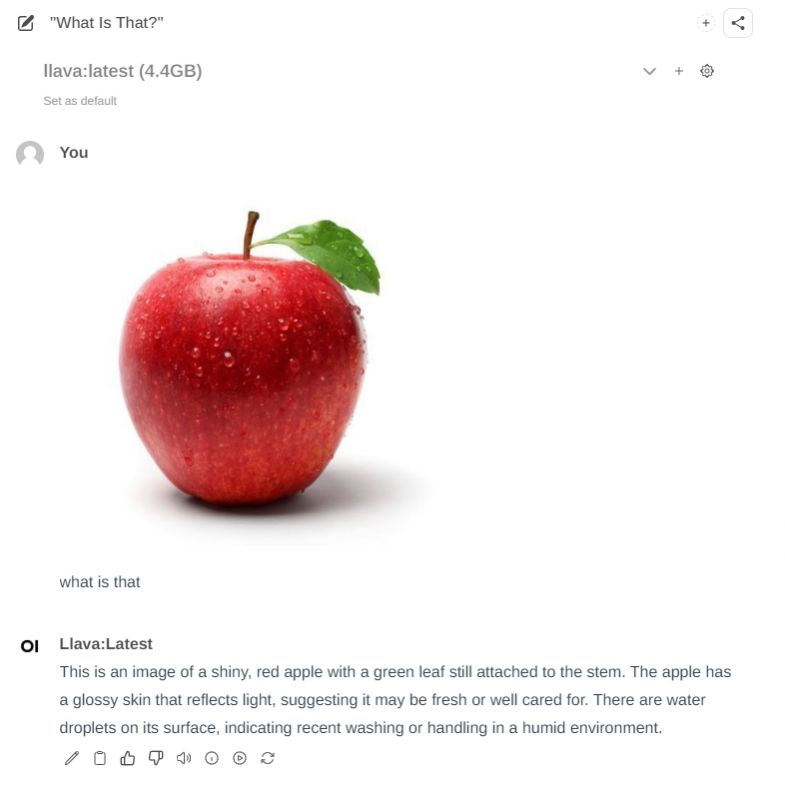

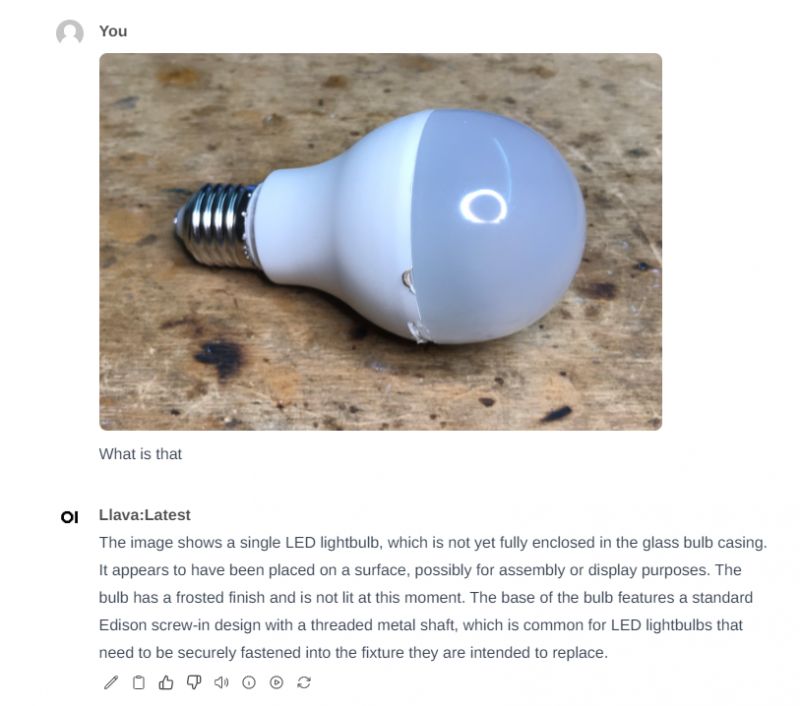

Image analysis

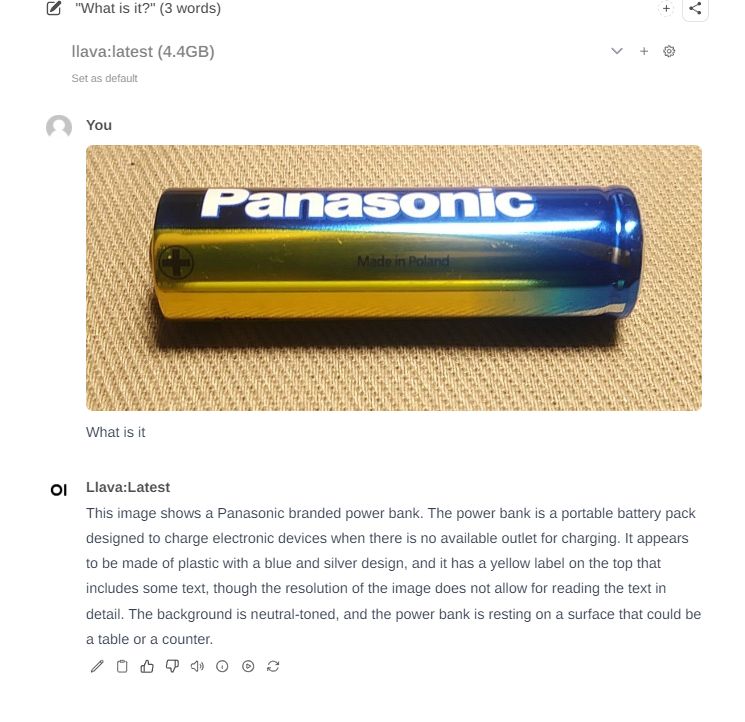

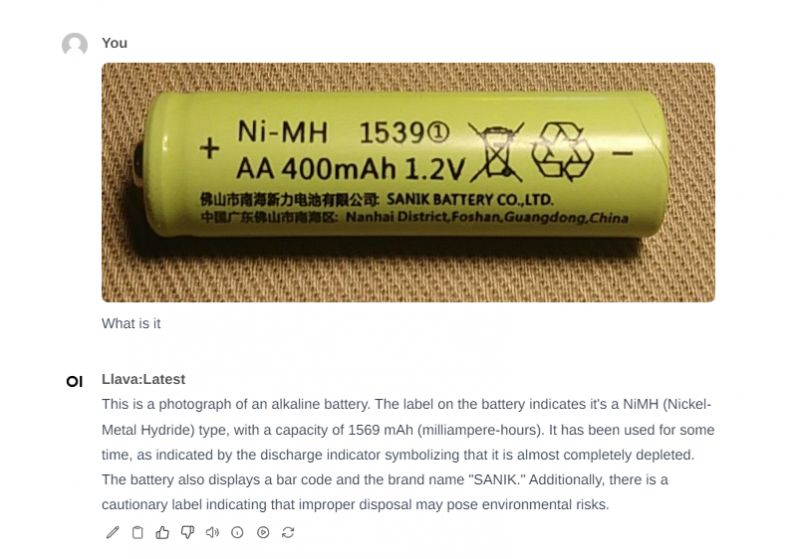

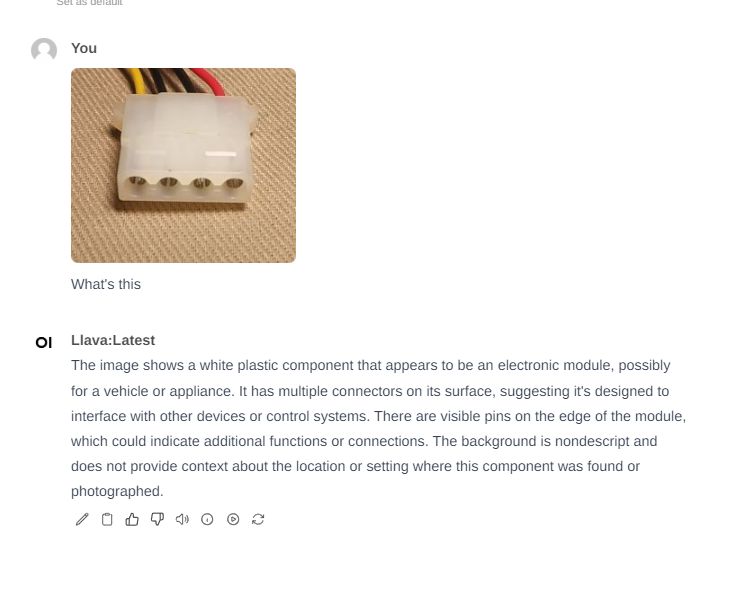

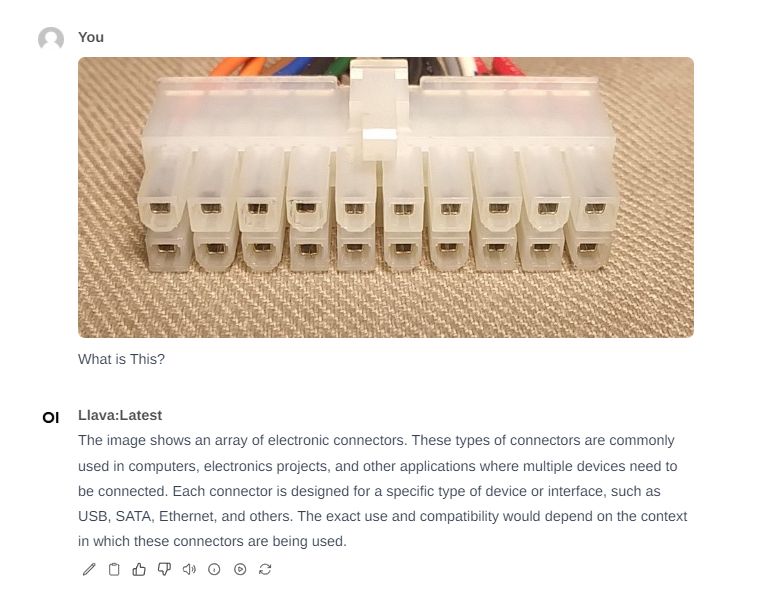

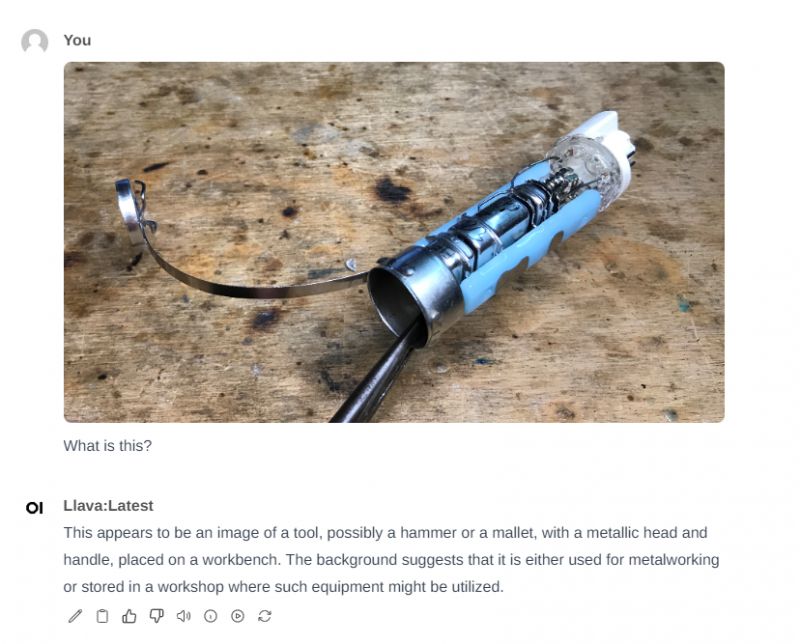

Some models can also describe images. One example is llava, which can, for instance, describe an apple:
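Open WebUI handles the upload for you, but the underlying request is simple. Ollama's `/api/generate` endpoint accepts base64-encoded images in an `images` list for multimodal models such as llava. The sketch below only builds that JSON body. It assumes you can reach the Ollama server directly, by default at `http://localhost:11434/api/generate`. Whether your Docker setup exposes that port is itself an assumption.

```python
import base64
import json


def image_request(prompt: str, image_bytes: bytes, model: str = "llava") -> str:
    """JSON body for Ollama's /api/generate with one attached image."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Images are sent as base64 text, not raw bytes.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # ask for a single JSON reply instead of a stream
    }
    return json.dumps(payload)
```

For example, read a photo with `open("apple.jpg", "rb").read()`, where the file name is hypothetical, and pass it in with a prompt such as "Describe this image."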

It is not afraid of harder tasks either; here it describes an LED "bulb":

Unfortunately, with slightly less popular subjects the model gets lost and hallucinates:

If you would like me to run an image through this model, please post it in the topic. If I have a moment, I will check the results.

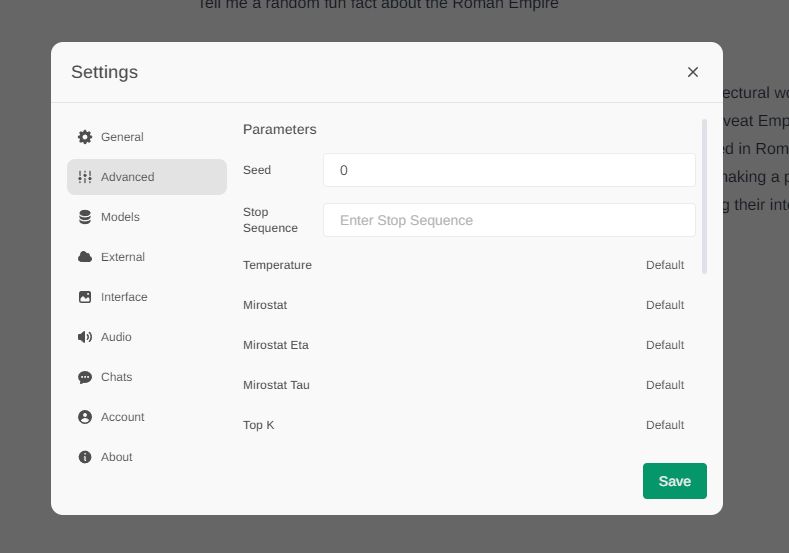

Advanced settings

Open WebUI offers much more than the OpenAI website. Here we can change advanced language model settings such as Temperature, Mirostat, Top K, Stop Sequence, Max Tokens, Context Length and much more. For explanations, please refer to materials available online.
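To give an idea of what two of these knobs do, here is a simplified Python sketch of Top K plus temperature sampling over a model's raw scores (logits). It shows the general technique, not Ollama's actual implementation. The default values are illustrative.

```python
import math
import random
from typing import List, Optional


def sample_top_k(logits: List[float], temperature: float = 0.8, top_k: int = 40,
                 rng: Optional[random.Random] = None) -> int:
    """Choose a token index from raw model scores using Top K and temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if rng is None:
        rng = random.Random()
    # Top K: keep only the k highest-scoring candidate tokens.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Temperature: dividing by T < 1 sharpens the distribution, T > 1 flattens it.
    scaled = [logits[i] / temperature for i in ranked]
    peak = max(scaled)  # subtract the maximum so exp() cannot overflow
    weights = [math.exp(s - peak) for s in scaled]
    # choices() accepts unnormalised weights, so the softmax division is implicit.
    return rng.choices(ranked, weights=weights, k=1)[0]
```

With `top_k=1` the choice is always the single best-scoring token. Larger `top_k` or a higher temperature lets less likely tokens through, which makes answers more varied and more prone to drift.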

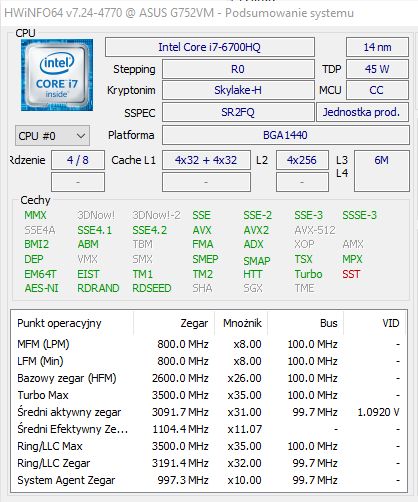

Hardware requirements?

It all depends on whether we use the GPU or CPU version and which model we want to run. In general, though, it is not very fast and it takes up a lot of RAM. Here is a screenshot from my machine after an hour of experimenting:

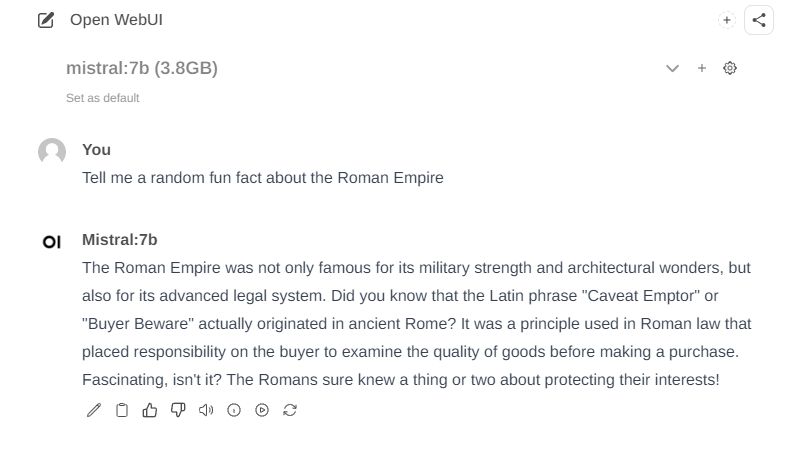

As for the response time, let's measure it, for example on mistral:7b:

The above response took 45 seconds to generate:
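For repeatable measurements, you can query Ollama's API directly instead of timing the web page by hand. A non-streaming reply from `/api/generate` includes `eval_count` (generated tokens) and `eval_duration` (in nanoseconds), which gives tokens per second. The sketch assumes Ollama is reachable at `localhost:11434`, which may not hold for a Docker setup. Extra keyword arguments go into Ollama's `options` field, for example `temperature`, `top_k`, `num_ctx` (context length) or `num_predict` (max tokens).

```python
import json
import time
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumption: adjust to your setup


def build_body(prompt: str, model: str = "mistral:7b", **options) -> bytes:
    """JSON request body; keyword arguments become Ollama "options"."""
    return json.dumps({"model": model, "prompt": prompt,
                       "stream": False, "options": options}).encode("utf-8")


def generate(prompt: str, model: str = "mistral:7b", **options) -> dict:
    """Send one request and record the wall-clock time it took."""
    req = urllib.request.Request(OLLAMA_URL, data=build_body(prompt, model, **options),
                                 headers={"Content-Type": "application/json"})
    start = time.perf_counter()
    with urllib.request.urlopen(req) as resp:
        result = json.load(resp)
    result["wall_seconds"] = time.perf_counter() - start
    return result


def tokens_per_second(result: dict) -> float:
    """eval_count tokens were produced in eval_duration nanoseconds."""
    return result["eval_count"] / (result["eval_duration"] / 1e9)
```

For example, `r = generate("Explain PWM briefly.", temperature=0.7)` followed by `print(r["wall_seconds"], tokens_per_second(r))` prints both figures.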

Summary

These free local equivalents will not beat the commercial language models, but they offer more than a mere substitute for the popular ChatGPT, and they run entirely locally. There are also plenty of models to choose from. They offer many parameters and tuning options, so they can be adapted to our needs. In the future I intend to compare them more thoroughly and explore their capabilities. I don't yet know how to go about it, though, and the huge selection of downloadable LLMs doesn't make it easier.

Installing the whole thing is quite simple as long as we avoid the "trap" of the newest Docker version, which I described at the beginning. By following my instructions, you should be able to install everything without problems.

However, I don't know how it runs on weaker hardware. If anyone wants to experiment, I invite you to test it and share your results!

Have you tried running LLMs locally? If so, what were the results? I invite you to join the discussion.
