They are graphic designers on UBI (universal basic income). All in all, I'm slowly getting ready too.

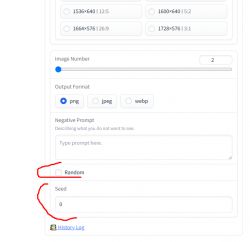

andrzejlisek wrote: A general question. Can this whole offline generator work deterministically? Is it possible to configure it (e.g. by specifying a random seed) so that if I submit an image prompt I get an image, and if I later submit the same prompt I get the same image, not a different variant of an image representing the same thing?

Normally this uses pseudo-random numbers and the result is different every time it is called, as intended. Is it possible to control this, i.e. to eliminate the pseudo-randomness or to start from the same seed every time?
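
To illustrate the idea behind the question, here is a minimal toy sketch in plain Python. It is not the real generator: `initial_noise` and `toy_generate` are hypothetical names made up for this example. It only shows the principle that a diffusion-style generator starts from pseudo-random noise, so fixing the seed fixes the starting noise and therefore the result. In the Stable Diffusion web UI this corresponds to the Seed field, where -1 means "pick a random seed". On real hardware, small differences may still appear between GPUs, drivers or library versions.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Draw the starting noise from a generator seeded with `seed`."""
    rng = random.Random(seed)  # private RNG, independent of global state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def toy_generate(prompt: str, seed: int) -> list[float]:
    """Hypothetical stand-in for an image generator.

    The "image" is a deterministic function of the prompt and the
    seeded noise, so the same (prompt, seed) pair always gives the
    same output.
    """
    noise = initial_noise(seed)
    # Derive a small prompt-dependent offset without using hash(),
    # because str hashes are randomized between Python processes.
    bias = (sum(prompt.encode("utf-8")) % 7) / 10
    return [round(x + bias, 6) for x in noise]


if __name__ == "__main__":
    first = toy_generate("fiat 126p stopped by a police polonez", seed=1234)
    second = toy_generate("fiat 126p stopped by a police polonez", seed=1234)
    other = toy_generate("fiat 126p stopped by a police polonez", seed=1235)
    print("same seed, same result:", first == second)
    print("different seed, same result:", first == other)
```

So the answer to "can I start with the same seed every time" is, in principle, yes: keep the prompt, the seed and all other settings (steps, sampler, resolution, guidance scale) unchanged.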

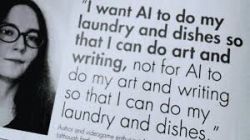

gulson wrote: Yesterday they released a real bombshell:

https://openai.com/index/introducing-4o-image-generation/

Sensational generation of captions, postcards, infographics, icons etc.

Graphic designers hate them.

gulson wrote: They are graphic designers on UBI (universal basic income). All in all, I'm slowly getting ready too.

p.kaczmarek2 wrote: @andrzejlisek And have you tried generating the image first and then upscaling it as a separate step?

p.kaczmarek2 wrote: And have you studied the effect of guidance scale on the images produced? This also has interesting effects.

p.kaczmarek2 wrote: There's also a second way to improve the image: if you don't want to increase the resolution but want to improve a selected part of the image, inpainting has the "Improve detail" option. You give it the image as input, mark with the brush what to improve, and it improves that area.

p.kaczmarek2 wrote: That is, if you use fewer steps you get a "sketch" of the image, which you can then render in better quality, yes? Interesting.

p.kaczmarek2 wrote: Are you investigating the effect of guidance scale on the images created? This also gives interesting results.
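
For readers wondering what guidance scale does, here is a small sketch of the classifier-free guidance formula commonly used in diffusion models. It is an illustration only: `apply_guidance` is a hypothetical helper, and the short lists stand in for the noise predictions a real model would produce with and without the prompt. Which values work best in a particular generator is something to test by experiment.

```python
def apply_guidance(uncond: list[float], cond: list[float],
                   guidance_scale: float) -> list[float]:
    """Combine unconditional and prompt-conditioned predictions.

    guided = uncond + guidance_scale * (cond - uncond)

    A scale of 0 ignores the prompt, 1 uses the conditioned prediction
    unchanged, and larger values push the result further towards the
    prompt.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]  # toy prediction without the prompt
    cond = [1.0, 3.0]    # toy prediction with the prompt
    for scale in (0.0, 1.0, 7.5):
        print(scale, apply_guidance(uncond, cond, scale))
```

A higher scale usually means the image follows the prompt more literally, at the cost of variety and sometimes of natural-looking detail.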

Quote:

Create an image in the style of a photograph. The scene takes place in communist times: a Fiat 126p has been stopped by a police Polonez. In the background you can see the Palace of Culture. Pay attention to the correct livery of the police car and the correct number plates of both cars.

Quote:

Create an image in the style of a photograph. The scene takes place in communist times: an Arduino UNO has been stopped by a police Polonez. In the background you can see the Palace of Culture. Pay attention to the correct livery of the police car and the correct number plates of both cars.

Quote:

Create an image in the style of a photograph. The scene takes place in communist times: an Arduino UNO has been stopped by a police Polonez. In the background you can see the Palace of Culture. Pay attention to the correct livery of the police car and the correct number plates of both cars. All the action takes place underwater.

p.kaczmarek2 wrote: You can play around.

p.kaczmarek2 wrote: Does this generator also understand complex relations?

sudo apt install nvidia-driver-535

sudo apt install git python3 python3-venv python3-pip

sudo apt install python3.9 python3.9-venv

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

cd stable-diffusion-webui

python3.10 -m venv venv

source venv/bin/activate

python3.9 launch.py

python3.9 launch.py --medvram --lowvram --xformers

python3.9 launch.py --skip-torch-cuda-test --no-half --precision full --use-cpu all

gemini-3-pro-image-preview (nano-banana-pro)