Here I will show how to use ChatGPT from an ESP8266: not directly, of course, but through the OpenAI API. I will demonstrate simple code that sends the appropriate request to the API over HTTPS, and cover passing the API key and handling the JSON format. The result is the ability to query the famous chatbot directly from the ESP, without a computer or even a Raspberry Pi.

This topic is meant as a starting point for anyone who wants to do some DIY with OpenAI language models on the ESP8266. I will cover the absolute basics here and maybe show something more advanced in the future.

OpenAI API

OpenAI language models are available through the API, i.e. we only send a request to the OpenAI servers describing what the language model should do, and then we receive its response. Of course we don't run ChatGPT on our own hardware; the model is not even downloadable.

The OpenAI API is very rich and offers various functions (and prices, depending on which language model we are interested in), but there is no point in copying their documentation here. An overview is available here:

https://openai.com/blog/openai-api

Detailed documentation is available here:

https://platform.openai.com/docs/api-reference/making-requests

A quick read tells us what we have to do. We need to send a POST request with the following headers:

- Content-Type: application/json

- Authorization: Bearer OUR_KEY

- Content-Length: JSON_LENGTH (it's worth adding this as a rule)

and content:

Code: JSON

on endpoint:

https://api.openai.com/v1/chat/completions
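To make the list above concrete, here is a minimal sketch in portable C++ of how such a request looks once assembled as text. The helper name buildChatRequest is hypothetical, and the Host and Connection headers are my additions (HTTP/1.1 requires Host; an HTTP client library on the ESP8266 normally writes these lines for you).

```cpp
#include <string>

// Builds the raw text of the POST request described above.
// buildChatRequest is a hypothetical helper name, not part of any library.
std::string buildChatRequest(const std::string& apiKey, const std::string& jsonBody) {
    std::string req;
    req += "POST /v1/chat/completions HTTP/1.1\r\n";
    req += "Host: api.openai.com\r\n";            // assumption: required by HTTP/1.1
    req += "Content-Type: application/json\r\n";
    req += "Authorization: Bearer " + apiKey + "\r\n";
    // Content-Length is the size of the body in bytes.
    req += "Content-Length: " + std::to_string(jsonBody.size()) + "\r\n";
    req += "Connection: close\r\n";               // assumption: one request per connection
    req += "\r\n";                                // empty line separates headers from body
    req += jsonBody;
    return req;
}
```

The text returned by this function still has to be sent over an encrypted (TLS) connection; the sketch only shows where the key, the length and the JSON end up.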

The model field selects the language model we use. The list of available models is here (this request also requires an API key):

https://api.openai.com/v1/models

so you can fetch it with curl, for example:

curl https://api.openai.com/v1/models \
  -H "Authorization: Bearer $OPENAI_API_KEY"

The temperature parameter takes values in the range [0, 2]: the higher it is, the more random the responses; the lower it is, the more deterministic.

After sending this request, we receive a response that looks something like this:

Code: JSON

Everything looks very nice, so what's the catch?

The catch is a small one: this API is paid. We pay per token, so the more we use, the more we pay. Details here:

https://openai.com/pricing

Tokens are similar to words, but they are not words: according to OpenAI itself, 1000 tokens is about 750 words.

Prices vary by model; here are the GPT-4 prices:

ChatGPT (gpt-3.5-turbo) prices are lower:

There are also other models:

Board used

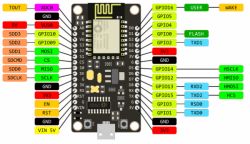

For this project I used a NodeMCU v3 CH340 board, which can be purchased for as little as PLN 17:

Visual Studio Code and PlatformIO, first steps

For the project I decided to use Visual Studio Code with PlatformIO; in my opinion it is a more convenient and responsive environment than the Arduino IDE.

https://platformio.org/

We install Microsoft's Visual Studio Code, a free environment based on MIT-licensed code, and then the PlatformIO (PIO) extension.

Once everything is installed, you can start with an empty project:

Code: text

Here is my platformio.ini, for those who don't know how to configure our board:

; PlatformIO Project Configuration File
;
; Build options: build flags, source filter
; Upload options: custom upload port, speed and extra flags
; Library options: dependencies, extra library storages
; Advanced options: extra scripting
;
; Please visit documentation for the other options and examples
; https://docs.platformio.org/page/projectconf.html

[env:nodemcuv2]
upload_speed = 115200
monitor_speed = 115200
platform = espressif8266
board = nodemcuv2
framework = arduino
lib_deps = bblanchon/ArduinoJson@^6.21.1

The result, everything works:

You could add ArduinoOTA here and upload the firmware over WiFi, but this topic is about the OpenAI API, so let's stick to that.

ESP8266 and HTTPS

The first problem we run into is the need to use the encrypted version of HTTP, i.e. HTTPS. This makes things a bit harder: I would probably not manage to establish an encrypted connection on my favorite PIC18F67J60. Fortunately, HTTPS is supported on the ESP8266 and there are ready-made examples for it. Below is one of them:

Code: C / C++

I simplified things a bit here by running the client in a mode that does not verify the server certificate:

Code: C / C++

but I guess for a small hobby project/demonstration this is not a problem.

What does this program do? Basically two things.

In setup we connect to our WiFi network:

Code: text

In loop, every two minutes, we try to connect to the sample page over HTTPS and print the response we get. Here we send a GET request.
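A fixed interval like this can be implemented with a blocking delay or with a timestamp comparison. Below is a minimal sketch of the second variant; the helper name intervalElapsed is hypothetical, and on the ESP8266 the current time would come from millis(). It is passed in as a parameter here so the logic can be checked off-device.

```cpp
#include <cstdint>

// Returns true once intervalMs milliseconds have passed since lastRunMs,
// and then stores the current time in lastRunMs.
// intervalElapsed is a hypothetical helper name.
bool intervalElapsed(uint32_t nowMs, uint32_t& lastRunMs, uint32_t intervalMs) {
    // Unsigned subtraction stays correct when the millisecond counter wraps.
    if (nowMs - lastRunMs >= intervalMs) {
        lastRunMs = nowMs;
        return true;
    }
    return false;
}
```

With lastRunMs starting at 0 and intervalMs set to 120000, the first request would go out two minutes after boot; whether that or an immediate first request is preferable is a design choice.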

Code: text

The page used here as an example reports, in JSON format, which encryption standards our connection supports:

https://www.howsmyssl.com/a/check

You can also visit this page normally:

https://www.howsmyssl.com/

The program works:

When I visit the site from a browser, I am told that my browser supports TLS 1.3. With the ESP platform used here it is only TLS 1.2, but this is not a problem at the moment.

JSON support

As we already know, we will need the JSON format, both when sending data to the server and when receiving it. So we need to be able to put data into JSON and then extract data from it.

JSON could be written in a partly hard-coded way, with String operations or one big sprintf, but there are libraries for everything here, so why complicate it?

We add the ArduinoJson library via PlatformIO:
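For comparison, here is a minimal sketch of the hard-coded approach in portable C++. The helper names jsonEscape and buildBody are hypothetical, and the body layout (model, messages with role and content, temperature) follows the chat completions request format. It shows the two things a library handles for us: escaping and buffer sizing.

```cpp
#include <cstdio>
#include <string>

// Escapes the characters that most often break a JSON string literal.
// Hypothetical helper; other control characters are not handled in this sketch.
std::string jsonEscape(const std::string& in) {
    std::string out;
    for (char c : in) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n";  break;
            case '\r': out += "\\r";  break;
            case '\t': out += "\\t";  break;
            default:   out += c;      break;
        }
    }
    return out;
}

// Builds a request body with a single user message using one snprintf call.
// Returns an empty string if the result does not fit into the buffer.
std::string buildBody(const std::string& model, const std::string& prompt, double temperature) {
    const std::string safePrompt = jsonEscape(prompt);
    char buf[512];
    const int n = std::snprintf(buf, sizeof(buf),
        "{\"model\":\"%s\",\"messages\":[{\"role\":\"user\",\"content\":\"%s\"}],\"temperature\":%.1f}",
        model.c_str(), safePrompt.c_str(), temperature);
    // snprintf reports the length it needed; treat truncation as failure.
    if (n < 0 || n >= static_cast<int>(sizeof(buf))) return std::string();
    return std::string(buf, static_cast<std::size_t>(n));
}
```

It works for simple prompts, but every new field means editing the format string by hand, which is a good argument for using a library instead.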

We include the header:

Code: text

We want to create the following JSON text:

Code: JSON

We have everything here: ordinary key-value pairs, an array, and objects.

But with ArduinoJson, everything is very simple:

Code: text

As you can see, we set values with the [] operator, create an array with the createNestedArray function, and create an object inside it with the createNestedObject function. All very convenient, but how do we convert it to text?

Code: text

And that's it: after calling this function, jsonString holds the JSON text representing the structure created earlier.

The only question is, is it possible to go the other way? From text to objects? Of course, like this:

Code: text

First OpenAI API request

It's time to put it all together. We already have the basics and a working GET over HTTPS.

GET was sent like this:

Code: text

This can easily be converted to POST:

Code: text

The only difference is that POST requires the request body: a pointer to the data and its length.

We will put our JSON string there, the one whose generation I described earlier:

Code: text

It still needs to be generated, as in the previous section:

Code: text

But that is not everything. You still need to set the request header fields. Among them is our API key:

Code: text

We also set the header content type to JSON.

In fact, that's enough to get the first results.

Here is the entire code, for reference:

Code: text

Please remember to fill in these lines:

Code: text

We run it and get the first results:

Here are some responses received in text form:

Code: JSON

Code: JSON

Code: JSON

Without additional configuration, the ideas are a bit repetitive. Here's the second one about a remote-controlled car:

Code: JSON

The response also includes the number of tokens used, which lets us better estimate how much a given query cost us. The created field is a Unix epoch timestamp specifying when the response was generated.

Improving the OpenAI API request

It is still worth handling the received response properly. As I wrote, it is in JSON format, so it can easily be read into convenient data structures:
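As an illustration of the created field, here is a minimal sketch in portable C++ that turns a Unix epoch timestamp into readable UTC text. The helper name formatCreated is hypothetical, and the sample value used below is an example, not one taken from the responses above.

```cpp
#include <ctime>
#include <string>

// Formats a Unix epoch timestamp (seconds since 1970-01-01 UTC) as text.
// formatCreated is a hypothetical helper name.
std::string formatCreated(std::time_t created) {
    // gmtime returns a pointer to static storage, or nullptr on failure.
    const std::tm* utc = std::gmtime(&created);
    if (utc == nullptr) return std::string();
    char buf[32];
    const std::size_t n = std::strftime(buf, sizeof(buf), "%Y-%m-%d %H:%M:%S UTC", utc);
    return std::string(buf, n);
}
```

For example, formatCreated(1677652288) returns "2023-03-01 06:31:28 UTC".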

Code: text

Now it still needs to be processed.

The object is easy to retrieve, but the array still has to be cast to the JsonArray type:

Code: text

Only then can you iterate over its elements:

Code: text

It is worth paying attention to the differences between reading a string (String) and an integer (int).

The rest should be quite simple:

Code: text

Thanks to this you can, for example, extract the latest AI response from the JSON and pass it on to a speech synthesizer, so that our ESP "speaks" ChatGPT's thoughts.

Summary

This was the simplest example of using the OpenAI API with the ESP8266, based entirely on ready-made libraries. We used WiFiClientSecureBearSSL to handle HTTPS, and DynamicJsonDocument helped us with JSON. To use one of the most powerful language models, all we needed was an ESP8266 board for PLN 17 and an OpenAI API key, although use of the API is unfortunately paid. In the case of ChatGPT, the fun costs $0.002 per 1000 tokens (about 750 words).

Before using the code in a serious project, proper verification of the SSL certificate should be added. Maintaining the ChatGPT context (conversation history) could be added as well, but more on that another time.

Do you see any potential uses for ChatGPT on ESP8266 or ESP32? I invite you to the discussion.
