Ollama API Tutorial - 100% local AI chatbots for your own projects


How can you build a project on the latest language models running locally, such as deepseek-r1, llama, qwen, gemma and mistral? How does Ollama's uniform HTTP-based interface work? I will try to demonstrate both here. We will learn how to send chat requests containing both text and images. The method discussed lets you run simple chatbots/assistants from virtually any device capable of making HTTP requests (the model itself runs on the machine hosting Ollama), so even from a Raspberry Pi or from boards with an ESP8266/ESP32.

As an introduction, let me remind you of related topics in this series. I have already used OpenAI's language model API on the ESP8266:

ESP and ChatGPT - how to use OpenAI API on ESP8266? GPT-3.5, PlatformIO

I have also presented a way to run language models on your own machine with Ollama WebUI:

ChatGPT locally? AI/LLM assistants to run on your computer - download and install

Using the same setup, I have also shown models derived from DeepSeek:

Running a miniaturised DeepSeek-R1 on consumer hardware - Ollama WebUI

Now it's time for a topic in between: here I will show how to use the Ollama API, which works much like the OpenAI API, except that the server is our own machine.

Initial requirements

In this topic I assume that WebUI is already set up and some sample models are downloaded; this can be done by following my earlier tutorial.

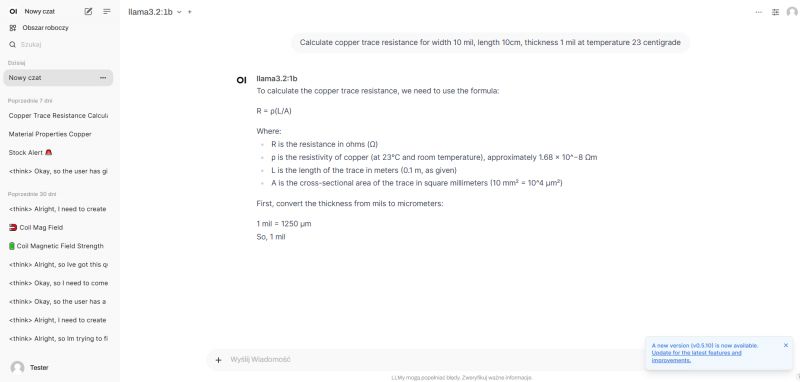

To check that everything works, we start our WebUI in Docker and ask it a question:


Only once we are sure everything works can we move on.

Basics of the API

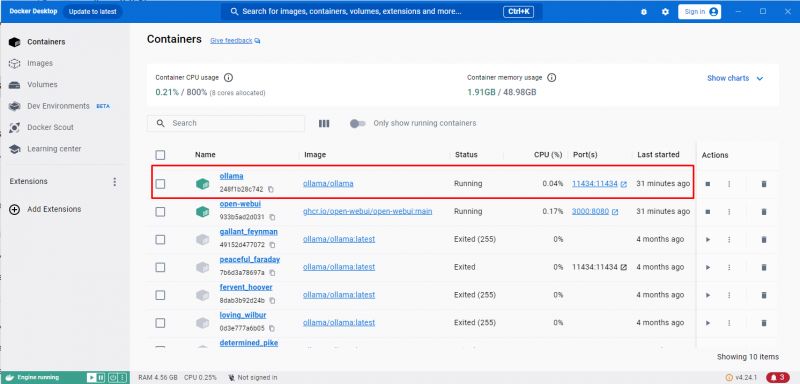

First we need to know the port Ollama listens on (by default 11434). This is not the same port as WebUI's. It is easy to find in Docker:


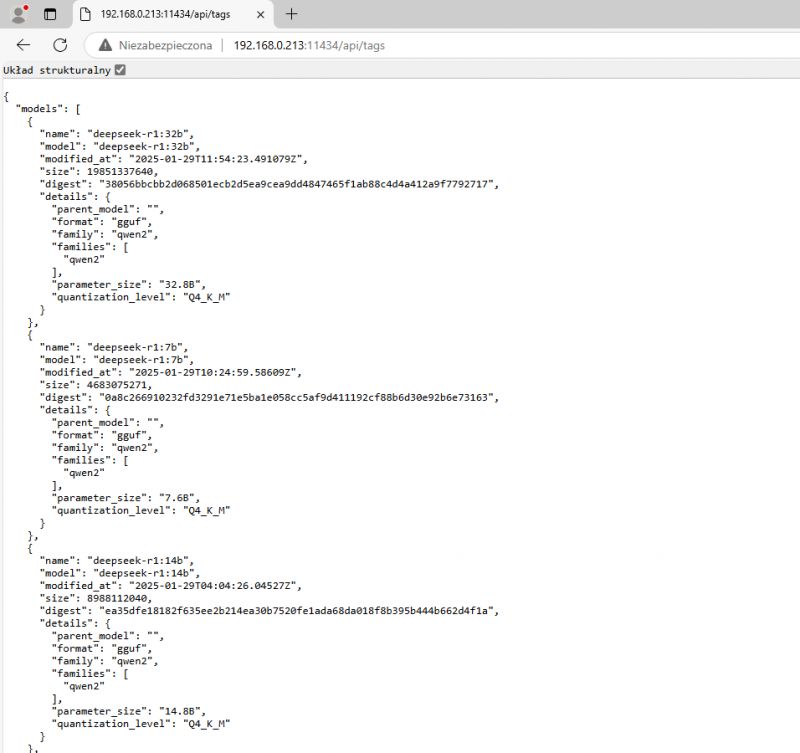

Then, as a test, open in your browser the address that lists the available models (192.168.0.213 is my server's IP; substitute your own):

http://192.168.0.213:11434/api/tags

We should receive the list in JSON format:


This confirms that the API is working and allows us to move on to the next step.

Listing models in practice

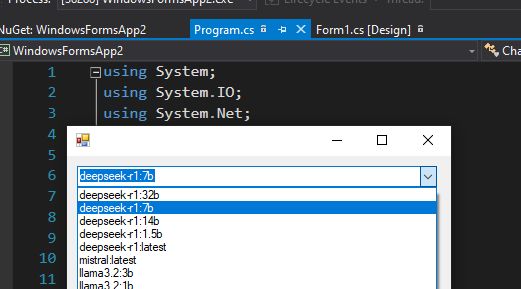

So let's make a simple chat-like test application. I decided to write it in C# with WinForms (classic Windows desktop forms). I used Visual Studio 2017, but of course you can use a newer version.

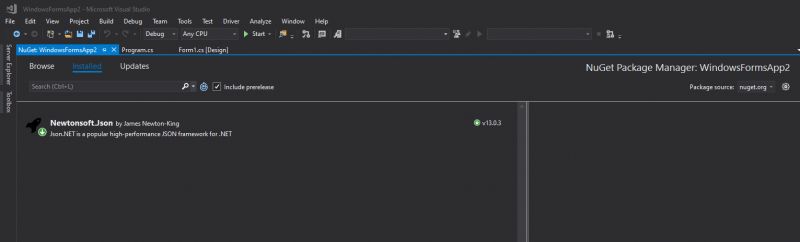

We start by filling the ComboBox drop-down list with the available models. We fetch them from the API described above via HttpWebRequest, which returns JSON that we parse with Newtonsoft.Json. This library has to be installed first (e.g. through NuGet):


And now the code, all in one file, without the visual designer:

Code: C#

The HttpWebRequest fetches the data, we read it through a StreamReader and then create a JSON object from it with JObject.Parse. Finally, we iterate over the array of models.

It works:

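For comparison, here is a minimal sketch of the same model listing for a newer .NET (5 or later), using the built-in HttpClient and System.Text.Json instead of HttpWebRequest and Newtonsoft.Json. It is not the author's code: the class and method names are hypothetical, and it only assumes that /api/tags returns a `models` array whose entries have a `name` field.

```csharp
using System.Collections.Generic;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical helper class, not taken from the article's code.
public static class OllamaModels
{
    // Extracts the "name" of every entry in the "models" array returned by GET /api/tags.
    public static List<string> ParseModelNames(string tagsJson)
    {
        var names = new List<string>();
        using JsonDocument doc = JsonDocument.Parse(tagsJson);
        foreach (JsonElement model in doc.RootElement.GetProperty("models").EnumerateArray())
        {
            names.Add(model.GetProperty("name").GetString() ?? "");
        }
        return names;
    }

    // Downloads and parses the list, e.g. with baseUrl = "http://192.168.0.213:11434".
    public static async Task<List<string>> GetModelNamesAsync(HttpClient client, string baseUrl)
    {
        string json = await client.GetStringAsync(baseUrl + "/api/tags");
        return ParseModelNames(json);
    }
}
```

In the window, the returned names can then be added to the ComboBox, e.g. with `Items.AddRange(names.ToArray())`. Reusing one HttpClient instance for the whole application is the usual practice.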

Simple query and response streaming

We already have the models available. Now it is time to put them to use.

Simple text completion uses this endpoint:

http://192.168.0.213:11434/api/generate

We send a POST request with the data in the format:

Code: JSON

There is also an optional stream argument, which specifies whether the response is streamed. We do want streaming here: it looks better and you can watch the answer appear in real time.

This way the response arrives as a stream of JSON objects, one fragment (roughly a word) at a time. This is the same effect as in ChatGPT. We have to append the received fragments to the displayed window ourselves.

Code: JSON

The done field specifies whether the given JSON is the last fragment of the response.
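A minimal sketch of both halves described above, with System.Text.Json and hypothetical names (not the author's code): building the /api/generate body (model, prompt, stream) and pulling the text fragment and the done flag out of a single streamed line. According to the Ollama API documentation, streaming is also the default when stream is omitted.

```csharp
using System.Text.Json;

// Hypothetical helper class, not taken from the article's code.
public static class OllamaGenerate
{
    // Body for POST /api/generate: the model name, the prompt and the stream flag.
    public static string BuildRequest(string model, string prompt, bool stream = true) =>
        JsonSerializer.Serialize(new { model, prompt, stream });

    // Parses one streamed line such as {"model":"...","response":"The","done":false}
    // and returns its text fragment; done is set for the last object of the answer.
    public static string ParseChunk(string line, out bool done)
    {
        using JsonDocument doc = JsonDocument.Parse(line);
        JsonElement root = doc.RootElement;
        done = root.TryGetProperty("done", out JsonElement d) && d.GetBoolean();
        return root.TryGetProperty("response", out JsonElement r) ? r.GetString() ?? "" : "";
    }
}
```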

Updated code (I added a text box, a button, etc.):

Code: C#

Now the StreamReader reads the response line by line, and we parse each line as a separate JSON object.

Example result:


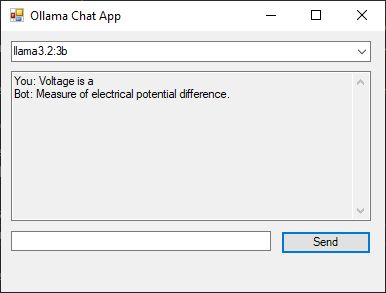
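The line-by-line reading described above could look like this with HttpClient. This is a sketch under the same assumptions, not the author's HttpWebRequest version, and the names are hypothetical. HttpCompletionOption.ResponseHeadersRead makes SendAsync return as soon as the headers arrive, so fragments can be shown while the model is still generating, and the CancellationToken gives a way to abandon an answer early.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Text;
using System.Text.Json;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper class, not taken from the article's code.
public static class OllamaStreaming
{
    // Reads newline-delimited JSON objects and passes each "response" fragment to onToken.
    // Returns the whole answer once an object with "done": true arrives (or the stream ends).
    public static async Task<string> ReadAnswerAsync(TextReader reader, Action<string> onToken,
                                                     CancellationToken ct = default)
    {
        var answer = new StringBuilder();
        string? line;
        while ((line = await reader.ReadLineAsync()) != null)
        {
            ct.ThrowIfCancellationRequested();
            if (line.Length == 0) continue; // skip empty lines
            using JsonDocument doc = JsonDocument.Parse(line);
            JsonElement root = doc.RootElement;
            if (root.TryGetProperty("response", out JsonElement r))
            {
                string token = r.GetString() ?? "";
                answer.Append(token);
                onToken(token);
            }
            if (root.TryGetProperty("done", out JsonElement d) && d.GetBoolean()) break;
        }
        return answer.ToString();
    }

    // Sends a streaming POST /api/generate and forwards the fragments as they arrive.
    public static async Task<string> GenerateAsync(HttpClient client, string baseUrl, string model,
                                                   string prompt, Action<string> onToken,
                                                   CancellationToken ct = default)
    {
        string body = JsonSerializer.Serialize(new { model, prompt, stream = true });
        using var request = new HttpRequestMessage(HttpMethod.Post, baseUrl + "/api/generate")
        {
            Content = new StringContent(body, Encoding.UTF8, "application/json")
        };
        using HttpResponseMessage response =
            await client.SendAsync(request, HttpCompletionOption.ResponseHeadersRead, ct);
        response.EnsureSuccessStatusCode();
        using Stream stream = await response.Content.ReadAsStreamAsync();
        using var reader = new StreamReader(stream);
        return await ReadAnswerAsync(reader, onToken, ct);
    }
}
```

When GenerateAsync is awaited from an async WinForms event handler, the awaits resume on the UI thread, so onToken can append directly to a TextBox and the window stays responsive.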

Home-made minichat: the basics

A chat lets you send a conversation history split between the user and the assistant. Chat uses this endpoint:

http://192.168.0.213:11434/api/chat

We send it a POST request with JSON data. At a minimum, it needs the model (model field) and the conversation history (messages field, containing the user's and the AI's messages).

An example of the JSON sent:

Code: JSON

In response, we will receive a JSON stream of this format:

Code: JSON

The last JSON received will be different:

Code: JSON
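A sketch of building the chat request and reading the streamed /api/chat lines, under the same assumptions (System.Text.Json, hypothetical names, not the author's code). The ChatMessage record mirrors the role/content pairs of the messages array; the tokens-per-second value computed from eval_count and eval_duration (given in nanoseconds) is an extra that the article's code does not do.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// One conversation entry; role is "system", "user" or "assistant".
public record ChatMessage(string role, string content);

// Hypothetical helper class, not taken from the article's code.
public static class OllamaChat
{
    // Body for POST /api/chat: the model name plus the whole list of messages.
    public static string BuildRequest(string model, IEnumerable<ChatMessage> messages,
                                      bool stream = true) =>
        JsonSerializer.Serialize(new { model, messages, stream });

    // Parses one streamed /api/chat line and returns message.content ("" if absent).
    // The final object ("done": true) also carries statistics such as eval_count
    // (generated tokens) and eval_duration (nanoseconds); when both are present,
    // tokensPerSecond is computed from them, otherwise it stays null.
    public static string ParseChunk(string line, out bool done, out double? tokensPerSecond)
    {
        using JsonDocument doc = JsonDocument.Parse(line);
        JsonElement root = doc.RootElement;
        done = root.TryGetProperty("done", out JsonElement d) && d.GetBoolean();
        tokensPerSecond = null;
        if (done
            && root.TryGetProperty("eval_count", out JsonElement count)
            && root.TryGetProperty("eval_duration", out JsonElement duration)
            && duration.GetInt64() > 0)
        {
            tokensPerSecond = count.GetInt64() / (duration.GetInt64() / 1e9);
        }
        if (root.TryGetProperty("message", out JsonElement message)
            && message.TryGetProperty("content", out JsonElement content))
        {
            return content.GetString() ?? "";
        }
        return "";
    }
}
```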

I've added a text box, a button, etc. to the code here, but that's the least important part.

Updated code:

Code: C#

The most interesting part is the SendButton_Click method, which sends the request. I do this in a blocking way, so the GUI freezes for the duration of the query, but this is just a demo version.

The result:


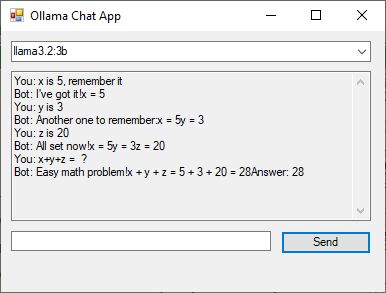
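The SendButton_Click above blocks the GUI during the query. One way to avoid that is to await an asynchronous request instead. The sketch below (hypothetical names, System.Text.Json, not the author's code) sends a single user message with stream set to false, so /api/chat replies with one JSON object whose message.content holds the whole answer.

```csharp
using System.Net.Http;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical helper class, not taken from the article's code.
public static class ChatOnce
{
    // With "stream": false, /api/chat returns a single JSON object;
    // the whole reply is in message.content.
    public static string ExtractReply(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        return doc.RootElement.GetProperty("message").GetProperty("content").GetString() ?? "";
    }

    // Sends one user message without streaming. Awaiting this from an async
    // event handler keeps the window responsive while the model is working.
    public static async Task<string> AskAsync(HttpClient client, string baseUrl,
                                              string model, string userText)
    {
        var messages = new[] { new { role = "user", content = userText } };
        string body = JsonSerializer.Serialize(new { model, messages, stream = false });
        using var httpContent = new StringContent(body, Encoding.UTF8, "application/json");
        using HttpResponseMessage response =
            await client.PostAsync(baseUrl + "/api/chat", httpContent);
        response.EnsureSuccessStatusCode();
        return ExtractReply(await response.Content.ReadAsStringAsync());
    }
}
```

From the form it could be called as `private async void SendButton_Click(object sender, EventArgs e) { outputBox.Text = await ChatOnce.AskAsync(client, baseUrl, model, inputBox.Text); }`, where outputBox, inputBox, client, baseUrl and model are placeholders. HttpClient's default Timeout is 100 seconds, which a large model running from RAM may exceed; it can be raised through the Timeout property.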

Home-made minichat: conversation history

The one thing left is to keep the full history of the conversation. A query then looks like this, for example:

Code: JSON

So we move messageHistory into the window class as a field and update it whenever the AI's response arrives.

Code: C#

We resend the whole history with each query. Time to check the AI's memory:


It works!

Forgive the missing spacing in the conversation; it's only a display issue. A longer conversation works right away as well.

One thing is worth noting: since the history is just data that we send, we can freely modify the text that the user or the model has written.
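A minimal sketch of such a history kept in the window class (hypothetical names, System.Text.Json, not the author's code). Because /api/chat does not remember earlier requests, the whole list is serialized again for every query, and since it is just our own data, any entry can be edited before the next request.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// One conversation entry; role is "user" or "assistant" here.
public record ChatMessage(string role, string content);

// Hypothetical history class, not taken from the article's code.
public class ChatHistory
{
    private readonly List<ChatMessage> messages = new List<ChatMessage>();

    public IReadOnlyList<ChatMessage> Messages => messages;

    public void AddUser(string text) => messages.Add(new ChatMessage("user", text));

    public void AddAssistant(string text) => messages.Add(new ChatMessage("assistant", text));

    // Entries can be rewritten freely, e.g. to change what the model "said" earlier.
    public void Replace(int index, string newText) =>
        messages[index] = messages[index] with { content = newText };

    // Body for POST /api/chat containing the entire conversation so far.
    public string BuildRequest(string model, bool stream = true) =>
        JsonSerializer.Serialize(new { model, messages, stream });
}
```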

Image recognition

Some AI models also support images, llava for example. Images are sent to them encoded in Base64: we attach them to the chat or completion request as an images array. An example query looks like this:

Code: JSON

Endpoint:

http://192.168.0.213:11434/api/generate

The response comes in this format:

Code: JSON

Converting bytes to a Base64 string in C# is easy; there is a ready-made function for it:

Code: C#
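A sketch of preparing such a request for llava: read the file, encode it with Convert.ToBase64String and put the result into the images array of an /api/generate body. The names are hypothetical and this is not the author's code; stream is set to false here only to get a single reply object. The Ollama API examples pass plain Base64 without a data:image/... prefix.

```csharp
using System;
using System.IO;
using System.Text.Json;

// Hypothetical helper class, not taken from the article's code.
public static class LlavaRequest
{
    // Reads a file and returns its bytes as a Base64 string.
    public static string EncodeImage(string path) =>
        Convert.ToBase64String(File.ReadAllBytes(path));

    // Body for POST /api/generate with a multimodal model such as llava:
    // "images" is an array of Base64 strings, one per attached file.
    public static string BuildGenerateBody(string model, string prompt, params string[] imagePaths)
    {
        string[] images = Array.ConvertAll(imagePaths, EncodeImage);
        return JsonSerializer.Serialize(new { model, prompt, images, stream = false });
    }
}
```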

This lets us extend our chat so that it also supports images. Along the way, we will use drag & drop so that files can simply be dragged onto our window:

Code: C#

Result:


Similarly, you can attach images to a chat conversation by placing them in the chat history, as in the example below:

Code: JSON
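For the chat variant, the images array belongs to an individual message. A sketch with a hypothetical VisionMessage record (not the author's code) that simply leaves images out of the JSON for text-only messages:

```csharp
using System.Collections.Generic;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical message type: a chat message plus an optional array of Base64 images.
// The attribute leaves "images" out of the JSON when it is null.
public record VisionMessage(
    string role,
    string content,
    [property: JsonIgnore(Condition = JsonIgnoreCondition.WhenWritingNull)]
    string[]? images = null);

public static class VisionChat
{
    // Body for POST /api/chat; the history can mix text-only and image messages.
    public static string BuildRequest(string model, List<VisionMessage> messages) =>
        JsonSerializer.Serialize(new { model, messages, stream = false });
}
```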

Of course, the reliability of the llava model itself is a separate topic, which I have already presented on the forum:

Minitest: robot vision? Multimodal AI LLaVA and workshop image analysis - 100% local

Summary

Ollama offers a unified system for running many large language models, both downloaded from the official project website and added manually from GGUF files. These usually support only text, but there are also 'multimodal' models that accept images as well, which we send encoded in Base64. The API discussed here supports 'streaming', i.e. previewing responses in real time, which closely resembles how ChatGPT works and lets us interrupt the generation early if we change our mind, and then change the query.

The API demonstration shown here used C# and WinForms, but it could just as well be done on another platform; perhaps I'll present that soon too. For now I have another project in the works; I'll only say that it is closely related to electronics, with details in the next topic.

Have you already used the Ollama API in your projects?

PS: For more information, I refer you to the Ollama documentation, and especially to its own description of the API.

Comments

Great post, thanks! What is the configuration of the machine the example code was communicating with? Are the responses sped up?

I presented details of the machine used and a response speed test here: Running the miniaturised DeepSeek-R1 on consumer hardware - Ollama WebUI. Response speeds for different model sizes: ...

The most important thing is that our data does not leak. Thanks for the tutorial.

I have a question: how do these simplified deepseek models cope with the Polish language?

At the moment, in my spare time, I'm preparing an electronics exam for AI: I want to check automatically how these models cope with various tasks. There will be a ready-made program for this so that...

How about the 32b model? Unfortunately the 14b performed poorly.

Hey, maybe instead of just setting up LLM models, explain how they can be trained. What does such a training file look like, and where do you get the data from? I set up models myself, and I even had to deal with...

I haven't delved into training/fine-tuning models yet. Test with the 32b deepseek-r1 for @krzbor: I don't think these models were trained for Polish....

Thanks for the test. As I understand it, 32b already requires a good graphics card or a lot of patience (when running from RAM). The results are still poor. However, I noticed that even the weakest model understood...

Hello, "Ollama API Tutorial - AI chatbots 100% local to use in your own projects". I may be too old (62) to get into this topic, but what I've read here is really fascinating, especially...

Unfortunately, everything is for cash and armaments. What is 'civilian' and 'penny-wise' is beta testing, or corpo and military....

If anyone wants to test different models without fiddling with manual configuration and editing JSON files, I recommend https://lmstudio.ai/. The Windows application installs like...

I'm adding an option to download a new model to the mini-program; there is an endpoint just for this: http://192.168.0.213:11434/api/pull. I will then attach the new code to the post. https://obr...