Step1 Project Background

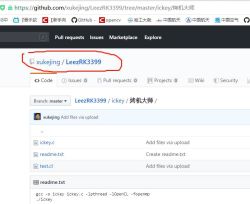

I recently released an open-source program on GitHub named "Yunhan Grill Master". The code repository address:

This stress-test ("grill") software is designed around the RK3399 chip, taking into account both the large- and small-core architecture of the CPU and the OpenCL-enabled GPU. Using pthreads, OpenMP, and OpenCL, the program keeps the processor's CPU and GPU fully loaded for an extended period. Every 5 seconds it prints to the terminal the real-time processor temperature, CPU core online status, large-core frequency, small-core frequency, CPU computing speed, and GPU computing speed. You can also comment out parts of the code so that it runs on processors that do not support OpenCL or have fewer cores.

Step2 Testbed

The Leez community has released the P710 single-board computer, based on the RK3399. The group owner has already reviewed the hardware configuration of this small computer; see "Leez-P710: Firing on all cylinders."

I was also fortunate enough to get to try out the Leez P710. Below is my trial unit. You can see that I designed dedicated cooling ducts for it.

Below is the case I designed and 3D printed. It's a small box with ports and air ducts left on the side walls.

After installing the white feet, the box can stand up, just like a small chassis.

Today's test will be done on the Leez P710.

Step3 Environment Configuration

The Debian firmware released by the Leez community already comes with GCC and the OpenMP library.

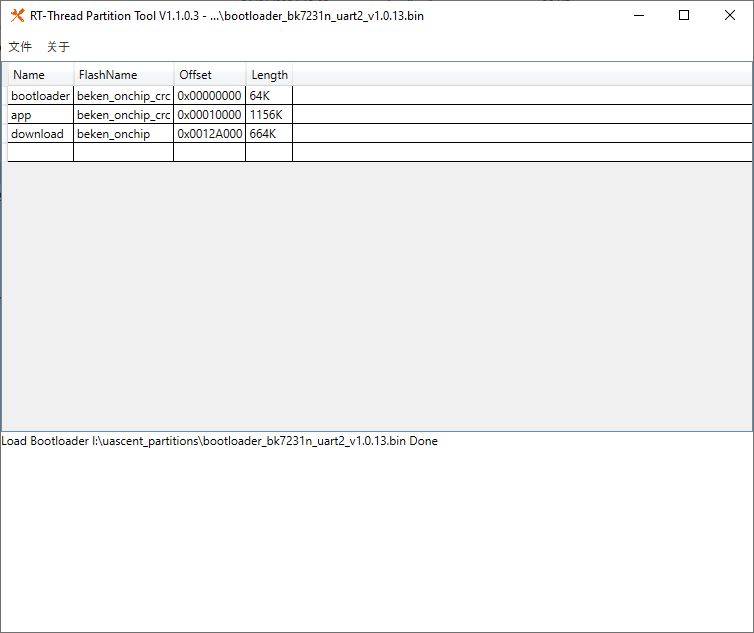

There is one thing to note, however: the GPU of the Leez P710 is a Mali-T860, but the Debian firmware released by the Leez community does not include the OpenCL header files, so compiling the code fails. We can download the Mali GPU support library from rockchip-linux's GitHub repository with the command:

git clone https://github.com/rockchip-linux/libmali.git

Then copy the include folder from the cloned repository into the /usr directory, merging it with the existing /usr/include folder.

Step4 Compile and Run

Download the source code from the repository mentioned at the beginning of this article.

Copy the ickey.c and test.cl files from the "GrillMaster" folder into a convenient directory, such as /home/test.

Compile with the following command:

gcc -o ickey ickey.c -lpthread -lOpenCL -fopenmp

Run it:

./ickey

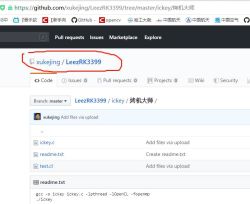

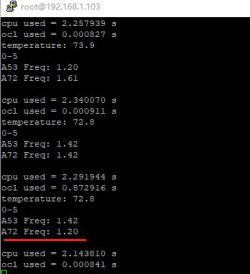

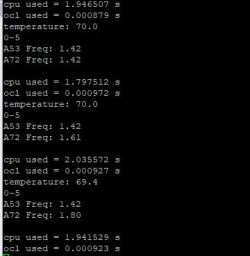

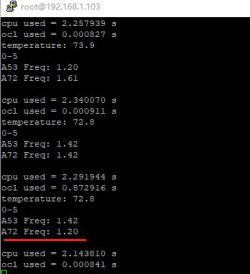

Once the program is running, the terminal prints the readings in real time, as the screenshots show. We can see that the temperature is approximately 70 degrees. Six CPU cores (0 to 5) are online, with an A53 small-core frequency of 1.42 GHz and an A72 large-core frequency of 1.8 GHz. There is no "one core struggling while the others look on" situation: all cores are working.

The CPU core frequency changes dynamically, sometimes falling to 1.42 GHz. Because all the processor cores are in use, the temperature runs slightly high, which triggers active downclocking.

The "cpu used = xxx" and "ocl used = xxx" lines in the output are the measured run times of two different algorithms, one on the CPU and one on the GPU (the exact algorithms are given in Step5). They make it possible to see whether the CPU and GPU slow down significantly when the temperature is too high. The CPU uses floating-point arithmetic to calculate pi, while the GPU uses bubble sort to sort integer data.

Continuing to roast the machine, the temperature finally stabilized at about 73 degrees, with slight fluctuations. Once downclocking had cooled the processor, the frequency rose again, the temperature climbed, and downclocking kicked in once more. The A72 core frequency dropped as low as 1.2 GHz, and the trigger temperature was approximately 72.8 degrees. This also affected computing speed: the CPU's floating-point calculation was approximately 15% slower after downclocking.

Step5 Program Principle Explanation

In the main function, three threads are started.

Thread 1 reads and prints the processor temperature, the frequency of the processor cores, and the online status of the processor cores at fixed intervals (to check whether the "one core struggling while the others look on" fiasco occurs), and also prints the run times measured by threads 2 and 3 to the terminal.

Thread 2 loads the CPU by placing the pi calculation code inside a while(1) loop.

Thread 3 loads the GPU by placing the bubble sort code inside a while(1) loop.

    int main()
    {
        /* Thread 1: prints temperature, frequencies, and timings. */
        pthread_t tid_term;
        char* p_term = NULL;
        pthread_create(&tid_term, NULL, thread_term, NULL);

        /* Thread 2: CPU load (OpenMP). */
        pthread_t tid_cpu;
        char* p_cpu = NULL;
        pthread_create(&tid_cpu, NULL, thread_cpu, NULL);

        /* Thread 3: GPU load (OpenCL). */
        pthread_t tid_ocl;
        char* p_ocl = NULL;
        pthread_create(&tid_ocl, NULL, thread_ocl, NULL);

        sleep(1);

        /* The threads loop forever, so these joins block until the program is killed. */
        pthread_join(tid_term, (void **)&p_term);
        pthread_join(tid_cpu, (void **)&p_cpu);
        pthread_join(tid_ocl, (void **)&p_ocl);
        return 0;
    }
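The worker threads hand their timings to thread 1 through shared globals (iscpu/timecpu and isocl/timeocl in the listings that follow). As a minimal sketch, assuming one wanted to guard that hand-off so the reporter never sees a half-updated pair, a mutex-protected slot could look like this. The names result_slot, slot_publish, and slot_take are hypothetical and are not part of the original program:

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* One slot per worker: the latest timing plus a "fresh" flag. */
typedef struct {
    pthread_mutex_t lock;
    double seconds;   /* last measured duration */
    bool fresh;       /* true until the reporter consumes the value */
} result_slot;

#define RESULT_SLOT_INIT { PTHREAD_MUTEX_INITIALIZER, 0.0, false }

/* Worker side: store a new measurement and mark it unread. */
void slot_publish(result_slot *slot, double seconds)
{
    assert(slot != NULL);
    pthread_mutex_lock(&slot->lock);
    slot->seconds = seconds;
    slot->fresh = true;
    pthread_mutex_unlock(&slot->lock);
}

/* Reporter side: returns true and fills *seconds if a new value was waiting. */
bool slot_take(result_slot *slot, double *seconds)
{
    bool had_value;
    assert(slot != NULL && seconds != NULL);
    pthread_mutex_lock(&slot->lock);
    had_value = slot->fresh;
    if (had_value) {
        *seconds = slot->seconds;
        slot->fresh = false;
    }
    pthread_mutex_unlock(&slot->lock);
    return had_value;
}
```

A worker would call slot_publish(&cpu_slot, dt) at the end of each iteration, and the reporting thread would call slot_take(&cpu_slot, &t) every 5 seconds and print only when it returns true.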

The function run by thread 1, which reads and prints the temperature information:

    /* TEMP_PATH, CPU0_PATH, and CPU4_PATH are macros defined elsewhere in the source. */
    void *thread_term(void *arg)
    {
        int fd;
        while (1)
        {
            /* Temperature: the file holds millidegrees, so divide by 1000. */
            fd = open(TEMP_PATH, O_RDONLY);
            char buf[20] = { 0 };            /* zeroed so atoi() sees a terminated string */
            read(fd, buf, sizeof(buf) - 1);
            double temp;
            temp = atoi(buf) / 1000.0;
            printf("temperature: %.1lf\n", temp);
            close(fd);

            /* Which CPU cores are online. */
            system("cat /sys/devices/system/cpu/online");

            /* Small-core (A53) frequency, kHz converted to GHz. */
            fd = open(CPU0_PATH, O_RDONLY);
            char buf1[20] = { 0 };
            read(fd, buf1, sizeof(buf1) - 1);
            temp = atoi(buf1) / 1000000.0;
            printf("A53 Freq: %.2lf\n", temp);
            close(fd);

            /* Large-core (A72) frequency, kHz converted to GHz. */
            fd = open(CPU4_PATH, O_RDONLY);
            char buf2[20] = { 0 };
            read(fd, buf2, sizeof(buf2) - 1);
            temp = atoi(buf2) / 1000000.0;
            printf("A72 Freq: %.2lf\n", temp);
            close(fd);
            printf("\n");

            /* Print each worker's latest timing once, then clear its flag. */
            if (iscpu == 1)
            {
                printf("cpu used = %lf s\n", timecpu);
                iscpu = 0;
            }
            if (isocl == 1)
            {
                printf("ocl used = %lf s\n", timeocl);
                isocl = 0;
            }
            sleep(5);
        }
    }
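The listing for thread 1 does not check whether open() or read() succeeded, and the article does not show how TEMP_PATH, CPU0_PATH, and CPU4_PATH are defined. As a hedged sketch, the helper below reads one integer from a sysfs-style file with error checking. The function names are hypothetical. On many Linux systems the relevant files are /sys/class/thermal/thermal_zone0/temp and /sys/devices/system/cpu/cpuN/cpufreq/scaling_cur_freq, but treat those paths as an assumption about this board rather than as the author's definitions:

```c
#include <assert.h>
#include <stdio.h>

/* Reads a single integer from a sysfs-style text file.
 * Returns 0 on success, -1 if the file cannot be opened or parsed. */
int read_sysfs_long(const char *path, long *value)
{
    assert(path != NULL && value != NULL);
    FILE *fp = fopen(path, "r");
    if (fp == NULL)
        return -1;
    int matched = fscanf(fp, "%ld", value);
    fclose(fp);
    return matched == 1 ? 0 : -1;
}

/* Thermal zone files report millidegrees Celsius. */
double millideg_to_celsius(long millideg)
{
    return millideg / 1000.0;
}

/* cpufreq files report kHz; the thread 1 listing prints GHz. */
double khz_to_ghz(long khz)
{
    return khz / 1000000.0;
}
```

With this helper, a failed read can be reported or skipped instead of printing a value parsed from an uninitialized buffer.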

The OpenMP stress function run by thread 2 (to fully load the CPU):

    void *thread_cpu(void *arg)
    {
        while (1)
        {
            double s = 1;
            double pi = 0;
            double i = 1.0;
            double n = 1.0;
            double dt;
            double start_time;
            int cnt = 0;
            start_time = microtime();
            /* Note: pi, n, s, and i are shared by all six OpenMP threads and each
               iteration depends on the previous one, so the value of pi is not
               reliable. The loop serves purely as a CPU load. */
            #pragma omp parallel for num_threads(6)
            for (cnt = 0; cnt<100000000; cnt++)
            {
                pi += i;
                n = n + 2;
                s = -s;
                i = s / n;
            }
            pi = 4 * pi;
            dt = microtime() - start_time;
            /* Publish the elapsed time for thread 1 to print. */
            timecpu = dt;
            iscpu = 1;
        }
    }
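The loop in thread_cpu is a pure load generator: its variables are updated by several OpenMP threads at once, so the result is not a trustworthy value of pi. If a correct result were also wanted, one possible approach (a sketch, not the author's code) is to compute each term of the Leibniz series from the loop index and combine the partial sums with an OpenMP reduction. The article does not show microtime(); microtime_sketch below is an assumed stand-in built on clock_gettime:

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <time.h>

/* Seconds from a monotonic clock (assumed stand-in for microtime()). */
double microtime_sketch(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Leibniz series: pi = 4 * sum over k >= 0 of (-1)^k / (2k + 1).
 * Each term depends only on k, so iterations are independent and the
 * partial sums can be merged with a reduction. */
double leibniz_pi(long terms)
{
    assert(terms > 0);
    double sum = 0.0;
    long k;
    #pragma omp parallel for reduction(+:sum)
    for (k = 0; k < terms; k++) {
        double sign = (k % 2 == 0) ? 1.0 : -1.0;
        sum += sign / (2.0 * (double)k + 1.0);
    }
    return 4.0 * sum;
}
```

Compiled with -fopenmp the loop is spread across the available cores; without that flag the pragma is ignored and the same code runs serially. Timing it is a matter of calling microtime_sketch() before and after leibniz_pi() and subtracting.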

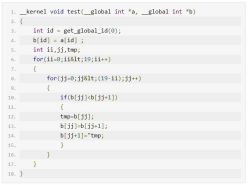

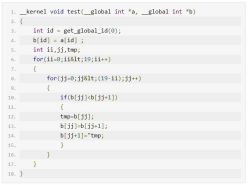

The OpenCL stress function run by thread 3 (to fully load the GPU):

    void *thread_ocl(void *arg)
    {
        int array_a[20] = { 0, 1, 8, 7, 6, 2, 3, 5, 4, 9,17,19,15,10,18,16,14,13,12,11 };
        int array_b[20] = { 0 };   /* output array, all zeros */
        size_t datasize = 20 * sizeof(int);
        size_t ocl_string_size;
        char *ocl_string;
        double start_time, dt, dt_err;

        /* Measure the overhead of the timer itself. */
        start_time = microtime();
        dt_err = microtime() - start_time;

        ocl_string = (char *)malloc(400);
        //ocl_string = (char *)malloc(20);

        cl_platform_id platform_id;
        cl_device_id device_id;
        cl_context context;
        cl_command_queue command_queue;
        cl_mem buffer_a, buffer_b;
        cl_program program;
        cl_kernel kernel;
        cl_event kernelEvent;

        clGetPlatformIDs(1, &platform_id, NULL);
        clGetDeviceIDs(platform_id, CL_DEVICE_TYPE_GPU, 1, &device_id, NULL);

        context = clCreateContext(NULL, 1, &device_id, NULL, NULL, NULL);
        command_queue = clCreateCommandQueue(context, device_id, 0, NULL);

        buffer_a = clCreateBuffer(context, CL_MEM_READ_ONLY, datasize, NULL, NULL);
        /* buffer_b is read back after the kernel runs, so the kernel must be able to write it. */
        buffer_b = clCreateBuffer(context, CL_MEM_READ_WRITE, datasize, NULL, NULL);

        /* Load the kernel source and upload the input data. */
        ocl_string_size = get_ocl_string("test.cl", ocl_string);
        clEnqueueWriteBuffer(command_queue, buffer_a, CL_FALSE, 0, \
            datasize, array_a, 0, NULL, NULL);
        clEnqueueWriteBuffer(command_queue, buffer_b, CL_FALSE, 0, \
            datasize, array_b, 0, NULL, NULL);
        program = clCreateProgramWithSource(context, 1, (const char **)&ocl_string, \
            &ocl_string_size, NULL);

        clBuildProgram(program, 1, &device_id, NULL, NULL, NULL);
        kernel = clCreateKernel(program, "test", NULL);

        clSetKernelArg(kernel, 0, sizeof(cl_mem), &buffer_a);
        clSetKernelArg(kernel, 1, sizeof(cl_mem), &buffer_b);

        size_t global_work_size[1] = { 20 };
        while (1)
        {
            start_time = microtime();
            clEnqueueNDRangeKernel(command_queue, kernel, 1, NULL, \
                global_work_size, NULL, 0, NULL, &kernelEvent);
            clWaitForEvents(1, &kernelEvent);
            clReleaseEvent(kernelEvent);   /* avoid leaking one event per iteration */
            clEnqueueReadBuffer(command_queue, buffer_b, CL_TRUE, 0, \
                datasize, array_b, 0, NULL, NULL);
            dt = microtime() - start_time - dt_err;
            /* Publish the elapsed time for thread 1 to print. */
            timeocl = dt;
            isocl = 1;
        }

        /* Not reached while the loop above runs forever; kept for completeness. */
        clReleaseKernel(kernel);
        clReleaseProgram(program);
        clReleaseCommandQueue(command_queue);
        clReleaseMemObject(buffer_a);
        clReleaseMemObject(buffer_b);
        clReleaseContext(context);
        free(ocl_string);
    }
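thread_ocl reads test.cl into a fixed 400-byte buffer through get_ocl_string, whose implementation is not shown in the article. As a sketch of an alternative that sizes the buffer from the file itself, the hypothetical helper below (load_text_file is my own name, not the author's) loads a whole text file and returns its length, which is the pair of values clCreateProgramWithSource needs:

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

/* Loads a whole file into a freshly allocated, NUL-terminated buffer.
 * On success returns the buffer (the caller frees it) and stores the byte
 * count in *length; returns NULL on any error. */
char *load_text_file(const char *path, size_t *length)
{
    assert(path != NULL && length != NULL);
    FILE *fp = fopen(path, "rb");
    if (fp == NULL)
        return NULL;

    /* Determine the file size by seeking to the end. */
    if (fseek(fp, 0, SEEK_END) != 0) { fclose(fp); return NULL; }
    long size = ftell(fp);
    if (size < 0) { fclose(fp); return NULL; }
    rewind(fp);

    char *text = malloc((size_t)size + 1);
    if (text == NULL) { fclose(fp); return NULL; }

    size_t got = fread(text, 1, (size_t)size, fp);
    fclose(fp);
    if (got != (size_t)size) { free(text); return NULL; }

    text[got] = '\0';
    *length = got;
    return text;
}
```

Sizing the buffer this way means a longer kernel file cannot overflow it, and a missing test.cl shows up as a NULL return rather than as a failed kernel build later on.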

Because we are using OpenCL, we also need to write an OpenCL kernel (.cl) file, here named test.cl.
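The contents of test.cl are not reproduced in this article. Purely as an illustration of how a bubble-sort-style algorithm can be divided into independent pieces of work, here is odd-even transposition sort written in plain host-side C. This is an assumption about the general approach and not the author's kernel; the function name is hypothetical:

```c
#include <assert.h>
#include <stddef.h>

/* Odd-even transposition sort: n phases of neighbour compare-and-swap.
 * Within one phase the compared pairs do not overlap, which is what would
 * allow a GPU to handle each pair in a separate work-item. */
void odd_even_sort(int *data, size_t n)
{
    assert(data != NULL || n == 0);
    for (size_t phase = 0; phase < n; phase++) {
        /* Even phases compare (0,1), (2,3), ...; odd phases (1,2), (3,4), ... */
        for (size_t i = phase % 2; i + 1 < n; i += 2) {
            if (data[i] > data[i + 1]) {
                int tmp = data[i];
                data[i] = data[i + 1];
                data[i + 1] = tmp;
            }
        }
    }
}
```

Applied to the 20-element array_a from thread_ocl, which is a permutation of 0 to 19, the function leaves each value i at index i.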

Summary

The group owner's article, "Leez-P710: Firing on all cylinders," also shows screenshots of a stress test using StressAppTest.

It shows that the RK3399's A72 big cores downclock in several steps: 1.8 GHz, 1.6 GHz, 1.4 GHz, 1.2 GHz, and so on.

This test found that when the RK3399 reached approximately 73 degrees, the A72 core frequency dropped three steps to 1.2 GHz and then remained stable.

Overall, the "Yunhan Grill Master" test results were not significantly different from what was expected. Some downclocking occurred due to overheating, but it was not as severe as on the Raspberry Pi 3.

The performance impact of running at 1.2 GHz is tolerable. In practice, the CPU and GPU are very rarely both fully loaded at the same time, so under typical loads the A72 cores can maintain 1.8 GHz for an extended period.
