A short, impromptu lecture on NAND memory:

NAND is not NOR: the manufacturers' goal was memory with a much higher packing density on the silicon, but the price is leakage at the gates and defects in the silicon. In short, the raw cells are unreliable. So what can be done? Invite a mathematician, let him generate some repair data, and store it in an additional memory area (conventionally called the SpareArea).

And that is the whole catch with NAND memory.

In the beginning, there was SLC NAND.

SLC (single-level cell) means that one bit of data is stored in each memory cell. In a simplified picture, for memory powered at 3.3 V, the cell's transistor sits in one of two states, corresponding to roughly 0 V or 3.3 V.

Then someone had an idea: hey, hold on, since the information in a transistor is stored as a voltage, could we put just a little charge on the gate so that the transistor is only half on?

Ha! It worked. That is how MLC memory was born: one transistor stores 2 bits, represented by 4 voltage levels, for example 0 V, 1 V, 2 V, and 3 V.

And the race was on. Can you go further? Of course you can: TLC (3 bits, i.e. 8 voltage levels) and QLC (4 bits, i.e. 16 voltage levels) followed, on the same silicon area, because it is still one transistor.
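A small sketch of the arithmetic behind SLC/MLC/TLC/QLC: n bits per cell need 2^n distinguishable levels. The evenly spaced voltages and the Gray-code assignment of bit patterns to levels are simplifying assumptions for illustration; real devices use vendor-specific threshold voltages and mappings.

```python
# Simplified model: n bits per cell -> 2**n levels, spaced evenly from 0 V to vmax.
# The Gray-code mapping below is an illustrative assumption, not any vendor's table.

CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def levels(bits_per_cell: int) -> int:
    """Number of distinguishable states needed to store bits_per_cell bits."""
    return 2 ** bits_per_cell


def gray(n: int) -> int:
    """Binary-reflected Gray code: consecutive values differ in exactly one bit."""
    return n ^ (n >> 1)


def level_map(bits_per_cell: int, vmax: float = 3.0) -> list[tuple[float, str]]:
    """Nominal level voltages paired with the bit pattern each level represents."""
    n = levels(bits_per_cell)
    step = vmax / (n - 1)
    return [(round(i * step, 3), format(gray(i), f"0{bits_per_cell}b")) for i in range(n)]


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {bits} bit(s) per cell -> {levels(bits)} levels")
    for volts, pattern in level_map(2):  # MLC, matching the 0/1/2/3 V example
        print(f"{volts:.1f} V -> {pattern}")
```

Gray coding is a common choice because a cell misread as a neighbouring level then corrupts only one bit, which is the kind of error ECC handles best. Note also how the gap between levels shrinks as bits per cell grow, which is why denser cells need stronger correction.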

So what next? How do you increase capacity when the transistor is hard to shrink any further?

Build upward, like a tower block. Manufacturers started stacking layers of cells: instead of a single layer on the silicon, there are now, for example, 100, 200, and probably even 300 layers.

I just read that Samsung expects to reach 280 layers by 2024:

https://www.purepc.pl/samsung-przygotujemy-si...sh-o-najwiekzym-zageszczenia-komorek-w-branzy

And how do you keep all this under control when there are more and more defects?

Use stronger mathematics: whatever you write gets stored, and if bits are corrupted on readback, the math reconstructs them. There are processors; let them crunch the data, that's what they are for.
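The "mathematician" idea in its smallest form: a Hamming(7,4) code, the simplest textbook example, protects 4 data bits with 3 parity bits and can correct any single flipped bit. Real NAND with requirements like 24 bits per 1 KB needs much stronger codes (e.g. BCH); this sketch only shows the principle of recomputing corrupted bits from stored redundancy.

```python
# Hamming(7,4): 4 data bits + 3 parity bits; any single flipped bit can be corrected.
# Codeword positions are numbered 1..7, with parity bits at positions 1, 2 and 4.


def encode(d: list[int]) -> list[int]:
    """Encode data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(c: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, error position); position 0 means no error found."""
    c = list(c)
    syndrome = 0
    for pos, bit in enumerate(c, start=1):
        if bit:
            syndrome ^= pos  # XOR of the positions of all 1-bits (0 for a valid codeword)
    if syndrome:
        c[syndrome - 1] ^= 1  # the syndrome is the position of the flipped bit
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    stored = encode(data)
    stored[4] ^= 1  # simulate a cell flipping at position 5 while the data sits in flash
    print(decode(stored))  # ([1, 0, 1, 1], 5)
```

If two bits flip, this code "corrects" the wrong bit, which is exactly why dense NAND needs codes that can fix many errors per codeword.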

For example, the datasheet for the K9GAG08U0E NAND says:

ECC Requirement: 24bit/(1K +54.5)Byte

Which roughly means: use error-correcting codes capable of correcting any 24 bits in each 1 KB of page data (together with its 54.5-byte share of the spare area).

Since the page is 8192+436 bytes, this means 8 separate ECC codewords per page, each able to correct 24 bits (and 8 × 54.5 = 436, exactly the size of the spare area).
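Do the parity bytes for a 24-bit code actually fit in a 54.5-byte share of the spare area? A rough check, assuming a binary BCH code (the datasheet note does not say which code to use): a t-error-correcting BCH code over GF(2^m) needs at most m·t parity bits, where m is the smallest value for which the whole codeword fits in 2^m − 1 bits.

```python
import math

# Page geometry and ECC requirement from the K9GAG08U0E datasheet note.
PAGE_MAIN = 8192   # main (data) area per page, bytes
PAGE_SPARE = 436   # spare area per page, bytes
SECTOR = 1024      # ECC unit: "1K" of data
T = 24             # bits that must be correctable per ECC unit


def bch_budget(sector_bytes: int = SECTOR, t: int = T) -> tuple[int, int]:
    """Return (m, upper bound on parity bytes) for a binary BCH code over one sector.

    Assumption: a shortened binary BCH code over GF(2^m) with at most m*t parity bits.
    """
    data_bits = sector_bytes * 8
    m = 1
    while 2 ** m - 1 < data_bits + m * t:  # codeword must fit in 2^m - 1 bits
        m += 1
    return m, math.ceil(m * t / 8)


sectors = PAGE_MAIN // SECTOR              # ECC codewords per page
spare_per_sector = PAGE_SPARE / sectors    # spare bytes available to each codeword
m, parity_bytes = bch_budget()

if __name__ == "__main__":
    print(f"{sectors} codewords per page, {spare_per_sector} spare bytes each")
    print(f"BCH over GF(2^{m}): at most {parity_bytes} parity bytes per 1 KB sector")
```

Under this assumption the parity needs at most 42 of the 54.5 bytes; what the vendor puts in the remainder is up to the vendor.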

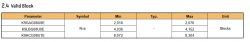

So far so good, we can get a handle on this, but the datasheet also says something else:

And this is where I just throw up my hands.

What?! Entire blocks damaged?

A memory block is made up of pages, so for the K9GAG..., with 128 pages per block, each damaged block means (8192+436) × 128 = 1,104,384 bytes, just over 1 MB, written off.
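The same arithmetic as a tiny sketch. Splitting the block into raw bytes (spare area included) and user-data bytes is an illustrative choice; it shows that the user-data part of one block is exactly 1 MiB.

```python
PAGE_BYTES = 8192 + 436   # main + spare area per page (K9GAG08U0E)
PAGE_DATA = 8192          # user-data part of a page
PAGES_PER_BLOCK = 128


def block_raw_bytes() -> int:
    """Raw size of one block, spare area included."""
    return PAGE_BYTES * PAGES_PER_BLOCK


def data_lost(bad_blocks: int) -> int:
    """User-data bytes that become unusable when bad_blocks blocks are retired."""
    return bad_blocks * PAGE_DATA * PAGES_PER_BLOCK


if __name__ == "__main__":
    print(block_raw_bytes())                       # 1104384
    print(f"{block_raw_bytes() / 2**20:.3f} MiB")  # 1.053 MiB
    print(data_lost(1) == 2**20)                   # True
```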

So the whole problem is: how do you store data in such leaky memory?

This is where software comes in: you keep a table in some block saying, for example, that block 1536 is damaged, so don't go there, use block 2043 instead.
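A minimal sketch of such a replacement table, reusing the 1536 → 2043 example. The class name, the pool of reserved spare blocks, and the chain-following lookup are assumptions for illustration; how a real device stores this table on flash is vendor-specific and not shown.

```python
class RemapTable:
    """Hypothetical bad-block replacement table (not any vendor's on-flash format).

    Assumes the spare blocks are reserved, i.e. never used as ordinary data blocks.
    """

    def __init__(self, spare_blocks: list[int]) -> None:
        self.free_spares = list(spare_blocks)  # replacement blocks not yet handed out
        self.remap: dict[int, int] = {}        # bad block -> replacement block

    def mark_bad(self, block: int) -> int:
        """Record `block` as damaged and return the block that now replaces it."""
        if block in self.remap:
            return self.resolve(block)
        if not self.free_spares:
            raise RuntimeError("no spare blocks left")
        replacement = self.free_spares.pop(0)
        self.remap[block] = replacement
        return replacement

    def resolve(self, block: int) -> int:
        """Block to actually use, following the chain if a replacement also failed."""
        while block in self.remap:
            block = self.remap[block]
        return block


if __name__ == "__main__":
    table = RemapTable(spare_blocks=[2043, 2044, 2045])
    table.mark_bad(1536)
    print(table.resolve(1536))  # 2043
    print(table.resolve(100))   # 100: healthy blocks are not remapped
    table.mark_bad(2043)        # the replacement itself fails later
    print(table.resolve(1536))  # 2044
```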

And now the magic begins! Every manufacturer, and every memory, does it differently: one uses Hamming codes, another BCH, another Reed-Solomon (RS). Which data goes into each correction code, and in what order, is also entirely up to the vendor.
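To illustrate why "which data, in what order" matters, here are two hypothetical ways of laying out the eight 1 KB codewords of an 8192+436-byte page. The 42-byte parity size and both layouts are assumptions for illustration, not taken from any datasheet.

```python
PAGE_MAIN, PAGE_SPARE = 8192, 436
SECTOR = 1024               # data bytes per codeword
PARITY = 42                 # assumed ECC bytes per codeword (hypothetical)
SECTORS = PAGE_MAIN // SECTOR


def split_main_then_spare(raw: bytes, i: int) -> tuple[bytes, bytes]:
    """Layout A (hypothetical): all data first, ECC bytes packed into the spare area."""
    data = raw[i * SECTOR:(i + 1) * SECTOR]
    spare = raw[PAGE_MAIN:]
    return data, spare[i * PARITY:(i + 1) * PARITY]


def split_interleaved(raw: bytes, i: int) -> tuple[bytes, bytes]:
    """Layout B (hypothetical): data and ECC alternate, codeword by codeword."""
    start = i * (SECTOR + PARITY)
    return raw[start:start + SECTOR], raw[start + SECTOR:start + SECTOR + PARITY]


if __name__ == "__main__":
    raw = bytes(i % 251 for i in range(PAGE_MAIN + PAGE_SPARE))  # dummy page dump
    a, b = split_main_then_spare(raw, 1), split_interleaved(raw, 1)
    print(a[0] == b[0])  # False: the same raw page yields different "sector 1" data
```

Codeword 0 happens to start at offset 0 in both layouts, but every later one differs; read a dump with the wrong layout and the ECC check fails while the data comes out shuffled.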

Bad blocks (BB) are also a free-for-all, since there is no standard here either, so everyone does as they please. One vendor simply skips damaged blocks and writes sequentially, while another stores a table at a certain block/page number, in a format known only to itself.
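A minimal sketch of the "skip damaged blocks and write sequentially" approach, assuming the list of bad blocks is already known; the function names are hypothetical. Logical block n simply lands on the n-th good physical block.

```python
def good_blocks(total_blocks: int, bad: set[int]) -> list[int]:
    """Physical blocks in order, with the known-bad ones left out."""
    return [b for b in range(total_blocks) if b not in bad]


def physical_block(logical: int, total_blocks: int, bad: set[int]) -> int:
    """Skip-block scheme (hypothetical sketch): logical block n is the n-th good block."""
    good = good_blocks(total_blocks, bad)
    if not 0 <= logical < len(good):
        raise IndexError(f"logical block {logical} out of range")
    return good[logical]


if __name__ == "__main__":
    bad = {2, 5}
    print([physical_block(n, 10, bad) for n in range(8)])  # [0, 1, 3, 4, 6, 7, 8, 9]
    # A newly discovered bad block shifts every logical block after it:
    print(physical_block(3, 10, bad | {3}))  # 6 instead of 4
```

Unlike a replacement table, where healthy blocks keep their addresses, here one new bad block shifts everything after it. When reading a raw dump, you therefore need to know which scheme the device used, or the data ends up in the wrong place.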

To sum up, NAND memory is very interesting in terms of both the hardware and the software solutions it relies on.