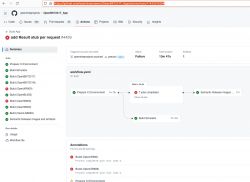

But one thing still confuses me: if the problem was caused by "build2" finishing too late, why were other assets sometimes missing as well? "build2" only does the Simulator (Windows) build...


p.kaczmarek2 wrote: But one thing still confuses me: if the problem was caused by "build2" finishing too late, why were other assets sometimes missing as well?

max4elektroda wrote: I'm not sure if my "fix" really is a fix.

```yaml
merge:
  runs-on: ubuntu-20.04
  # This job starts only after refs, build and build2 have all completed
  needs: [refs, build, build2]
  steps:
    # Combine the artifacts uploaded by earlier jobs into one;
    # delete the individual artifacts once they are merged
    - name: Merge Artifacts
      uses: actions/upload-artifact/merge@v4
      with:
        name: ${{ env.APP_NAME }}_${{ needs.refs.outputs.version }}
        delete-merged: true
```

```yaml
# Restore/save pip's download cache, keyed on the requirements.txt contents
- name: Cache Python packages
  uses: actions/cache@v3
  with:
    path: ~/.cache/pip
    key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}
    restore-keys: |
      ${{ runner.os }}-pip-

- name: Install Python dependencies
  run: pip3 install -r requirements.txt

- name: Cache APT packages
  uses: actions/cache@v3
  with:
    path: /var/cache/apt
    key: ${{ runner.os }}-apt-cache
    restore-keys: |
      ${{ runner.os }}-apt-cache
```

max4elektroda wrote: So my conclusion, if I didn't miss something: nice idea, but not achievable with reasonable effort.

max4elektroda wrote: What do you think about this "timeout"?

DeDaMrAz wrote: Unfortunately, I only have DS18B20 sensors to play with right now.

max4elektroda wrote: If you're referring to my post about the DS1820: that's just laziness in my writing. I don't have any DS1820 either, only DS18B20s; I simply drop the "B" in my posts...

p.kaczmarek2 wrote: Just don't call SetChannel if no new value has been read.